Beyond the Price Tag – Why Organizations Choose Nutanix

In many customer conversations today, the discussion about Nutanix starts in a very pragmatic place: price. Before we get the chance to talk about architecture, automation, or hybrid cloud strategies, most organizations first want to answer a simpler question: Can we even afford this option? Only...

10 Things You Probably Didn’t Know About Nutanix

Nutanix is often described with a single word: HCI. That description is not wrong, but it is incomplete. Over the last decade, Nutanix has evolved from a hyperconverged infrastructure (HCI) pioneer into a mature enterprise cloud platform that now sits at the center of many VMware replacement...

Cloud Repatriation and the Growth Paradox of Public Cloud IaaS

Over the past two years, a new narrative has taken hold in the cloud market. No, it is not always about sovereign cloud. 🙂 Headlines talk about cloud repatriation, which is nothing really new, but it is still out there. CIOs speak openly about pulling some workloads back on-premises. Analysts write about...

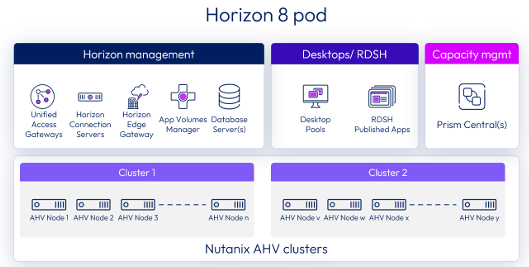

Nutanix’s EUC Stack Reduces TCO and Improves ROI

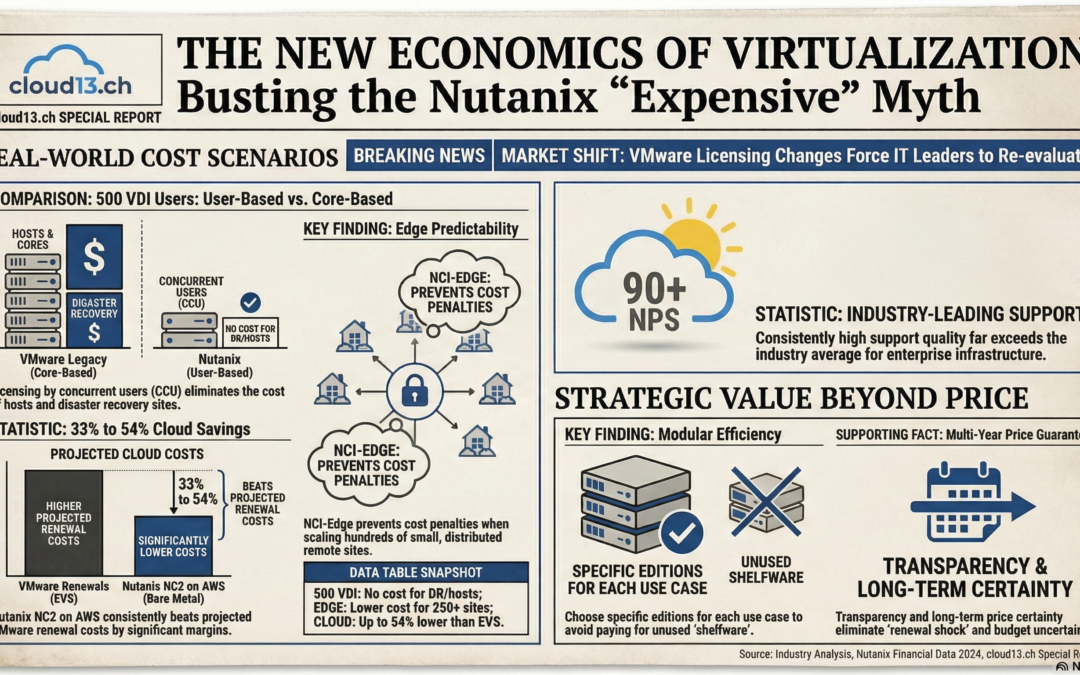

Virtual Desktop Infrastructure (VDI) has always been a conservative technology. It sits close to users, productivity, and operational risk. For years, the dominant conversation revolved around brokers, protocols, and user experience. Today, that conversation is shifting more towards licensing,...

Multi-cloud is normal in public cloud. Why is “single-cloud” still normal in private cloud?

If you ask most large organizations why they use more than one public cloud, the answers are remarkably consistent. It is not fashion, and it is rarely driven by engineering curiosity. It is risk management and a best-of-breed approach. Enterprises distribute workloads across multiple public...

Nutanix should not be viewed primarily as a replacement for VMware

Public sector organizations rarely change infrastructure platforms lightly. Stability, continuity, and operational predictability matter more than shiny and modern solutions. Virtual machines became the dominant abstraction because they allowed institutions to standardize operations, separate...

Nutanix Is Quietly Redrawing the Boundaries of What an Infrastructure Platform Can Be

Real change happens when a platform evolves in ways that remove old constraints, open new economic paths, and give IT teams strategic room to maneuver. Nutanix has introduced enhancements that, taken individually, appear to be technical refinements but, observed together, represent something...

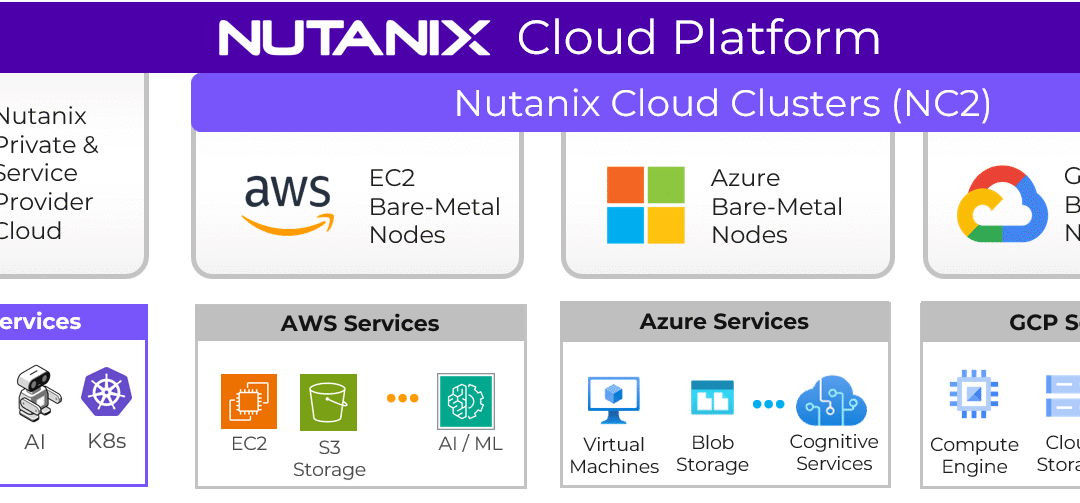

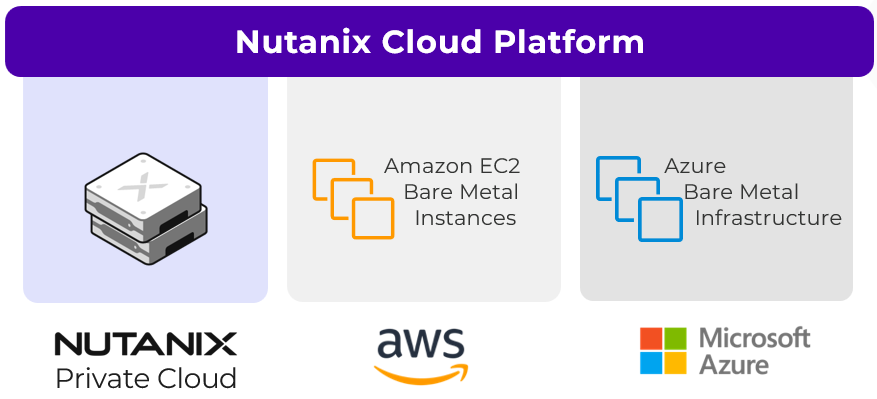

A Primer on Nutanix Cloud Clusters (NC2)

If you strip cloud strategy down to its essentials, you quickly notice that IT leaders are protecting three things. I am talking about continuity, autonomy and freedom of movement. Yet most clouds, private or public, quietly undermine at least one of these freedoms. You can gain elasticity but lose...