VMware vSphere – The Enterprise Data Platform

The world is creating and consuming more data than ever, and several reasons explain this trend. Data is the foundation for many digital products and services. More and more companies want or need to keep their data on-premises for reasons such as data proximity, performance, data privacy, data sovereignty, data security, and predictable cost control. We also know that the edge is growing much faster than large data centers. These and other factors are why CIOs and decision-makers are now focusing on data more than ever before.

We live in a digital era where data is one of the most valuable assets. The whole economy from the government to local companies would not be able to function without data. Hence, it makes sense to structure and analyze the data, so a company’s data infrastructure becomes a profit center and is not just seen as a cost center anymore.

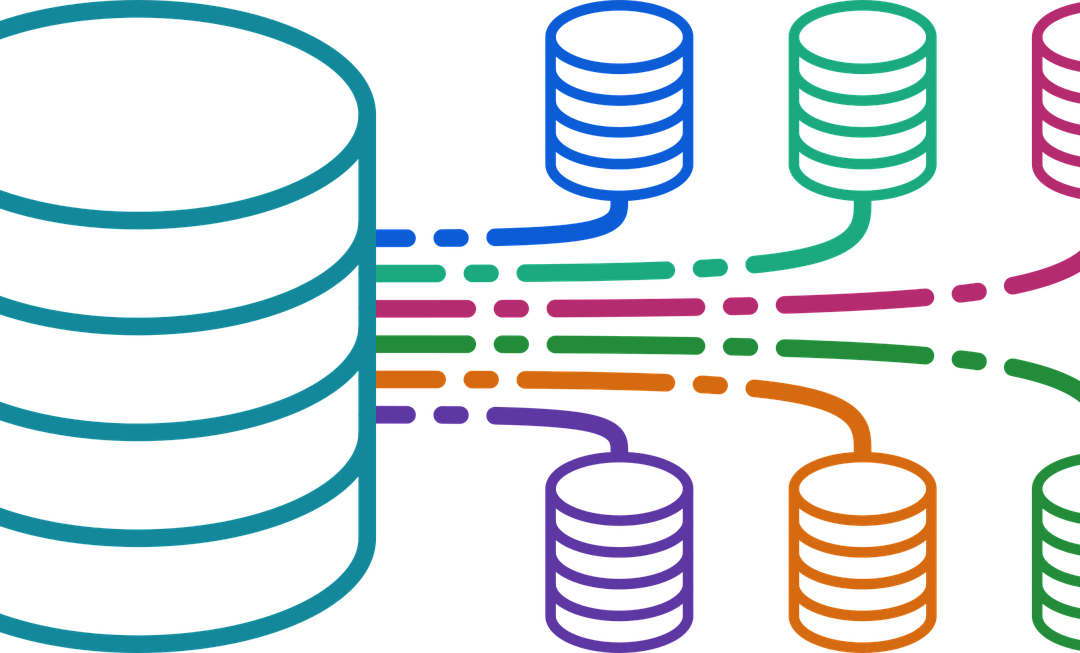

Data Sprawl

A lot of enterprises are confronted with so-called data sprawl. Data sprawl means that an organization’s data is stored on and consumed by different devices and operating systems in different locations. In some cases, neither the consumers nor the IT teams are sure anymore where some of the data is stored and how it should be accessed. This is a huge risk and results in a loss of security and productivity.

Since the discussions about sovereign clouds and data sovereignty started, it has never been more important to know where a company’s data resides, and where and how that data can be consumed.

Enterprises have started to follow a cloud-smart approach: They put the right application and its data in the right cloud, for the right reasons. In other words, they now think twice about where and how they store their data.

What databases are popular?

When talking to developers and IT teams, these are the names I hear most often (in no particular order):

- Oracle

- MSSQL

- MySQL

- PostgreSQL

I think it is fair to say that a lot of customers are looking for alternatives to expensive databases and database management systems (DBMS). It seems that Postgres and MySQL have gained a lot of popularity over the past years, while Oracle is still considered one of the best databases on the market, even though it is also seen as one of the most expensive and least liked solutions. I also hear other solutions like MongoDB, MariaDB, and Redis mentioned in more and more discussions.

DBaaS and Public Cloud Characteristics

It is nothing new: Developers are looking for a public-cloud-like experience for their on-premises deployments. They want an easy and smooth self-service experience without the need for opening tickets and waiting for several days to get their database up and running. And we also know that open-source and freedom of choice are becoming more important to companies and their developers. Some of the main drivers here are costs and vendor lock-in.

IT teams, on the other hand, want to provide security and compliance, more standardization around versions and types, and an easy way to back up and restore databases. But the truth is that a lot of companies are struggling to provide this kind of Database-as-a-Service (DBaaS) experience to their developers.

The idea and expectation of DBaaS are to reduce management and operational efforts with the possibility to easily scale databases up and down. The difference between the public cloud DBaaS offering and your on-premises data center infrastructure is the underlying physical and virtual platform.

On-premises, the hardware could theoretically be anything, but VMware vSphere is still the most used virtualization platform for an enterprise’s data (center) infrastructure.

VMware vSphere and Data

VMware shared that telemetry from its customer base shows that almost 25% of VMware workloads are data workloads (databases, data warehouses, big data analytics, data queueing, and caching), and it looks like MS SQL Server still has the biggest share of all databases that are hosted on-premises.

They are also seeing a high double-digit growth (approx. 70-90%) when it comes to MySQL and steady growth with PostgreSQL. Rank 4 is probably Redis followed by MongoDB.

VMware Data Solutions

VMware Data Solutions, formerly known as Tanzu Data Services, is a powerful part of the entire VMware portfolio and consists of:

- VMware GemFire – Fast, consistent data for web-scaling concurrent requests fulfills the promise of highly responsive applications.

- VMware RabbitMQ – A fast, dependable enterprise message broker that provides reliable communication among servers, apps, and devices.

- VMware Greenplum – VMware Greenplum is a massively parallel processing database. Greenplum is based on open-source Postgres, enabling Data Warehousing, aggregation, AI/ML and extreme query speed.

- VMware SQL – VMware’s open-source SQL Database (Postgres & MySQL) is a Relational database service providing cost-efficient and flexible deployments on-demand and at scale. Available on any cloud, anywhere.

- VMware Data Services Manager – Reduce operational costs and increase developer agility with VMware Data Services Manager, the modern platform to manage and consume databases on vSphere.

VMware Data Services Manager and VMware SQL

VMware SQL allows customers to deploy curated versions of PostgreSQL and MySQL, and VMware Data Services Manager (DSM) is the solution that enables customers to create the DBaaS experience their developers are looking for.

Data Services Manager has the following key features:

- Provisioning – Provision different configurations of databases (MySQL, Postgres, and SQL Server) with either freely configurable or pre-defined sizing of compute and memory resources, depending on user permissions

- Backup & Restore – Backup, Transactional log, Point in Time Recovery (PiTR), on-demand or as scheduled

- Scaling – Modify instances depending on usage (scale up, scale down, disk extension)

- Replication – Replicate (Cold/Hot or Read Replicas) across managed zones

- Monitoring – Monitor database engine, vSphere infrastructure, networking, and more.

…and supports the following components and versions (with DSM v1.4):

- MySQL 8.0.30

- Postgres 10.23.0, 11.18.0, 12.13.0, 13.9.0

- MSSQL Server 2019 (Standard, Developer, Enterprise Edition)

Companies with a lot of databases now have a way to manage, control, and secure at least their Postgres, MySQL, and MSSQL database instances from a centralized tool that can be accessed via the UI or API.
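To make the provisioning rules a bit more tangible, here is a minimal sketch in Python of how a self-service request could be checked against the supported versions and the sizing rules described above. This is only an illustration and not the DSM API: the `DatabaseRequest` type, the `validate_request` function, and the preset sizes are hypothetical names and values I made up for this example. Only the engine versions are taken from the DSM v1.4 list above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Engines and versions as listed in this post for DSM v1.4.
SUPPORTED_VERSIONS = {
    "mysql": {"8.0.30"},
    "postgres": {"10.23.0", "11.18.0", "12.13.0", "13.9.0"},
    "mssql": {"2019"},
}

# Hypothetical pre-defined sizes as (vCPUs, memory in GiB); not taken from DSM.
PRESET_SIZES = {
    "small": (2, 4),
    "medium": (4, 16),
    "large": (8, 32),
}


@dataclass
class DatabaseRequest:
    """A hypothetical self-service request for a new database instance."""
    engine: str
    version: str
    preset: Optional[str] = None      # name of a pre-defined size
    vcpus: Optional[int] = None       # custom sizing: number of vCPUs
    memory_gib: Optional[int] = None  # custom sizing: memory in GiB


def validate_request(req: DatabaseRequest, may_customize: bool) -> Tuple[int, int]:
    """Return (vcpus, memory_gib) for a valid request, or raise ValueError."""
    versions = SUPPORTED_VERSIONS.get(req.engine.lower())
    if versions is None:
        raise ValueError(f"unsupported engine: {req.engine}")
    if req.version not in versions:
        raise ValueError(f"unsupported {req.engine} version: {req.version}")

    # Pre-defined sizing is open to every user.
    if req.preset is not None:
        if req.preset not in PRESET_SIZES:
            raise ValueError(f"unknown preset: {req.preset}")
        return PRESET_SIZES[req.preset]

    # Freely configurable sizing depends on the user's permissions.
    if not may_customize:
        raise ValueError("custom sizing requires additional permissions")
    if req.vcpus is None or req.memory_gib is None:
        raise ValueError("custom sizing needs both vcpus and memory_gib")
    if req.vcpus < 1 or req.memory_gib < 1:
        raise ValueError("vcpus and memory_gib must be positive")
    return (req.vcpus, req.memory_gib)
```

A developer without extra permissions could call `validate_request(DatabaseRequest("postgres", "13.9.0", preset="small"), may_customize=False)`, while a request for a Postgres version outside the list would be rejected with a `ValueError`.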

Project Moneta

VMware’s vision is to become the cloud platform of choice. What started with compute, storage and network, continues with data: make it as easy to consume as the rest of their software-defined data center stack.

VMware has started with DSM and sees Moneta, which is still an R&D project, as the next evolution. The focus of Moneta is to bring better self-service and programmatic consumption capabilities (e.g., integration with GitHub).

Project Moneta will provide native integration with vSphere+ and the Cloud Consumption Interface (CCI). While nothing is official yet, I think of it as a vSphere+ and VMware Cloud add-on service that would provide data infrastructure capabilities.

Final Words

If your developers want to use PostgreSQL, MySQL, or MSSQL, and if your IT struggles to deploy, manage, secure, and back up those databases, then DSM with VMware SQL can help. Both solutions are also well suited for disconnected use cases or air-gapped environments.

Note: The DB engines are certified, tested and supported by VMware.