I get it, believe me. The public cloud promised a lot: speed, scale, flexibility. But over time, cracks have appeared. Bills grow faster than workloads, and compliance becomes harder, not easier. And some applications never really fit, especially those that demand low latency or strict control.

So, we are told, companies are pulling workloads back from the public cloud. These reverse cloud migrations are known as cloud repatriation. Make no mistake: repatriation is not a reversal of digital transformation or an abandonment of the public cloud. It’s a correction, a realignment based on experience, governance needs, and financial pressure.

If your expectations have not been met, the answer isn’t to go backward. The challenge is to retain the benefits of the cloud (automation, elasticity, operational efficiency) while regaining the control that is often lost in the public model.

Moving away from the public cloud doesn’t mean giving up the cloud. The real question is: how do we keep what worked and fix what didn’t?

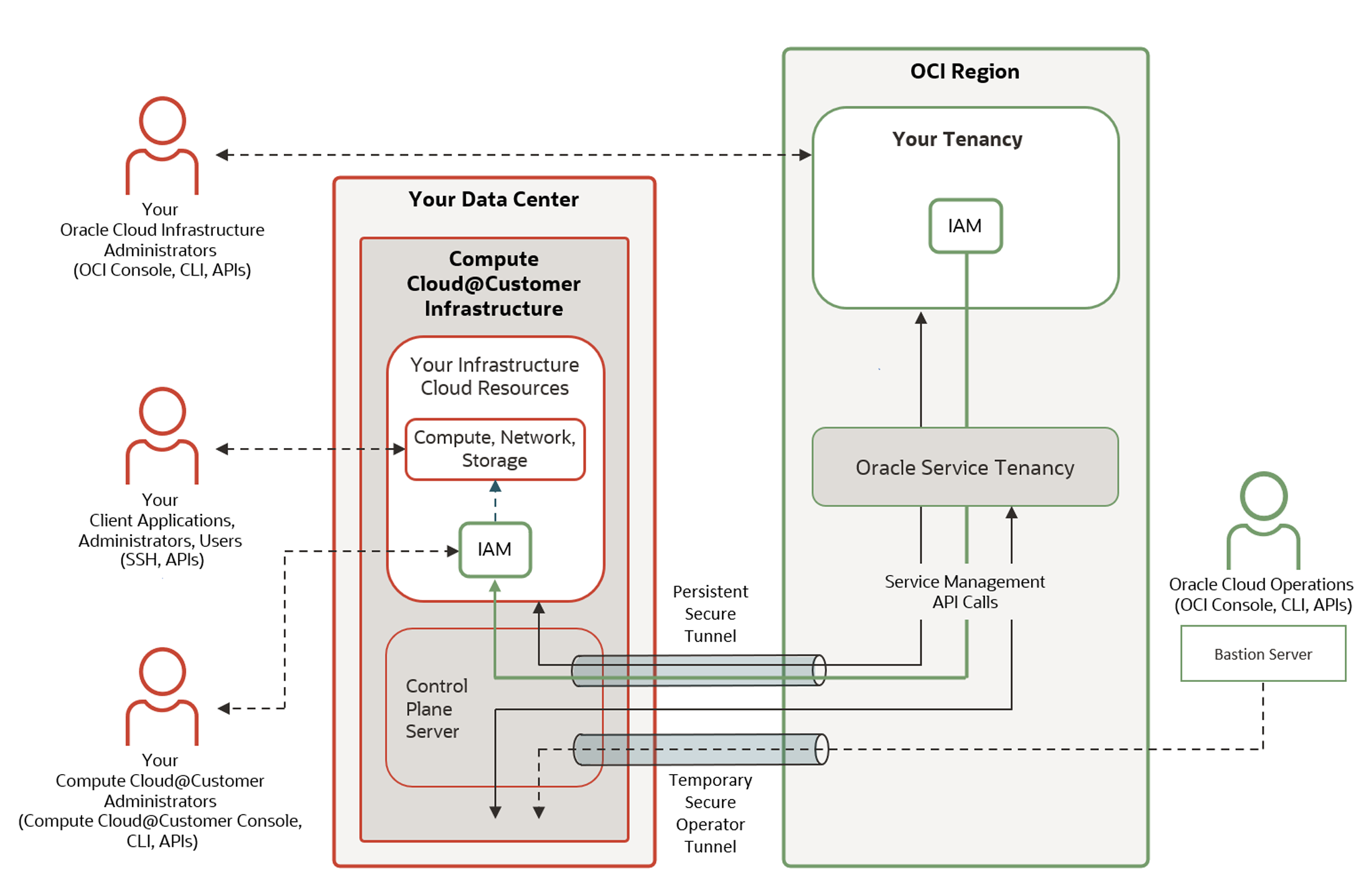

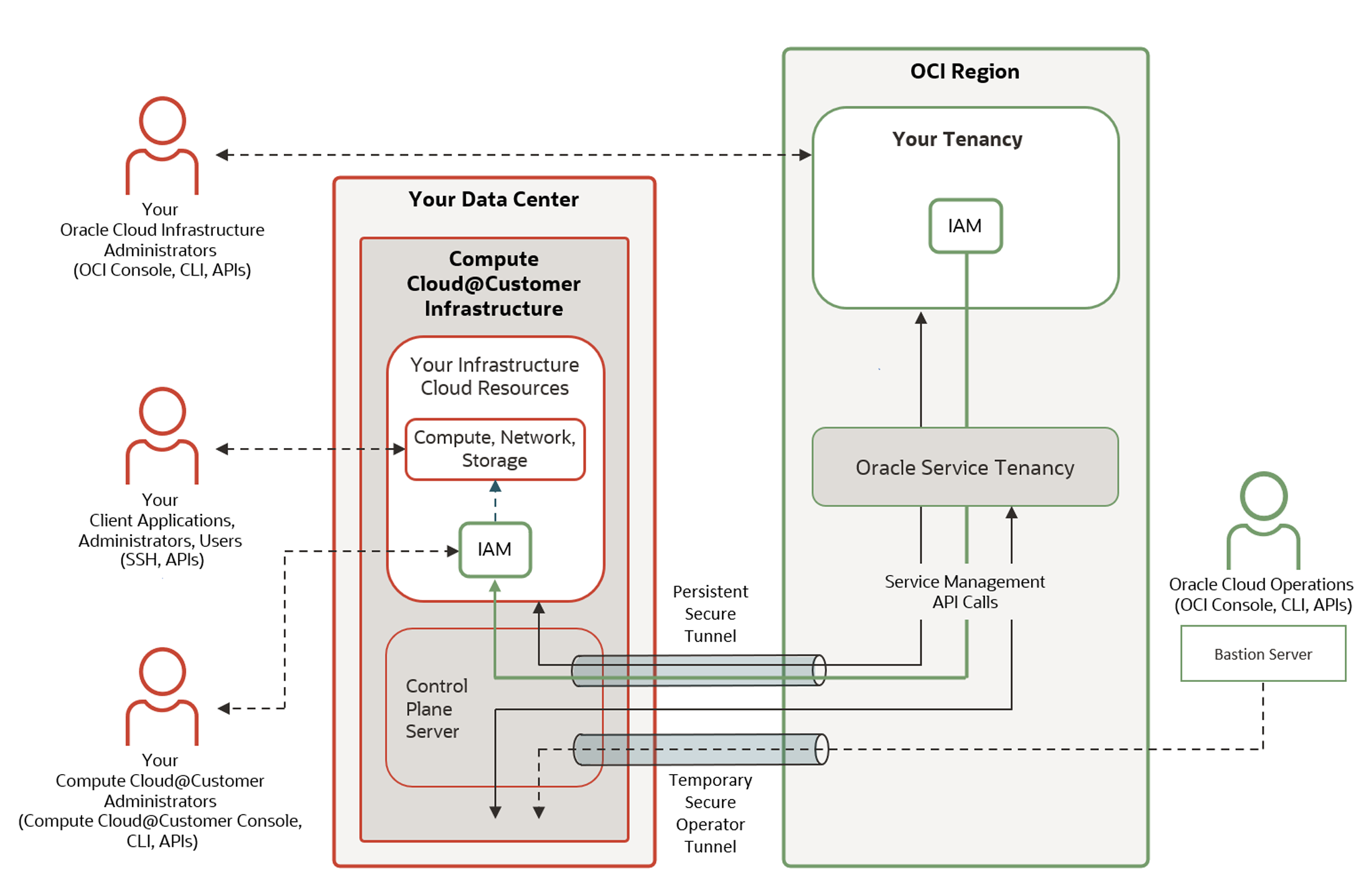

Oracle Compute Cloud@Customer (C3) was built precisely for that purpose. It brings Oracle’s public cloud infrastructure and tooling into your data center, under your governance, with the same APIs, security, and operational model. What follows is how C3 directly addresses the core reasons driving repatriation and why many enterprises are choosing a more strategic hybrid path forward. C3 changes everything.

Cost Control Without Surprises

Ask any IT leader what drove their move to the cloud, and chances are “cost savings” is on the list. Ask them what drove them back, and they will likely say “cost surprises.” 🙂

Public cloud can scale, but it also scales your bill. Between data egress fees, idle VM costs, and unpredictable licensing, many organizations find their cloud TCO spiraling. Oracle Compute Cloud@Customer changes the equation. It delivers the Oracle Cloud Infrastructure (OCI) experience on-premises, in a consumption-based OpEx model, but with predictability built in. No data egress fees. No hidden costs. No guessing. Just clear, auditable resource usage within your own data center.

Performance Without Compromise

Latency is often a business risk. Trading platforms, AI inference, and high-speed transaction systems all demand millisecond or sub-millisecond responsiveness. But in the public cloud, compute and data are often separated across zones or regions.

With C3, you bring compute right to where your data lives. Ultra-low-latency, high-throughput workloads no longer need to be shoehorned into far-off regions. The cloud comes to you, backed by high-performance storage, native GPU options, and OCI’s virtual cloud networking.

Data Sovereignty, Security & Compliance – Rebuilt for Reality

Oracle C3 provides on-premises infrastructure, fully managed by Oracle, but entirely controlled by you. Data never leaves your facility unless you allow it. Access is managed through Operator Access Control, which gives you precise control over who can log in, when, and for what. Encryption at rest, in motion, and during access? Built in. Full audit trails? Native. That is the level of control regulators expect and enterprises now demand.

Governance, Visibility & Control

One of the hidden challenges of public cloud? Shadow IT. Teams spin up services without oversight, leading to risks in compliance, billing, and security posture.

With Oracle C3, everything runs within the bounds of your governance framework. You control IAM, compartmentalization, policy enforcement, tagging, metering, and quotas. It is the same control plane as OCI, so your security posture doesn’t depend on where the workload runs.
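To make "policy enforcement, tagging, and quotas" a little more concrete, here is a minimal Python sketch of the kind of guardrail a compartment applies before admitting a new instance. It is a hypothetical model, not the OCI or C3 API: the `Compartment` and `InstanceRequest` classes, the `admit` function, the core quota, and the required tag keys are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Compartment:
    """Hypothetical compartment with a core quota and mandatory tag keys (not an OCI class)."""
    name: str
    core_quota: int                  # assumed: maximum OCPUs allowed in this compartment
    required_tags: frozenset[str]    # assumed: tag keys every resource must carry
    cores_in_use: int = 0


@dataclass
class InstanceRequest:
    """Hypothetical provisioning request for a single VM."""
    display_name: str
    ocpus: int
    tags: dict[str, str] = field(default_factory=dict)


def admit(compartment: Compartment, request: InstanceRequest) -> list[str]:
    """Return the policy violations; an empty list means the request is admitted."""
    violations = []
    missing = sorted(compartment.required_tags - set(request.tags))
    if missing:
        violations.append(f"missing required tags: {', '.join(missing)}")
    if compartment.cores_in_use + request.ocpus > compartment.core_quota:
        violations.append(
            f"quota exceeded: {compartment.cores_in_use} + {request.ocpus} > {compartment.core_quota}"
        )
    if not violations:
        # Only admitted requests consume quota, so rejected ones leave the meter unchanged.
        compartment.cores_in_use += request.ocpus
    return violations


if __name__ == "__main__":
    prod = Compartment("prod", core_quota=64, required_tags=frozenset({"cost-center", "owner"}))
    print(admit(prod, InstanceRequest("db-01", ocpus=16, tags={"cost-center": "fin", "owner": "ops"})))
    # prints []
    print(admit(prod, InstanceRequest("adhoc", ocpus=8)))
    # prints ['missing required tags: cost-center, owner']
```

The design point is that the check happens at admission time and is deny-by-default: an untagged "shadow IT" instance never gets created, so it never shows up on the bill in the first place.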

Operational Resilience You Actually Own

Let’s be honest: handing over infrastructure management can reduce operational overhead, but it can also mean giving up visibility, scheduling flexibility, and recovery control.

Oracle Compute Cloud@Customer delivers the best of both worlds. Oracle manages the infrastructure lifecycle, from firmware updates to patching. But you define the maintenance windows and the failover behaviour. DR scenarios, backup policies, and hardware separation are yours to orchestrate.

What Is Operator Access Control?

Oracle Operator Access Control (OpCtl) is a feature used in products like Oracle Compute Cloud@Customer (C3) and Exadata Cloud@Customer, designed to give customers:

- Explicit approval over Oracle’s administrative access
- Time-bound, purpose-specific access windows
- Comprehensive logging and session recording
- Segregation of duties and multi-party authorization

So, before any Oracle operator can access the C3 environment for maintenance, updates, or troubleshooting, the customer must approve the request, define the time window, and scope the level of access permitted. All sessions are fully audited, with logs available to the customer for compliance and security reviews. This ensures that sensitive workloads and data remain under strict governance, aligning with zero-trust principles and regulatory requirements.

In practice, you can say:

“No one from Oracle can access my infrastructure unless I approve it, for a specific task, at a specific time.”

That makes OpCtl an excellent tool for operational governance, auditability, and security assurance.

With the U.S. CLOUD Act in mind, OpCtl, in my opinion, strengthens your legal and practical posture, since you control external access to the C3 systems. You can also use the logs to prove that no access occurred without your approval.
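The approval rule described in this section can be modeled in a few lines. The following Python sketch is a hypothetical illustration of the decision logic only: deny by default, allow an action only if it was explicitly approved for that operator, falls inside the approved time window, and is within the approved scope, and write an audit entry for every attempt. It is not Oracle’s OpCtl API; the class names, action strings, request IDs, and log format are all assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessApproval:
    """Hypothetical customer-issued approval for one operator access request."""
    request_id: str
    operator: str
    purpose: str
    allowed_actions: frozenset[str]   # assumed scope, e.g. {"update-firmware"}
    window_start: datetime
    window_end: datetime


@dataclass
class AccessGate:
    """Hypothetical gate: only approved, in-window, in-scope actions pass."""
    approvals: dict[str, AccessApproval] = field(default_factory=dict)
    audit_log: list[str] = field(default_factory=list)

    def approve(self, approval: AccessApproval) -> None:
        self.approvals[approval.request_id] = approval

    def check(self, request_id: str, operator: str, action: str, at: datetime) -> bool:
        approval = self.approvals.get(request_id)
        allowed = (
            approval is not None
            and approval.operator == operator
            and approval.window_start <= at < approval.window_end
            and action in approval.allowed_actions
        )
        # Every attempt is recorded, whether it was allowed or denied.
        verdict = "ALLOW" if allowed else "DENY"
        self.audit_log.append(f"{at.isoformat()} {operator} {request_id} {action} {verdict}")
        return allowed


if __name__ == "__main__":
    start = datetime(2025, 1, 15, 22, 0, tzinfo=timezone.utc)
    gate = AccessGate()
    gate.approve(AccessApproval("REQ-1", "oracle-op-7", "firmware update",
                                frozenset({"update-firmware"}), start, start + timedelta(hours=2)))
    print(gate.check("REQ-1", "oracle-op-7", "update-firmware", start + timedelta(minutes=30)))   # True
    print(gate.check("REQ-1", "oracle-op-7", "read-tenant-data", start + timedelta(minutes=30)))  # False: out of scope
    print(gate.check("REQ-1", "oracle-op-7", "update-firmware", start + timedelta(hours=3)))      # False: window closed
    for entry in gate.audit_log:
        print(entry)
```

The audit log is what turns "no access without my approval" from a promise into something you can show an auditor: denied attempts are recorded just like allowed ones.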

Let’s Think Differently. Give It A Try!

A Swiss professor recently outlined four conditions for digital sovereignty in the public cloud. The assumptions are valid, but they are rooted in a narrow view of how the cloud has to work: if you want cloud, you have to give up control, and if you want sovereignty, you have to give up most cloud services.

That binary thinking doesn’t hold up anymore, and it never should have taken hold in the first place.

Let’s be clear: digital sovereignty is not about avoiding cloud, it’s about deploying it on your terms. And that’s exactly what Oracle Compute Cloud@Customer (C3) enables as a third path (besides public cloud and repatriation).

Let’s take the arguments one by one.

1. “Only unmodified open source software ensures sovereignty”

Yes, I agree, open standards matter. But sovereignty isn’t just about code transparency. It’s about control over where software runs, how it’s operated, and who has access.

With C3, you run any open-source stack you want, inside your own data center. But more importantly, you also control the platform it runs on. Compute, storage, and networking stay within your facility, under your governance. You decide the architecture, the patch cycle, and the integrations. And you do it without giving up cloud automation, elasticity, or DevOps tooling.

2. “Internal know-how must be retained”

Agreed. Sovereignty without competence is meaningless.

C3 supports the same APIs, SDKs, Terraform modules, and CLI as the Oracle public cloud. That means your teams build skills once and apply them everywhere – on-premises, in the public cloud, or across hybrid landscapes.

You keep operational knowledge in-house. You train on real cloud-native patterns. And you run them on infrastructure that belongs to you.

3. “Avoid proprietary, specialized services”

This is where things get nuanced.

Most enterprises don’t want to avoid modern services. They just want freedom of movement (aka portability). With C3, you are not locked into a proprietary ecosystem. You get Oracle Cloud Infrastructure services deployed in your data center, on infrastructure fully under your legal and physical control.

Because the environment is API-compatible with OCI, you are not locked in – you are portable by design. Move workloads to Oracle public regions. Or any other cloud. Or don’t. It is your choice. I would call that leverage.

4. “SaaS without data export is unacceptable”

Right again. Exit strategy matters.

C3 isn’t SaaS. It’s IaaS and PaaS delivered as a service inside your firewall. And because you control the storage, the networking, and the OS stack, you always retain the ability to export your data by using open formats, standard tools, and your own access policies.

Want to back up to another system? Build cross-platform failover? Disconnect from Oracle entirely? No problem. Your data stays in your hands.

Final Thought

Cloud repatriation is happening for good reasons. But walking away from cloud entirely isn’t the answer. The better move is to rethink where the cloud belongs and who’s in control of it.

Oracle Compute Cloud@Customer gives you the cloud experience your teams want, with the sovereignty your business needs.

And today, that may be the single most strategic infrastructure choice you can make (besides Oracle’s EU Sovereign Cloud and Dedicated Cloud offerings).

If you are working in the public sector, have a look at this article: Enabling Public Sector Unity – How Oracle Alloy Could Power a Government Cloud and Cross-Agency Collaboration