Why the Sovereign AI Platform from Nutanix Ends the DIY Illusion

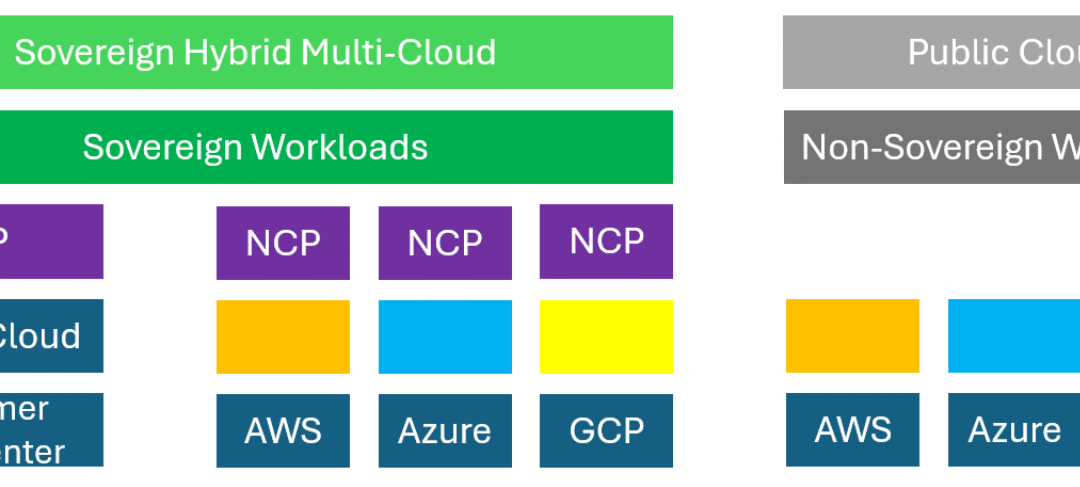

AI has moved into every boardroom conversation, but meaningful results rarely come from building everything from scratch. For enterprises and public organizations, sovereignty has become the real test of digital trust, and Nutanix platforms such as NCP, NKP, and NAI offer an answer where others struggle.

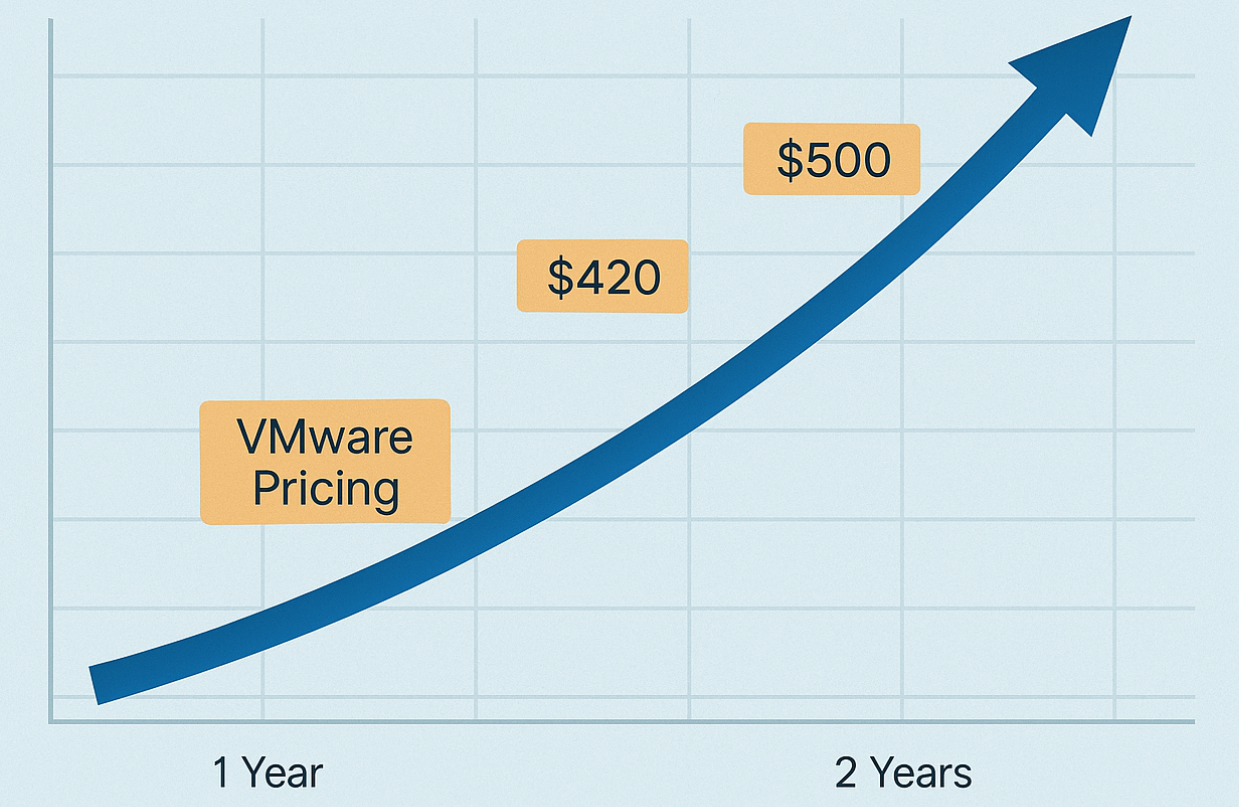

Over the past year, enterprises and public institutions have increasingly tried to build their own AI platforms. The idea sounds compelling: run open-source large language models in-house, fine-tune them with proprietary data, and operate a fully controlled environment. In practice, this approach proves difficult.

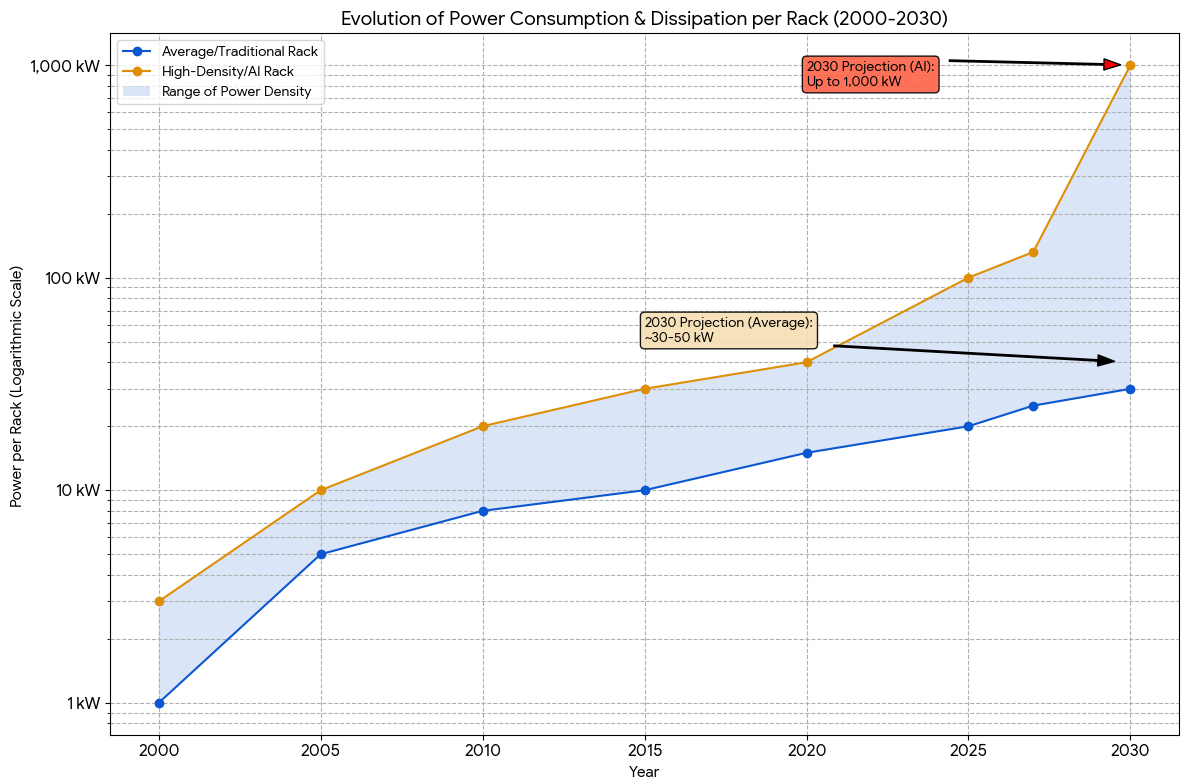

The pace of change is relentless. Models evolve in weeks, tooling shifts every quarter, and lifecycle management is more complex than most teams anticipate. Maintaining infrastructure, compliance, and updates quickly consumes far more resources than planned. What was meant to guarantee independence often ends in fragile prototypes that never scale.

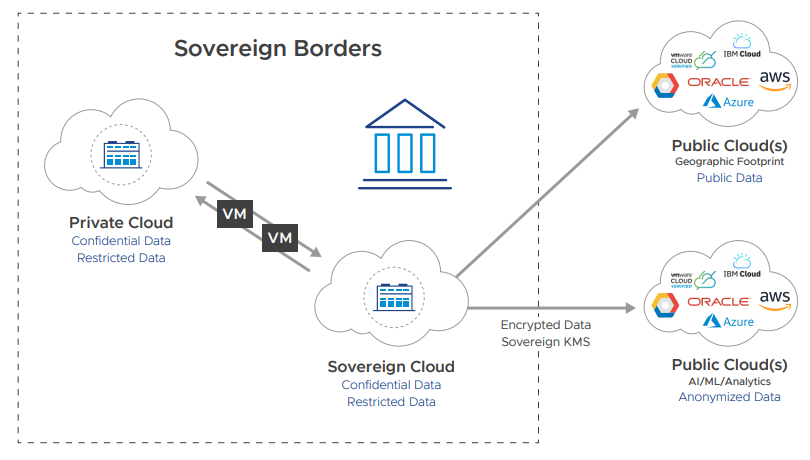

True sovereignty is not only about doing everything internally. It is also about keeping control while relying on platforms that deliver the operational stability needed to run AI securely and at scale.

Nutanix Cloud Platform – The Sovereign Private Cloud Foundation

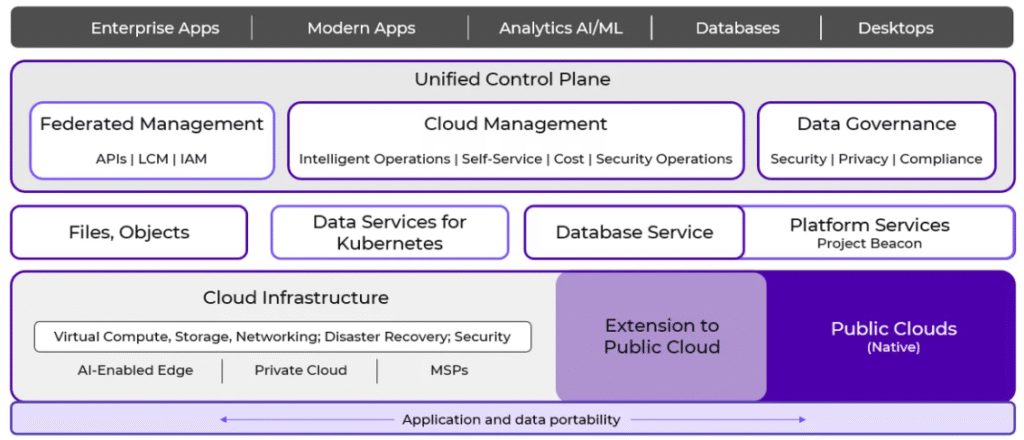

Nutanix Cloud Platform (NCP) provides exactly that. It offers a private cloud foundation that allows organizations to remain in control of infrastructure and data, while avoiding the trap of re-creating a hyperscaler internally.

Sovereignty in this context means deciding who governs updates, how compliance is enforced, and which integrations are allowed. NCP supports these decisions through its modular architecture. Customers can adopt only the layers they need, combine them with open-source components, or run third-party solutions on the same platform.

For AI, where workloads evolve quickly and ecosystems are fragmented, this adaptability is critical. NCP ensures that the foundation remains under the customer’s control while still being ready for future demands.

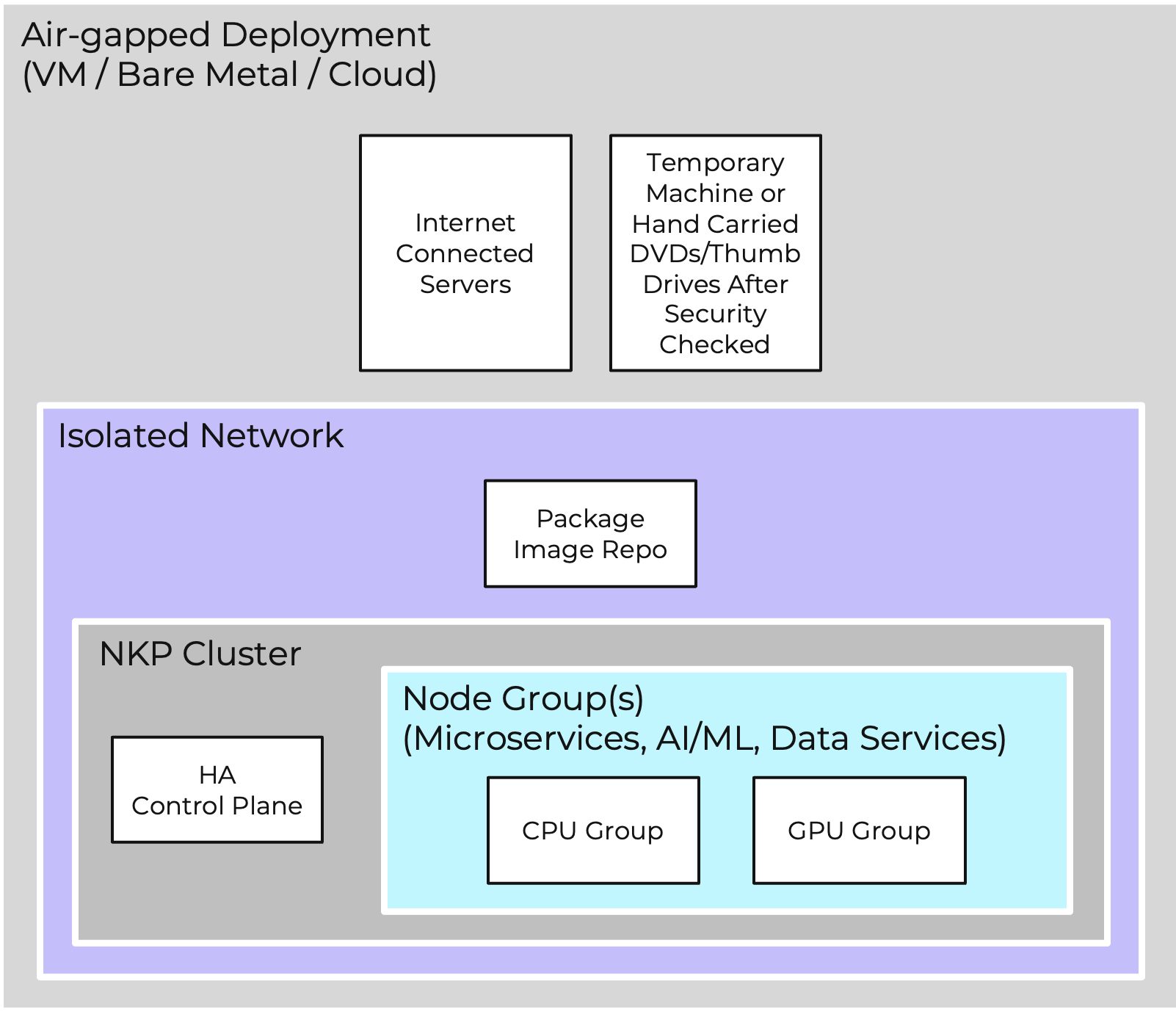

Nutanix Kubernetes Platform – Orchestrating AI Workloads

Running AI workloads requires more than infrastructure. It depends on reliable orchestration, lifecycle management, and scalability. This is where Nutanix Kubernetes Platform (NKP) plays a central role.

NKP delivers an enterprise-ready Kubernetes distribution with consistent operations across environments. Instead of spending resources on patching and troubleshooting self-assembled upstream clusters, teams can focus on building and deploying AI workloads such as retrieval-augmented generation (RAG) pipelines, vector databases, and fine-tuned models.

The combination of NCP and NKP means that organizations can operate AI in a compliant, sovereign environment, without being slowed down by the underlying complexity.

Nutanix Enterprise AI – Bringing Enterprise AI to Life

Nutanix Enterprise AI (NAI) builds on this foundation by making AI adoption tangible. It provides pre-validated, production-ready blueprints and integrations that simplify how AI infrastructure is deployed and scaled.

Instead of each organization reinventing the wheel, NAI accelerates the journey by delivering tested architectures for GPU management, data pipelines, and model deployment. Combined with NCP and NKP, it creates a stack where AI workloads can move from experiment to production without losing compliance or control.

With NAI, sovereignty comes with a trusted, repeatable path to making AI real.

Between Dependency and Autonomy

Enterprises today face two extremes. On one side lies dependency on hyperscalers, with the risk of several forms of lock-in and limited control. On the other side stands a full do-it-yourself approach, which consumes resources and rarely delivers production-ready results.

Sovereign AI requires balance. Buy the infrastructure foundation, partner on orchestration, and build only what creates real differentiation. This middle path is where NCP and NKP demonstrate their strength by enabling sovereignty without sacrificing agility.

A Future Still in the Making

The debate about AI and sovereignty has only just begun. Regulations will evolve, compliance requirements will tighten, and technology stacks will keep changing. What is clear today? Organizations that embed sovereignty into their AI strategy from the start will be better positioned for the future.

With NCP, NKP, and NAI, enterprises gain a foundation where sovereignty is designed in and adaptability is preserved. That makes these platforms enablers of sustainable AI strategies in an era where control and trust are as important as innovation itself.