A Primer On Oracle Compute Cloud@Customer

Enterprises across regulated industries, such as banking, healthcare, and the public sector, often find themselves caught in a dilemma: they want the scale and innovation of the public cloud, but they can’t move their data off-premises due to regulatory, latency, or sovereignty concerns. The answer is not one-size-fits-all, and the market reflects that through several deployment models:

- Public cloud vendors extending to on-premises (AWS Outposts, Azure Local + Azure Arc, Google Distributed Cloud Edge)

- Software vendors offering a “private cloud” (Nutanix, VMware by Broadcom)

- Hardware vendors offering “cloud-like” experiences (HPE GreenLake, Dell APEX, Lenovo TruScale)

Oracle Compute Cloud@Customer (C3) combines the strengths of all three models:

- Runs native OCI compute, storage, GPU, and PaaS services on-premises, managed through the OCI control plane

- Keeps data resident in your own data center

- Oracle manages hardware, software, updates, and lifecycle

- Integrates with Oracle Exadata and Autonomous Database

- Uses the same APIs, SDKs, CLI, and DevOps tools as OCI

Architecture

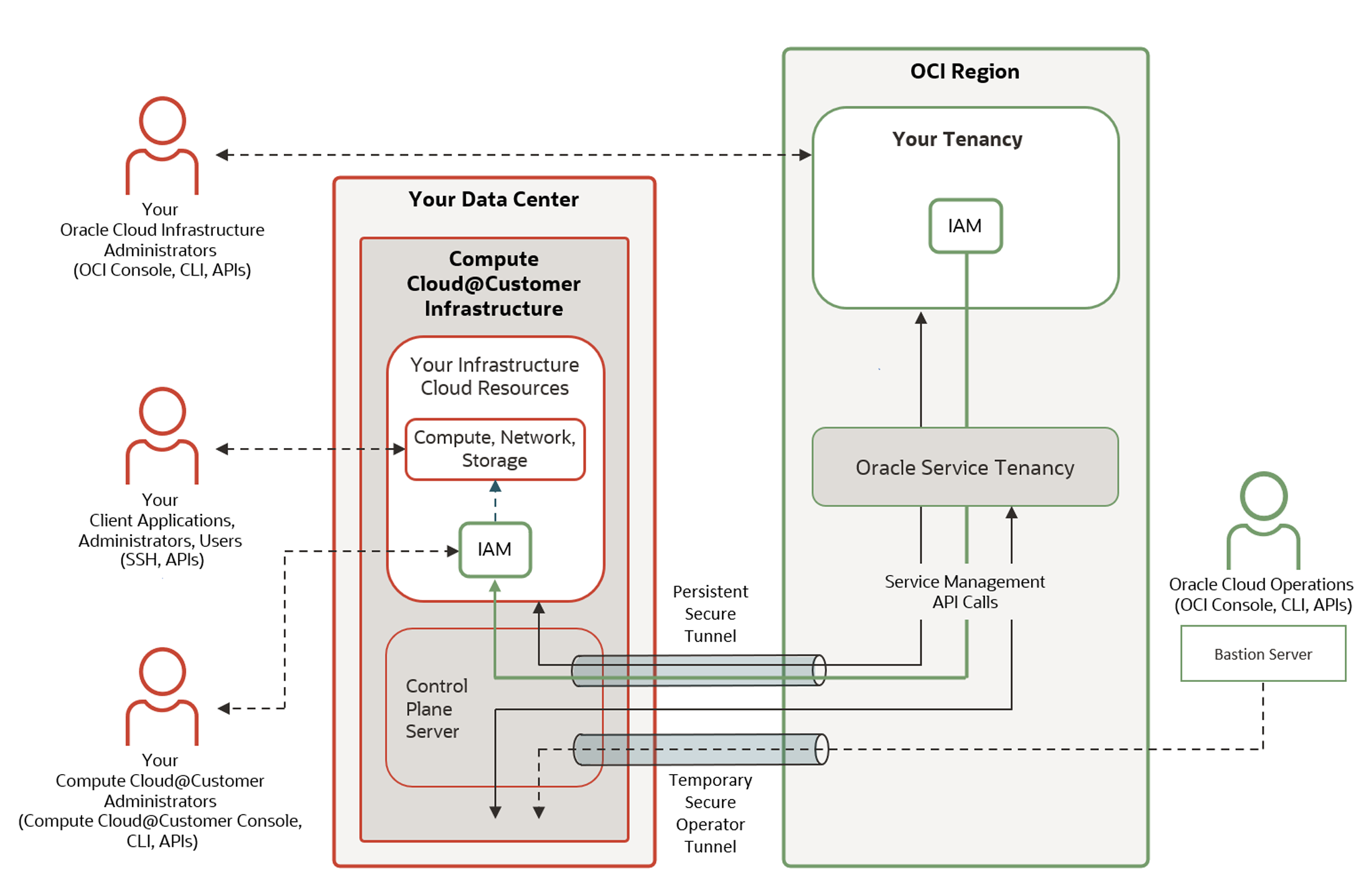

The Cloud Control Plane is an advanced software platform that operates within Oracle Cloud Infrastructure (OCI). It serves as the central management interface for deploying and operating resources, including those running on Oracle Compute Cloud@Customer. Customers access the Cloud Control Plane securely via a web browser, command-line interface (CLI), REST APIs, or language-specific SDKs, enabling flexible integration into existing IT and DevOps workflows.

At the heart of the platform is the identity and access management (IAM) system that allows multiple teams or departments to share a single OCI tenancy while maintaining strict control over access. Using compartments, organizations can logically organize and isolate resources such as Compute Cloud@Customer instances, and enforce granular access policies across the environment.
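
To make the compartment idea a bit more concrete, here is a minimal sketch that renders IAM policy statements in the general OCI form "Allow group ... to ... in compartment ...". The group names, compartment name, and the `PolicyStatement` helper are my own illustrative assumptions, not something taken from a real tenancy, and you should check which resource types apply to your C3 environment before using anything like this.

```python
from dataclasses import dataclass

# Verbs used by OCI IAM policy statements, from least to most permissive.
VERBS = ("inspect", "read", "use", "manage")


@dataclass(frozen=True)
class PolicyStatement:
    """Hypothetical helper that renders one policy statement as text."""

    group: str          # assumed IAM group name
    verb: str           # one of VERBS
    resource_type: str  # e.g. "instance-family"
    compartment: str    # assumed compartment name

    def render(self) -> str:
        if self.verb not in VERBS:
            raise ValueError(f"unknown verb: {self.verb!r}")
        return (
            f"Allow group {self.group} to {self.verb} "
            f"{self.resource_type} in compartment {self.compartment}"
        )


# Two teams share one tenancy but get different rights in the same compartment.
statements = [
    PolicyStatement("PaymentsAdmins", "manage", "instance-family", "payments-prod"),
    PolicyStatement("Auditors", "read", "instance-family", "payments-prod"),
]

for statement in statements:
    print(statement.render())
```

The point of the sketch is the shape of the rule: who (group), how much (verb), what (resource type), and where (compartment). The compartment is what keeps one team's instances isolated from another's.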

Communication between the Cloud Control Plane and the on-premises C3 system is established through a dedicated, secure tunnel. This encrypted tunnel is hosted by specialized management nodes within the rack. These nodes function as a gateway to the infrastructure, handling all control plane communications. In addition to maintaining the secure connection, they also:

- Orchestrate cloud automation within the on-premises environment

- Aggregate and route telemetry and diagnostic data to Oracle Support Services

- Host software images and updates used for patching and maintenance

Important: Even if connectivity between the Cloud Control Plane and the on-premises system is temporarily lost, virtual machines (VMs) and applications continue running uninterrupted on C3. This ensures high availability and operational continuity, even in isolated or restricted network environments.

Beyond deployment and orchestration, the Cloud Control Plane also handles essential lifecycle operations such as provisioning, patching, backup, and monitoring, and supports usage metering and billing.

Core Capabilities & Services

When you sign in to Oracle Compute Cloud@Customer, you gain access to the same types of core infrastructure resources available in the public Oracle Cloud Infrastructure (OCI). Here is what you can create and manage on C3:

- Compute Instances. You can launch virtual machines (instances) tailored to your application requirements. Choose from various instance shapes based on CPU count, memory size, and network performance. Instances can be deployed using Oracle-provided platform images or custom images you bring yourself.

- Virtual Cloud Networks (VCNs). A VCN is a software-defined, private network that replicates the structure of traditional physical networks. It includes subnets, route tables, internet/NAT gateways, and security rules. Every compute instance must reside within a VCN. On C3, you can configure the Load Balancing service (LBaaS) to automatically distribute network traffic.

- Capacity and Performance Storage. You can provision Block Volumes, File Storage, and Object Storage.
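
As a small illustration of how a VCN is carved into subnets, the following sketch uses Python's standard `ipaddress` module. The CIDR ranges and the "public"/"private" labels are assumptions chosen for the example. It only plans address space locally and does not call any OCI or C3 API.

```python
import ipaddress

# Assumed address space for the VCN (illustrative only).
vcn = ipaddress.ip_network("10.0.0.0/16")

# Split the VCN range into /24 blocks and take the first two as subnets.
blocks = list(vcn.subnets(new_prefix=24))
plan = {
    "public": blocks[0],   # e.g. instances behind a load balancer
    "private": blocks[1],  # e.g. application or database instances
}
public_subnet, private_subnet = plan["public"], plan["private"]


def subnet_for(ip: str):
    """Return the name of the planned subnet containing ip, or None."""
    address = ipaddress.ip_address(ip)
    for name, network in plan.items():
        if address in network:
            return name
    return None


for name, network in plan.items():
    print(f"{name}: {network} ({network.num_addresses} addresses)")
```

Planning the ranges up front matters because every compute instance has to land in exactly one subnet of a VCN, and overlapping ranges are painful to fix later.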

Oracle Operator Access Control

To further support enterprise-grade security and governance, Oracle Compute Cloud@Customer includes Oracle Operator Access Control (OpCtl), a system for managing and auditing privileged access to your on-premises infrastructure by Oracle personnel. Unlike traditional support models, where the boundaries of vendor access can be unclear or overly permissive, OpCtl gives customers explicit control over every support interaction.

Before any Oracle operator can access the C3 environment for maintenance, updates, or troubleshooting, the customer must approve the request, define the time window, and scope the level of access permitted. All sessions are fully audited, with logs available to the customer for compliance and security reviews. This ensures that sensitive workloads and data remain under strict governance, aligning with zero-trust principles and regulatory requirements.
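
The approval flow described above (approve, limit the time window, scope the access, audit everything) can be modeled in a few lines. This is a conceptual sketch only: `AccessApproval`, `attempt`, the action names, and the operator ID are hypothetical and do not represent the actual OpCtl API or its data model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessApproval:
    """Hypothetical record of a customer-approved operator access request."""

    operator: str
    allowed_actions: frozenset  # scope of access granted by the customer
    starts_at: datetime         # start of the approved window (inclusive)
    ends_at: datetime           # end of the approved window (exclusive)

    def permits(self, action: str, at: datetime) -> bool:
        in_window = self.starts_at <= at < self.ends_at
        return in_window and action in self.allowed_actions


audit_log = []  # every attempt is recorded, whether allowed or denied


def attempt(approval: AccessApproval, action: str, at: datetime) -> bool:
    allowed = approval.permits(action, at)
    outcome = "allowed" if allowed else "denied"
    audit_log.append((at.isoformat(), approval.operator, action, outcome))
    return allowed


start = datetime(2025, 1, 15, 9, 0, tzinfo=timezone.utc)
approval = AccessApproval(
    operator="operator-042",
    allowed_actions=frozenset({"collect-diagnostics"}),
    starts_at=start,
    ends_at=start + timedelta(hours=2),
)

in_scope = attempt(approval, "collect-diagnostics", start + timedelta(minutes=30))
out_of_scope = attempt(approval, "restart-service", start + timedelta(minutes=30))
after_window = attempt(approval, "collect-diagnostics", start + timedelta(hours=3))
```

In this model only the first attempt succeeds. The second is outside the approved scope and the third is outside the approved time window, yet all three end up in the audit log.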

Available GPU Options on Compute Cloud@Customer

As enterprises aim to run AI, machine learning, digital twins, and graphics-intensive applications on-premises, Oracle introduced GPU expansion for Compute Cloud@Customer. This enhancement brings NVIDIA L40S GPU power directly into your data center.

Each GPU expansion node in the C3 environment is equipped with four NVIDIA L40S GPUs, and up to six of these nodes can be added to a single rack. For larger deployments, a second expansion rack can be connected, enabling support for a total of 12 nodes and up to 48 GPUs within a C3 deployment.
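
The sizing arithmetic is simple enough to sketch. The constants below come from the figures above (four GPUs per node, six nodes per rack, two expansion racks). The `plan_gpu_expansion` helper is my own illustrative function, not an Oracle sizing tool.

```python
import math

GPUS_PER_NODE = 4        # NVIDIA L40S GPUs per expansion node
MAX_NODES_PER_RACK = 6   # expansion nodes per rack
MAX_EXPANSION_RACKS = 2  # racks per C3 deployment

MAX_NODES = MAX_NODES_PER_RACK * MAX_EXPANSION_RACKS  # 12 nodes
MAX_GPUS = MAX_NODES * GPUS_PER_NODE                  # 48 GPUs


def plan_gpu_expansion(gpus_required: int) -> dict:
    """Return the nodes and racks needed to provide at least gpus_required GPUs."""
    if gpus_required < 1:
        raise ValueError("gpus_required must be at least 1")
    nodes = math.ceil(gpus_required / GPUS_PER_NODE)
    if nodes > MAX_NODES:
        raise ValueError(f"a C3 deployment supports at most {MAX_GPUS} GPUs")
    racks = math.ceil(nodes / MAX_NODES_PER_RACK)
    return {
        "nodes": nodes,
        "racks": racks,
        "gpus_provisioned": nodes * GPUS_PER_NODE,
    }


print(plan_gpu_expansion(26))
```

For example, a workload that needs 26 GPUs rounds up to seven nodes (28 GPUs), which no longer fits in a single rack and therefore requires the second expansion rack.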

Oracle engineers deliver and install these GPU racks pre-configured, ensuring seamless integration with the base C3 system. These nodes connect to the existing compute and storage infrastructure over a high-speed spine-leaf network topology and are fully integrated with Oracle’s ZFS storage platform.

Platform-as-a-Service (PaaS) Offerings on C3

For organizations adopting microservices and containerized applications, OCI Kubernetes Engine (OKE) on C3 provides a fully managed Kubernetes environment. Developers can deploy and manage Kubernetes clusters using the same cloud-native tooling and APIs as in OCI, while operators benefit from lifecycle automation, integrated logging, and metrics collection. OKE on C3 is well suited to hybrid deployments where containers may span on-premises and cloud environments.

The Logical Next Step After Compute Cloud@Customer?

Typically, organizations choose to move to OCI Dedicated Region when their cloud needs outgrow what C3 currently offers. As companies expand their cloud adoption, they require a richer set of PaaS capabilities, more advanced integration and analytics tools, and cloud-native services like AI and DevOps platforms that are not fully available in C3 yet. OCI Dedicated Region is designed to meet these demands by providing a comprehensive, turnkey cloud environment that is fully managed by Oracle but physically isolated within your data center.

I consider OCI Dedicated Region the next-generation private cloud. If you are a VMware by Broadcom customer and are looking for alternatives, have a look at 5 Strategic Paths from VMware to Oracle Cloud Infrastructure.

Final Thought – Choose the Right Model for Your Journey

Every organization is on its own digital transformation journey. For some, that means moving aggressively into the public cloud. For others, it’s about modernizing existing infrastructure or complying with tight regulations. If you need cloud-native services, enterprise-grade compute, and strong data sovereignty, Oracle Compute Cloud@Customer is one of the most complete and future-proof options available.