Oracle Cloud Infrastructure – The Cloud That Actually Delivers

In 2024, I wrote an article about cloud repatriation (also known as reverse cloud migration) and the fact that businesses are adopting a more nuanced, workload-centric strategy instead of just bringing some of their workloads back to on-premises data centers. That article did not focus primarily on costs, but now seems to be the right time to take a closer look at cloud economics and why Oracle Cloud Infrastructure (OCI) is a path worth investigating.

Disclaimer: The views and opinions are my own, not my employer’s.

Why Enterprises Are Bringing Workloads Back and Why They Are Still Missing the Obvious Answer

Let us be honest: cloud repatriation is happening because customers feel let down. The promised land of cloud economics has not delivered. CFOs and CIOs are looking at their monthly cloud bills and asking the same question: “Where are the savings we were promised?”

Many jumped in expecting linear cost efficiency: you pay for what you use, scale when you need to, and save when you do not. Instead, they found themselves locked into complex pricing models, surprise data egress charges, and massive bills that just do not line up with actual value. It is no wonder so many companies are taking a second look at their on-prem strategies.

While the cloud offers a ton of advantages, not all clouds are created equal. Most enterprises default to the big two or three providers, thinking that is the safest, smartest choice. But over time, they realize their workloads – especially those that are I/O-heavy, latency-sensitive, or simply require consistent performance – are not running efficiently, and they are costing a fortune.

Is cloud economics more myth than math? The bigger question we should all be asking is: why are we ignoring better options, especially when they are cheaper and more performant?

Geopolitics – The New Cloud Strategy Trigger

Beyond cost and performance, geopolitical uncertainty is now also a major factor shaping cloud decisions. From shifting data sovereignty laws to rising tensions between global superpowers, enterprises are realizing that overreliance on a single hyperscaler can expose them to regulatory, compliance, and supply chain risks.

Guess what: the problem was always there. It is nothing new. And “the problem” is much bigger than just public clouds. But let us park this topic for a while.

So, why are we ignoring better options?

OCI was architected from the ground up with a modern, high-performance, cost-predictable mindset. And yet, for reasons that are still not 100% clear to me, it gets overlooked far too often.

Let us talk numbers and say that OCI delivers a 30–50% lower TCO compared to other hyperscalers. Let us assume that this is not fluff but the reality: Compute, storage, and networking are simply cheaper on OCI, and they come with better performance.
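To make that 30–50% range a bit more tangible, here is a minimal sketch of the arithmetic. The monthly spend, the 36-month horizon, and the function name `tco_range` are purely illustrative assumptions on my part, not real pricing or measured results.

```python
def tco_range(baseline_monthly, months=36, low_saving_pct=30, high_saving_pct=50):
    """Return (baseline, best_case, worst_case) total cost over `months`.

    All inputs are hypothetical. Savings are whole percentages of the
    baseline total, matching the assumed 30-50% lower TCO range.
    """
    baseline_total = baseline_monthly * months
    # Best case: the high end of the assumed savings range applies.
    best_case = baseline_total * (100 - high_saving_pct) / 100
    # Worst case: only the low end of the assumed savings range applies.
    worst_case = baseline_total * (100 - low_saving_pct) / 100
    return baseline_total, best_case, worst_case


# Hypothetical example: 100,000 per month on the incumbent cloud, 3-year horizon.
baseline, best, worst = tco_range(100_000)
print(f"Baseline total:    {baseline:,.0f}")
print(f"At 50% lower TCO:  {best:,.0f}")
print(f"At 30% lower TCO:  {worst:,.0f}")
```

Under these assumptions, a 3.6 million baseline lands somewhere between 1.8 and 2.52 million. The point is not the exact figures but how quickly the gap compounds over a multi-year horizon; plug in your own numbers before drawing conclusions.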

What is the Problem?

Here is what I do not get: if cost and performance are the two biggest drivers of cloud repatriation, and OCI excels at both, why are more customers not seriously considering Oracle Cloud? Does it have something to do with the KPIs decision-makers are measured on? Is it about not losing face? Is it about the sunk cost fallacy? All of the above? Is it because Oracle is not screaming as loudly as the others? Or is it just inertia and sticking with what is familiar, even if it is not working? Or is it about making the same mistake others are making?

Whatever the reason, it is time for a reset. Enterprises need to look beyond the usual suspects and start asking themselves some hard questions:

- Are we really getting value from our cloud provider?

- Are we paying a premium for subpar performance?

- Are we repatriating workloads simply because we chose the wrong cloud in the first place?

The Real Value of OCI – Look Beyond Price

Everyone loves talking about cloud cost savings, and yes, OCI delivers big on that front. But the conversation should not stop there. In fact, some of the biggest reasons customers stay with OCI long term have nothing to do with price tags and everything to do with how it actually feels to run real workloads on a platform built the right way.

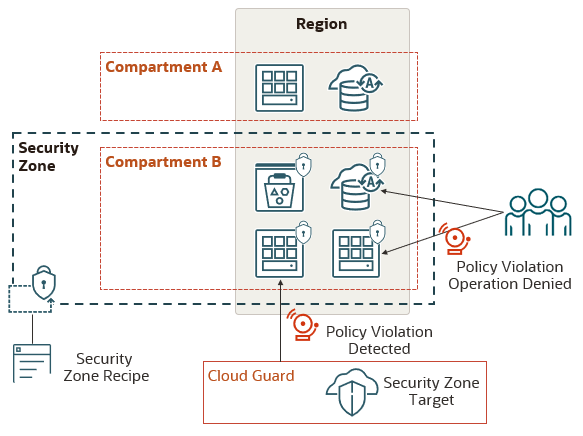

Let us talk about security. With OCI, it is not something you bolt on after the fact or pay extra for; it is built in from the ground up. Encryption is always on. Patching is automated and proactive. The way tenancy and network isolation work in OCI gives you far less surface area to worry about.

Performance is another big one. It is not just fast; it is predictably fast. You do not have to overprovision or tweak the system to get the speed you need. The network is high-bandwidth and low-latency by default. Compute and storage are designed to handle enterprise-grade workloads without extra tuning. It just works, out of the box.

But here is the part that is harder to quantify and just as important: operational peace of mind. With OCI, teams spend less time firefighting and more time building. You are not constantly chasing down unpredictable behaviour or getting buried in billing surprises (SLAs and prices are the same for ALL regions!).

And if control and flexibility are part of your strategy, then OCI goes even further. With OCI Dedicated Region, you can bring the full power of public cloud into your own data center, fully managed by Oracle, with no compromises. For partners and service providers, Oracle Alloy offers the ability to build and brand your own cloud, running on OCI’s infrastructure, while retaining control over operations and customer relationships. And when data residency, air-gapping, or national security are non-negotiable, Oracle Cloud Isolated Region delivers a physically and logically isolated cloud, fully disconnected from the public internet. No other cloud provider comes close to offering this kind of architectural flexibility at this level of maturity.

Final Thoughts

But now back to the topic. 🙂 Cloud repatriation is bigger and much more complex than most of us think. It should not just be a retreat; it should be a recalibration. And that recalibration should include a serious look at OCI. If you want predictable costs, industry-leading performance, flexibility, and real cloud economics rather than just marketing slides, then it is time to rethink what cloud success really looks like.

Because at the end of the day, the cloud should not be about what is trendy. It should be about what works and what pays off. And about who can execute your strategy.