Multi-cloud is normal in public cloud. Why is “single-cloud” still normal in private cloud?

If you ask most large organizations why they use more than one public cloud, the answers are remarkably consistent. It is not fashion, and it is rarely driven by engineering curiosity. It is risk management combined with a best-of-breed approach.

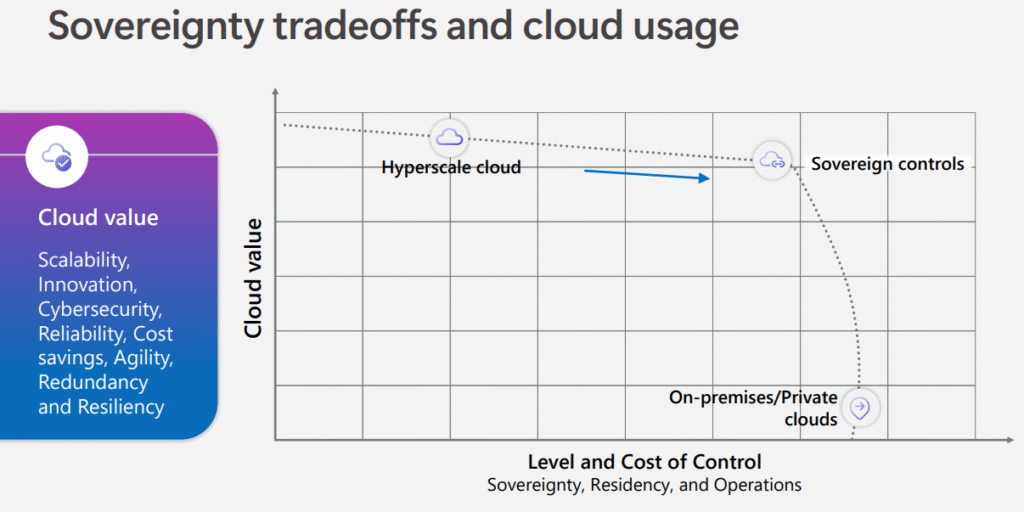

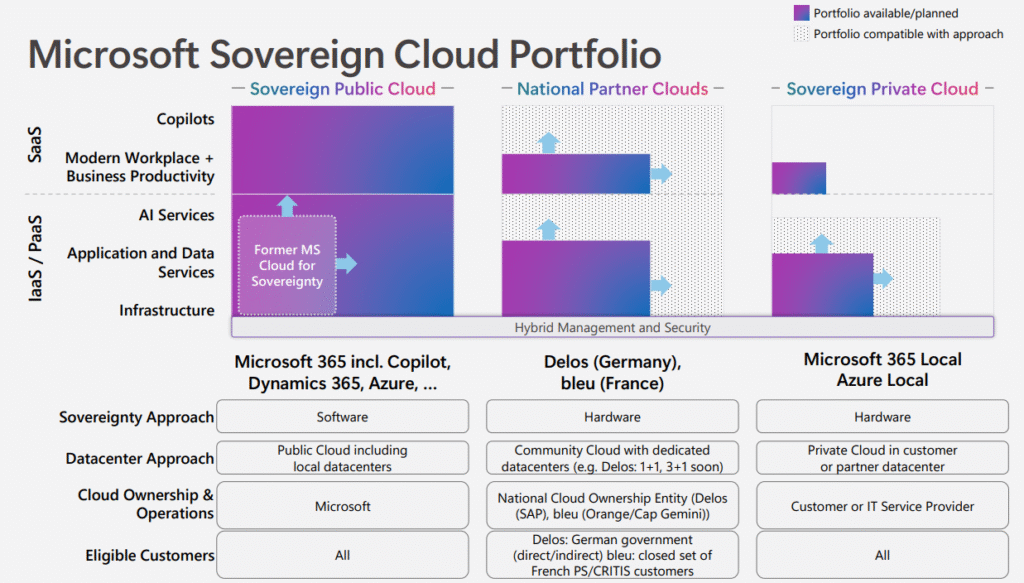

Enterprises distribute workloads across multiple public clouds to reduce concentration risk, comply with regulatory expectations, preserve negotiation leverage, and remain operationally resilient in the face of outages that cannot be mitigated by adding another availability zone. In regulated industries, especially in Europe, this thinking has become mainstream. Supervisors explicitly expect organizations to understand their outsourcing dependencies, to manage exit scenarios, and to avoid structural lock-in where it can reasonably be avoided.

Now apply the same logic one layer down into the private cloud world, and the picture changes dramatically.

Across industries and geographies, a significant majority of private cloud workloads still run on a single private cloud platform. In practice, this platform is often VMware (by Broadcom). Estimates vary, but the dominance itself is not controversial. In many enterprises, approximately 70 to 80 percent of virtualized workloads reside on the same platform, regardless of sector.

If the same concentration existed in the public cloud, the discussion would be very different. Boards would ask questions, regulators would intervene, and architects would be tasked with designing alternatives. Yet in private cloud infrastructure, this concentration is often treated as normal, even invisible.

Why?

Organizations deliberately choose multiple public clouds

Public cloud multi-cloud strategies are often oversimplified as “fear of lock-in”, but that misses the point.

The primary driver is concentration risk. When critical workloads depend on a single provider, certain failure modes become existential. Provider-wide control plane outages, identity failures, geopolitical constraints, or contractual disputes cannot be mitigated by technical architecture alone. Multi-cloud does not eliminate risk, but it limits the blast radius.

Regulation reinforces this logic. European banking supervisors, for example, treat cloud as an outsourcing risk and expect institutions to demonstrate governance, exit readiness, and operational resilience. An exit strategy that exists only on paper is increasingly viewed as insufficient.

There are also pragmatic reasons. Jurisdictional considerations, data protection regimes, and shifting geopolitical realities make organizations reluctant to anchor everything to a single legal and operational framework. Multi-cloud (or hybrid cloud) becomes a way to keep strategic options open.

And finally, there is negotiation power. A credible alternative changes vendor dynamics. Even if workloads never move, the ability to move matters.

This mindset is widely accepted in the public cloud. It is almost uncontroversial.

How the private cloud monoculture emerged

The dominance of a single private cloud platform did not happen by accident, and it did not happen because enterprises were careless.

VMware earned its position over two decades by solving real problems early and building an ecosystem that reinforced itself. Skills became widely available, tooling matured, and operational processes stabilized. Backup, disaster recovery, monitoring, security controls, and audit practices all became aligned around a common platform. Over time, the private cloud platform evolved into more than just software. It became the operating model.

And once that happens, switching is no longer a technical project. It becomes an organizational transformation.

Private cloud decisions are also structurally centralized. Unlike public cloud consumption, which is often decentralized across business units, private cloud infrastructure is intentionally standardized. One platform, one set of guardrails, one way of operating. From an efficiency and governance perspective, this makes sense. From a dependency perspective, it creates a monoculture.

For years, this trade-off was acceptable because the environment was stable, licensing was predictable, and the ecosystem was broad. The rules of the game did not change dramatically.

That assumption is now being tested.

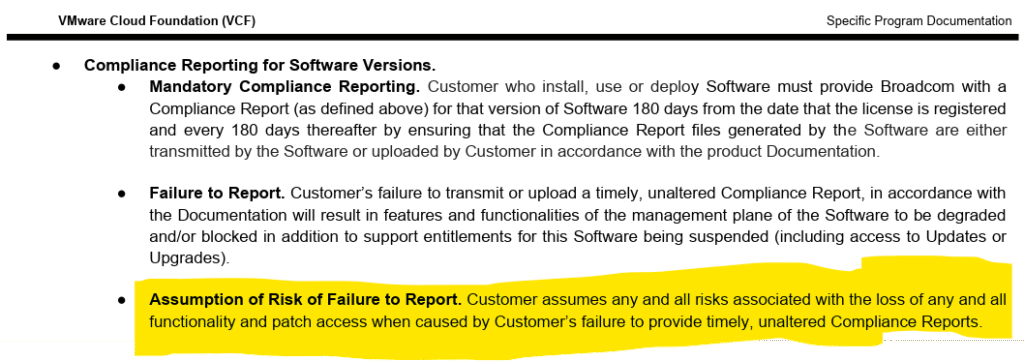

What has changed is not the technology, but the dependency profile

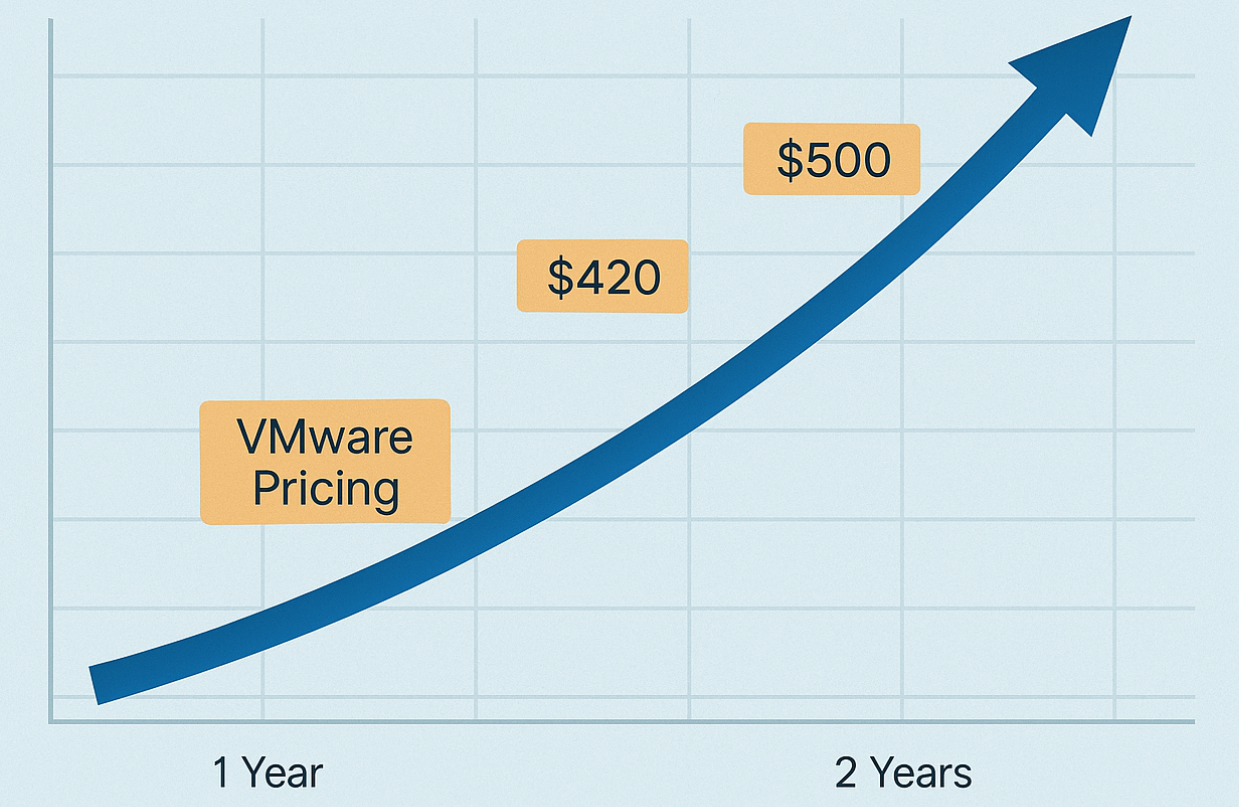

VMware remains a technically strong private cloud platform. That is not in dispute. What has changed under Broadcom is the commercial and ecosystem context in which the platform operates. Infrastructure licensing has shifted from a largely predictable, incremental expense to a strategically sensitive commitment. Renewals are no longer routine events. They have become moments of vendor leverage.

At the same time, changes in partner models and go-to-market structures affect how organizations buy, renew, and support their private cloud infrastructure. When the surrounding ecosystem narrows, dependency increases, even if the software itself remains excellent.

This is not a judgment on intent or quality. It is a structural observation. When one private cloud platform represents the majority of an organization’s infrastructure, any material change in pricing, licensing, or ecosystem access becomes a strategic risk by definition.

The real issue is not lock-in, but the absence of a credible exit

Most decision-makers do not care about hypervisors; they care about exposure. The critical question is not whether an organization plans to leave its existing private cloud platform. The question is whether it could leave, within a timeframe the business could tolerate, if it had to.

In many cases, the honest answer is no.

Economic dependency is the first dimension. When a single vendor defines the majority of your infrastructure cost base, budget flexibility shrinks.

Operational dependency is the second. If tooling, processes, security models, and skills are deeply coupled to one platform, migration timelines stretch into years. That alone is a risk, even if no migration is planned.

Ecosystem dependency is the third. Fewer partners and fewer commercial options reduce competitive pressure and resilience.

Strategic dependency is the fourth. The private cloud platform is increasingly becoming the default landing zone for everything that cannot go to the public cloud. At that point, it is no longer just infrastructure. It is critical organizational infrastructure.

In the public cloud context, regulators have a name for this: outsourcing concentration risk. Private cloud infrastructure rarely receives the same attention, even though the consequences can be comparable.

Concentration risk in the public sector – When dependency is financed by taxpayers

In the public sector, concentration risk is not only a technical or commercial question but also a governance question. Public administrations do not invest their own capital. Infrastructure decisions are financed by taxpayers, justified through public procurement, and expected to remain defensible over long time horizons. This fundamentally changes the risk calculus.

When a public institution concentrates the majority of its private cloud infrastructure on a single platform, it is committing public funds, procurement structures, skills development, and long-term dependency to one vendor’s strategic direction. What does it mean for a nation when 80 or 90 percent of its public sector depends on a single vendor?

That dependency can outlast political cycles, leadership changes, and even the original architectural assumptions. If costs rise, terms change, or exit options narrow, the consequences are borne by the public. This is why procurement law and public sector governance emphasize competition, supplier diversity, and long-term sustainability. In theory, these principles apply equally to private cloud platforms. In practice, historical standardization decisions often override them.

There is also a practical constraint. Public institutions cannot move quickly. Budget cycles, tender requirements, and legal processes mean that correcting structural dependency is slow and expensive once it is entrenched.

Seen through this lens, private cloud concentration risk in the public sector is not a hypothetical problem. It is a deferred liability.

Why organizations hesitate to introduce a new or second private cloud platform

If concentration risk is real, why do organizations not simply add a second platform?

Because fragmentation is also a risk.

Enterprises do not want five private cloud platforms. They do not want duplicated tooling, fragmented operations, or diluted skills. Running parallel infrastructures without a coherent operating model creates unnecessary cost and complexity, without addressing the underlying problem. This is why most organizations are not looking for “another hypervisor”. They are seeking a second private cloud platform that preserves the VM-centric operating model, integrates lifecycle management, and can coexist without necessitating a redesign of governance and processes.

The main objective here is credible optionality.

A market correction – Diversity returns to private cloud infrastructure

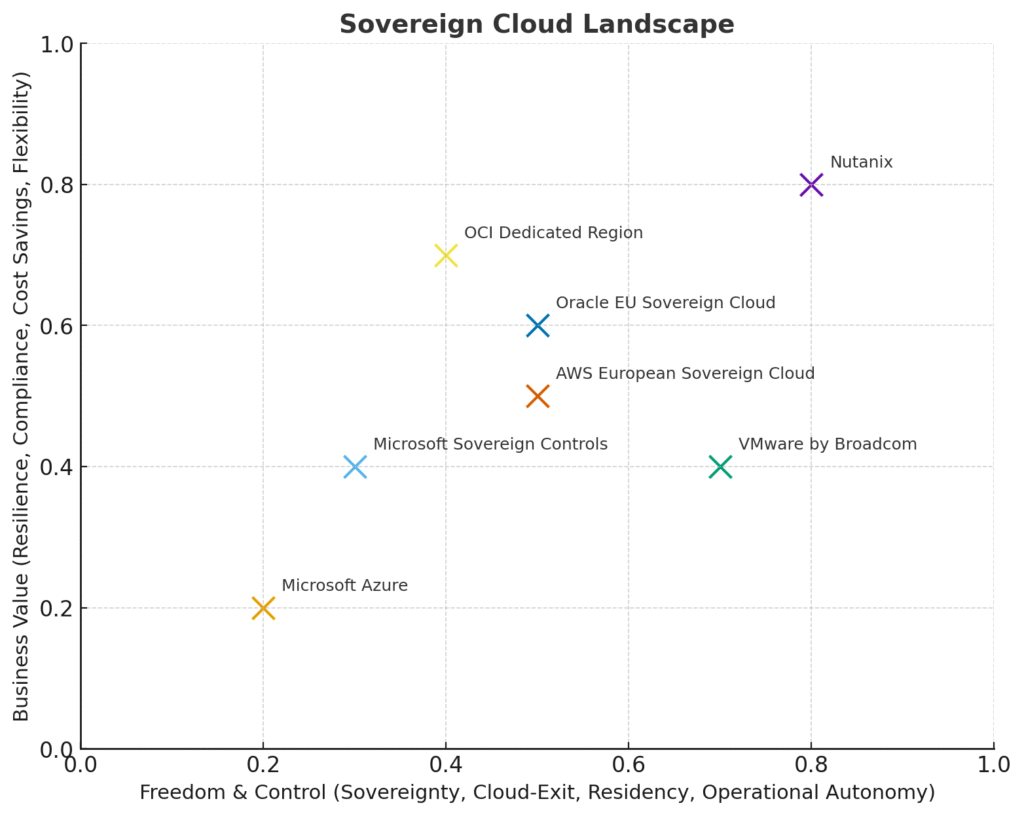

One unintended consequence of Broadcom’s acquisition of VMware is that it has reopened a market that had been largely closed for years. For a long time, the conversation about private cloud infrastructure felt settled. VMware was the default, alternatives were niche, and serious evaluation was rare. That has changed.

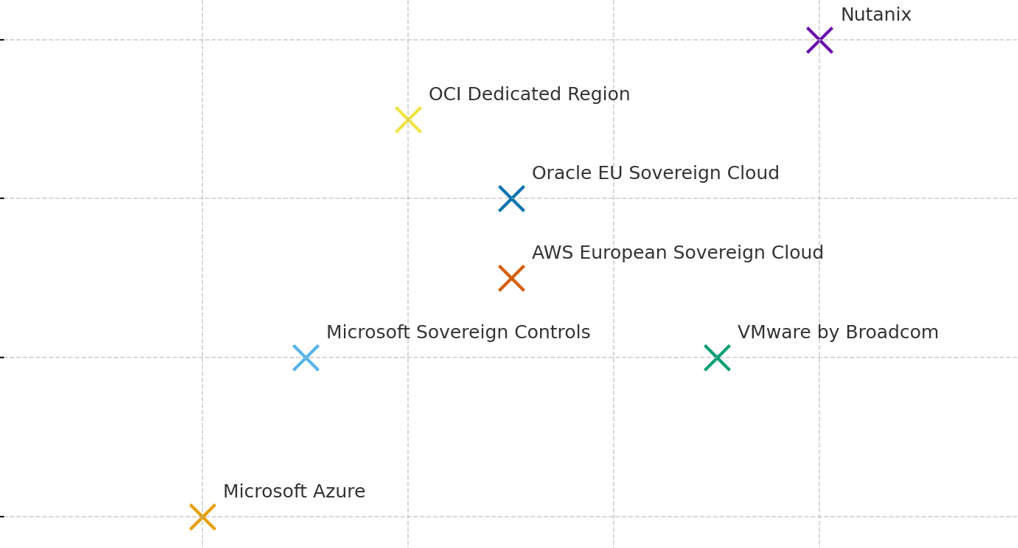

Technologies that existed on the margins are being reconsidered. Xen-based platforms are being evaluated again where simplicity and cost control dominate. Proxmox is discussed more seriously in environments that value open-source governance and transparency. Microsoft Hyper-V is being re-examined where deep Microsoft integration already exists.

At the same time, vendors are responding. HPE Morpheus VM Essentials reflects a broader trend toward abstraction and lifecycle management that reduces direct dependency on a single virtualization layer.

Nutanix appears in this context not as a disruptive newcomer, but as an established private cloud platform that fits a diversification narrative. For some organizations, it represents a way to introduce a second platform without abandoning existing operations or retraining entire teams from scratch.

None of these options is a universal replacement. That is not the point. The point is that choice has returned.

This diversity is healthy. It forces vendors to compete on clarity, pricing, ecosystem openness, and operational value. It forces customers to revisit assumptions that have gone unchallenged for years, and it reintroduces architectural optionality into a layer of infrastructure that had become remarkably static.

Why this conversation matters now

For years, private cloud concentration risk was theoretical. Today, it is increasingly tangible.

The combination of high platform concentration, shifting commercial models, and narrowing ecosystems forces organizations to re-examine decisions they have not questioned in over a decade. Not because the technology suddenly failed, but because dependency became visible.

The irony is that enterprises already know how to reason about this problem. They apply the same logic every day in public cloud.

The difference is psychological. Private cloud infrastructure feels “owned”. It runs on-premises, and it feels sovereign. That feeling can be partly justified, but it can also obscure how much strategic control has quietly shifted elsewhere.

A measured conclusion

This is not a call for mass migration away from VMware. That would be reactive and, in many cases, irresponsible.

It is a call to apply the same discipline to private cloud platforms that organizations already apply to public cloud providers. Concentration risk does not disappear because infrastructure runs in a data center.

So, if the terms change, do you have a credible alternative?