Why One Customer Chose PostgreSQL on Oracle Cloud Infrastructure and Still Saved on Oracle Costs

A few days ago, I had a really insightful conversation with a colleague who shared a customer story that perfectly captured something I am seeing more and more across industries: organizations looking to modernize, reduce complexity, and take control of their database strategy without being locked into one model or one engine.

The story revolved around a customer who decided to move away from their on-premises Oracle databases. At first, it might sound like the classic “cloud migration” narrative everyone has heard before, but this one is different. They weren’t switching cloud providers, and they weren’t turning their back on Oracle either. In fact, they stayed with Oracle, just in a different way.

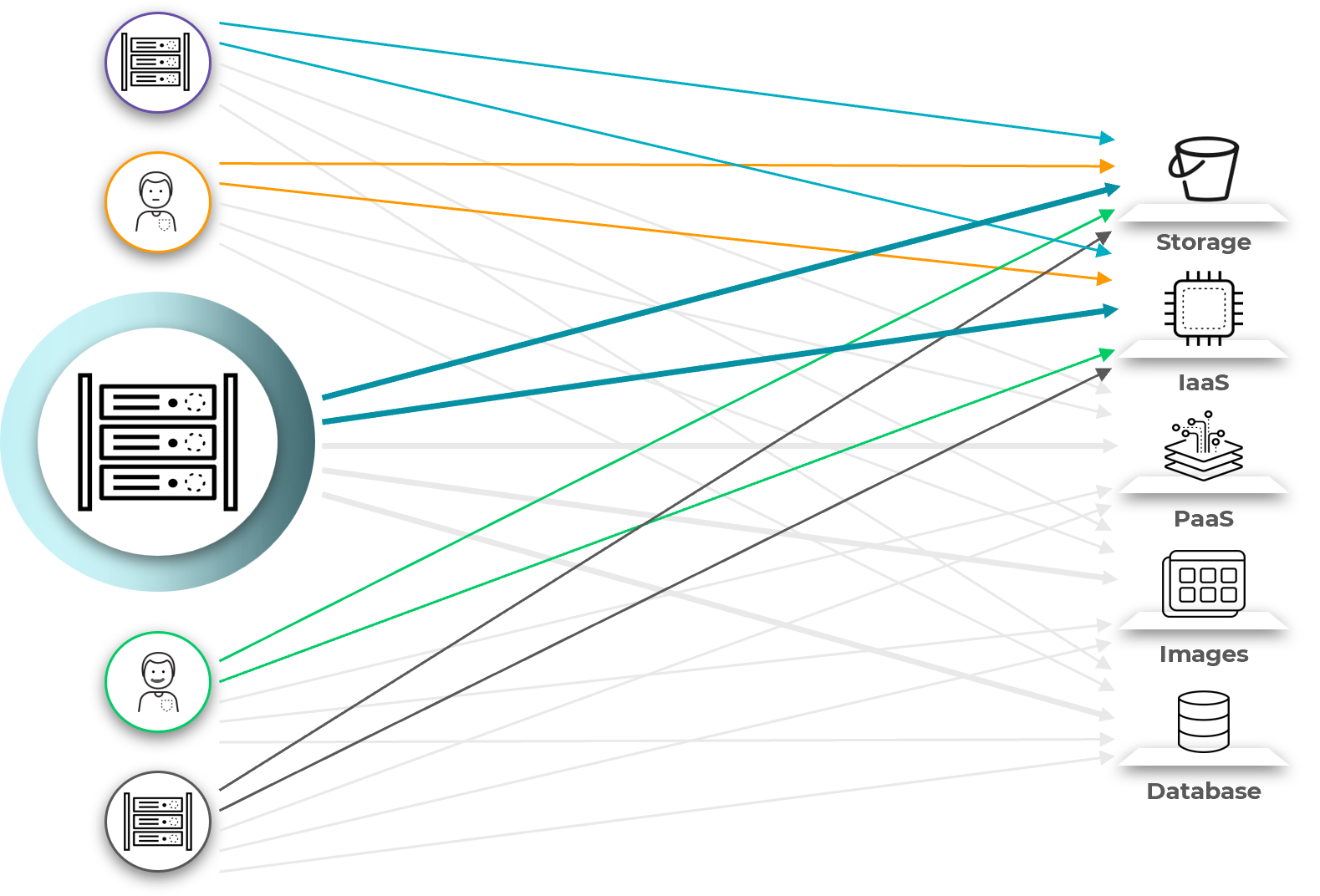

They migrated a significant portion of their Oracle workloads to PostgreSQL, but they ran that PostgreSQL on Oracle Cloud Infrastructure (OCI). Even more interesting: through Oracle Support Rewards, their OCI consumption earned rewards that they could apply to the support contracts they were still paying for their on-premises Oracle products.

It’s one of those use cases that highlights how important flexibility has become in modern enterprise IT. Customers want to modernize, but they also want the freedom to choose the right tools for each workload. With OCI continuing to evolve and expand, Oracle has positioned itself to support both traditional enterprise use cases and modern, open-source-first strategies.

This particular customer story is a strong example of that. The customer moved from on-premises Oracle Database to PostgreSQL, but did so in a way that continues to benefit from Oracle’s infrastructure, incentives, and long-term roadmap.

A Familiar Starting Point

The customer, a mid-sized company in the DACH region, was running several Oracle database instances on physical hardware in their own data center. The environment was stable, but due to ongoing sovereignty discussions in the market and in order to meet their CIO’s strategic directive to adopt more open-source technologies, the customer decided to move a subset of their Oracle databases to PostgreSQL.

This wasn’t about a full migration or a complete cloud exit. The approach was pragmatic. Only the databases that were suitable for PostgreSQL and could be decoupled from on-prem dependencies were selected for migration. The remaining Oracle workloads stayed on-premises, either because they still depended on on-prem systems or because they weren’t ideal candidates to move at this time. For those workloads, the customer is also evaluating Oracle Exadata Cloud@Customer as a way to maintain a consistent cloud operating model while keeping them close to home.

What stood out was that the team didn’t move these PostgreSQL workloads to another cloud provider. They chose to run them on OCI, which allowed them to benefit from modern open-source technologies while remaining inside Oracle’s ecosystem.

PostgreSQL on OCI

PostgreSQL continues to grow in popularity among developers and architects, especially in cloud-native environments. Oracle recognized this demand and responded with OCI Database with PostgreSQL, a fully managed, enterprise-grade PostgreSQL service designed to meet the needs of both modern application development and mission-critical workloads.

In this case, the customer saw OCI Database with PostgreSQL as a natural extension of their existing cloud investments. They were already using OCI for other workloads and appreciated the operational consistency across services. Running PostgreSQL within OCI meant they avoided the complexity and overhead of introducing another cloud provider, and it kept governance, billing, and support centralized across both their Oracle and PostgreSQL workloads.

The service itself offers built-in high availability, automated backups, patching, monitoring, and tight integration with OCI’s broader security and identity services. These features gave the customer the reliability they needed without the overhead of managing infrastructure themselves.

Oracle Support Rewards – A Hidden Advantage

One of the most compelling parts of this story wasn’t just technical. It was financial.

Despite moving certain workloads away from Oracle Database, the customer continued to benefit from Oracle’s ecosystem through the Oracle Support Rewards (OSR) program. This initiative allows organizations with existing Oracle on-premises support contracts to earn rewards when consuming OCI services.

Those rewards can then be applied to pay the support contracts a customer holds for other eligible on-premises Oracle products.

For example, a customer might use OCI and also hold an on-premises Oracle Database Enterprise Edition license whose support is invoiced monthly. The rewards earned from their OCI usage can be applied to that Oracle Database support invoice. These invoices are paid in a separate Billing Center system outside of OCI. As a result, Oracle Support Rewards can help lower the customer’s support bill.

The accrual rate is simple: for every dollar spent on OCI, a customer accrues $0.25 in Oracle Support Rewards. Customers with an unlimited license agreement (ULA) accrue $0.33 for every dollar spent.
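To make the arithmetic concrete, here is a minimal Python sketch of how the two rates translate into rewards and how a reward balance could offset a support invoice. This is an illustration, not Oracle's billing logic: the function names and dollar amounts are hypothetical, and the sketch assumes the whole invoice is eligible while ignoring any other program terms (such as eligibility rules or reward expiry).

```python
from decimal import Decimal

# Accrual rates as stated in the text: $0.25 per OCI dollar, $0.33 for ULA customers.
STANDARD_RATE = Decimal("0.25")
ULA_RATE = Decimal("0.33")


def accrued_rewards(oci_spend: Decimal, ula_customer: bool = False) -> Decimal:
    """Hypothetical helper: rewards accrued for a given amount of OCI spend."""
    if oci_spend < 0:
        raise ValueError("OCI spend cannot be negative")
    rate = ULA_RATE if ula_customer else STANDARD_RATE
    # Round to whole cents, as currency amounts are usually shown.
    return (oci_spend * rate).quantize(Decimal("0.01"))


def apply_to_support_invoice(
    reward_balance: Decimal, invoice: Decimal
) -> tuple[Decimal, Decimal]:
    """Hypothetical helper: apply a reward balance to an on-premises support invoice.

    Simplification: assumes the full invoice is eligible for rewards.
    Returns (amount still due on the invoice, remaining reward balance).
    """
    applied = min(reward_balance, invoice)
    return invoice - applied, reward_balance - applied


if __name__ == "__main__":
    # Illustrative numbers only: $10,000 of monthly OCI spend, $4,000 support invoice.
    spend = Decimal("10000")
    standard = accrued_rewards(spend)
    ula = accrued_rewards(spend, ula_customer=True)
    due, remaining = apply_to_support_invoice(standard, Decimal("4000"))
    print("standard rewards:", standard)
    print("ULA rewards:", ula)
    print("still due:", due, "remaining rewards:", remaining)
```

With these illustrative numbers, $10,000 of OCI spend accrues $2,500 in rewards at the standard rate ($3,300 for a ULA customer), and applying $2,500 to a $4,000 support invoice leaves $1,500 to pay. Using `Decimal` rather than floats avoids rounding surprises when working with currency.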

That’s right: even though the workloads had moved to open-source PostgreSQL, the customer still used Oracle’s infrastructure and Oracle rewarded them for it.

The Bigger Picture

This migration wasn’t about replacing Oracle. It was about evolving the database landscape while staying on a trusted platform.

For the PostgreSQL use cases, the customer chose to modernize and go cloud-native. But for mission-critical Oracle workloads that require high performance and data residency, they are actively considering Oracle Exadata Cloud@Customer. This would allow them to extend OCI’s cloud operating model into their own data center, delivering the same automation, APIs, and support model as OCI without compromising on location or control.

This hybrid approach ensures operational consistency across environments while supporting both open-source and enterprise-grade workloads.

OCI provided the customer with the flexibility to adopt open-source technologies, modernize legacy systems, and still benefit from Oracle’s performance, security, and enterprise infrastructure. And with OSR, Oracle helped reduce the cost of that transformation even when it involved PostgreSQL.

Final Thoughts

This use case was a great reminder of how cloud strategy isn’t always black and white. Sometimes the right answer isn’t “migrate everything to X”. It’s about giving customers choices while offering incentives that make those choices easier.

In this case, the customer successfully modernized part of their database environment, adopted open-source technology, and avoided a complex multi-cloud scenario, all while staying within Oracle’s ecosystem and leveraging its financial and operational benefits.

For any organization thinking about PostgreSQL, data sovereignty, or maintaining architectural flexibility with tools like Exadata Cloud@Customer, this is a story worth looking at more closely.