Can a Unified Multi-Cloud Inventory Transform Cloud Management?

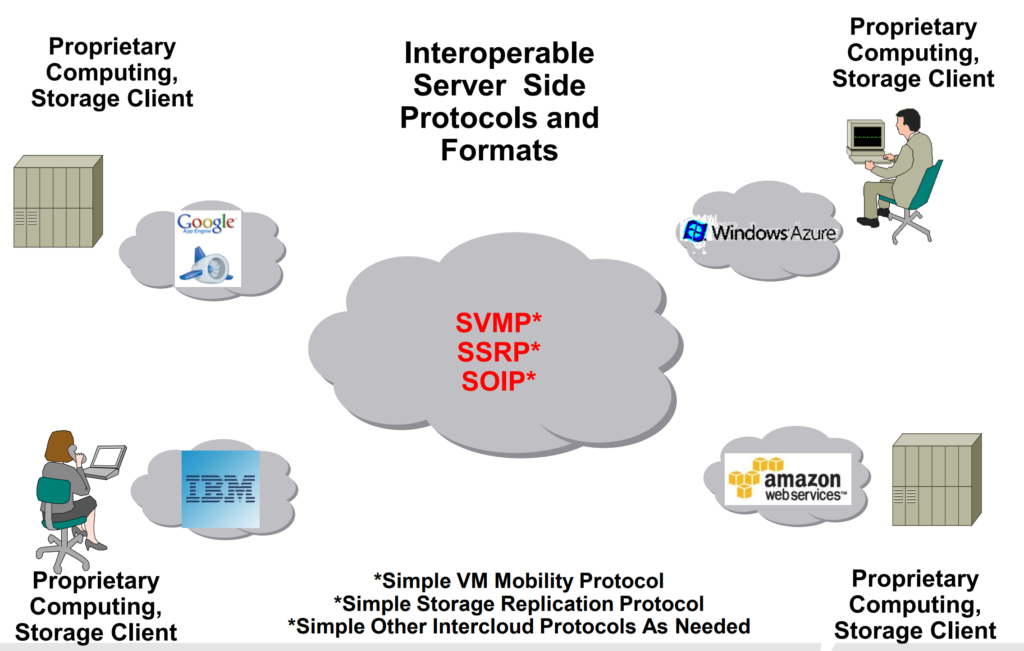

When we spread our workloads across clouds such as Oracle Cloud, AWS, Azure, Google Cloud, and maybe even IBM or smaller niche players, we knowingly accept complexity. Each cloud speaks its own language, offers its own services, and maintains its own console. What if there were a central place where we could see everything: every resource, every relationship, across every cloud? A place that lets us truly understand how our distributed architecture lives and breathes?

I find myself wondering if we could one day explore a tool or approach that functions as a multi-cloud inventory, keeping track of every VM, container, database, and permission – regardless of the platform. Not because it’s a must-have today, but because the idea sparks curiosity: what would it mean for cloud governance, cost transparency, and risk reduction if we had this true single pane of glass?

Who feels triggered now because I said “single pane of glass”? 😀 Let’s move on!

Could a Multi-Cloud Command Center Change How We Visualize Our Environment?

Let’s imagine it: a clean interface, showing not just lists of resources, but the relationships between them. Network flows across cloud boundaries. Shared secrets between apps on “cloud A” and databases on “cloud B”. Authentication tokens moving between clouds.

What excites me here isn’t the dashboard itself, but the possibility of visualizing the hidden links across clouds. Instead of troubleshooting blindly or juggling a dozen consoles, we could zoom out for a bird’s-eye view and see in one place how data and services crisscross providers.

I don’t know if we’ll get there anytime soon (or if such a solution already exists), but exploring the idea of a unified multi-cloud visualization tool feels like an adventure worth considering.

Multi-Cloud Search and Insights

When something breaks, when we are chasing a misconfiguration, or when we want to understand where we might be exposed, it often starts with a question: Where is this resource? Where is that permission open?

What if we could type that question once and get instant answers across clouds? A global search bar that could return every unencrypted public bucket or every server with a certain tag, no matter which provider it’s on.

Wouldn’t it be interesting if that search also showed contextual information: connected resources, compliance violations, or cost impact? It’s a thought I keep returning to because the journey toward proactive multi-cloud operations might start with simple, unified answers.
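To make the idea a little more concrete, here is a minimal sketch of what such a search could look like once resources from every provider sit in one common shape. Everything in it is an assumption for illustration: the `Resource` fields, the `search` and `with_tag` helpers, and the sample inventory are all made up, and a real tool would fill the inventory from each provider's APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """Hypothetical provider-neutral record for one cloud resource."""
    provider: str
    kind: str
    name: str
    tags: dict = field(default_factory=dict)
    public: bool = False
    encrypted: bool = True


def search(inventory, **criteria):
    """Return resources whose attributes equal every given criterion."""
    return [
        r for r in inventory
        if all(getattr(r, key) == value for key, value in criteria.items())
    ]


def with_tag(inventory, key, value):
    """Return resources carrying the tag key=value, on any provider."""
    return [r for r in inventory if r.tags.get(key) == value]


# Invented sample data standing in for a collected inventory.
INVENTORY = [
    Resource("aws", "bucket", "logs-archive", public=True, encrypted=False),
    Resource("azure", "bucket", "media-assets", public=True, encrypted=True),
    Resource("gcp", "bucket", "ml-exports", public=True, encrypted=False),
    Resource("oci", "vm", "batch-01", tags={"env": "prod"}),
    Resource("aws", "vm", "web-01", tags={"env": "prod"}),
]

# "Every unencrypted public bucket", asked once for all providers.
exposed = search(INVENTORY, kind="bucket", public=True, encrypted=False)
print([(r.provider, r.name) for r in exposed])

# "Every server with a certain tag", no matter where it runs.
prod_vms = [r for r in with_tag(INVENTORY, "env", "prod") if r.kind == "vm"]
print([(r.provider, r.name) for r in prod_vms])
```

The query itself is trivial. The hard part, which this sketch skips entirely, is getting every provider's data into that one shape and keeping it current.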

Could a True Multi-Cloud App Require This Kind of Unified Lens?

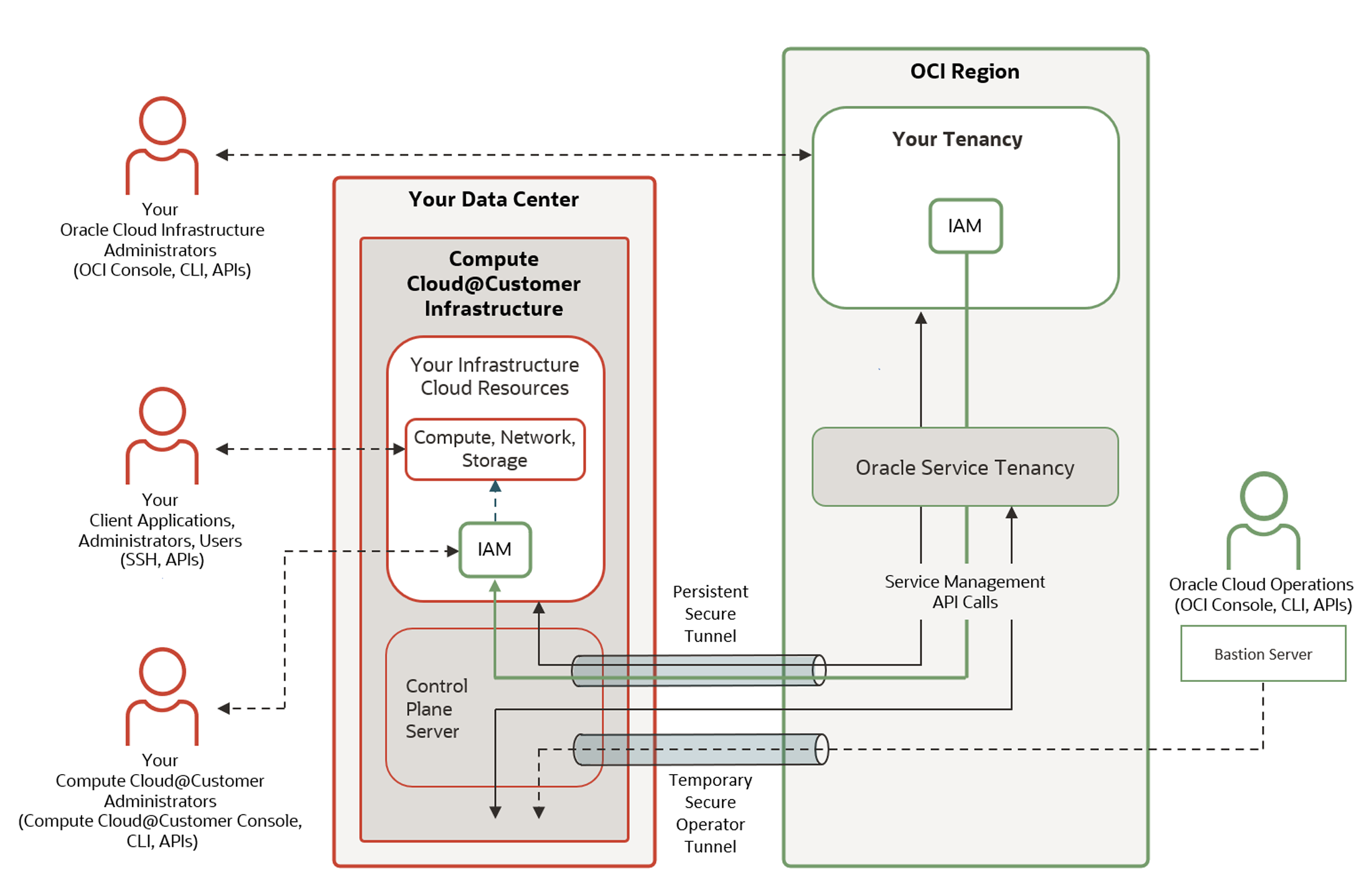

Some teams are already building apps that stretch across clouds: an API front-end in one provider, authentication in another, ML workloads on specialized platforms, and data lakes somewhere else entirely. These aren’t cloud-agnostic apps; they are “cloud-diverse” apps, purpose-built to exploit best-of-breed services from different providers.

That makes me wonder: if an app inherently depends on multiple clouds, doesn’t it deserve a control plane that’s just as distributed? Something that understands the unique role each cloud plays, and how they interact, in one coherent operational picture?

I don’t have a clear answer, but I can’t help thinking about how multi-cloud-native apps might need true multi-cloud-native management.

VMware Aria Hub and Graph – Was It a Glimpse of the Future?

Not so long ago, VMware introduced Aria Hub and Aria Graph with an ambitious promise: a single place to collect and normalize resource data from all major clouds, connect it into a unified graph, and give operators a true multi-cloud inventory and control plane. It was one of the first serious attempts to address the challenge of understanding relationships between cloud resources spread across different providers.

The idea resonated with anyone who has struggled to map sprawling cloud estates or enforce consistent governance policies in a multi-cloud world. A central graph of every resource, dependency, and configuration sounded like a game-changer, not only for visualization but also for powerful queries, security insights, and cost management.

But when Broadcom acquired VMware, they shifted focus away from VMware’s SaaS portfolio. Many SaaS-based offerings were sunset or sidelined, including Aria Hub and Aria Graph, effectively burying the vision of a unified multi-cloud inventory platform along with them.

I still wonder: did VMware Aria Hub and Graph show us a glimpse of what multi-cloud operations could look like if we dared to standardize resource relationships across clouds? Or did it simply arrive before its time, in an industry not yet ready to embrace such a radical approach?

Either way, it makes me even more curious about whether we might one day revisit this idea and how much value a unified resource graph could unlock in a world where multi-cloud complexity continues to grow.

Final Thoughts

I don’t think there’s a definitive answer yet to whether we need a unified multi-cloud inventory or command center today. Some organizations already have mature processes and tooling that work well enough, even if they are built on scripts, spreadsheets, or point solutions glued together. But as multi-cloud strategies evolve, and as more teams start building apps that intentionally spread across multiple providers, I find myself increasingly curious about whether we will see renewed demand for a shared data model of our entire cloud footprint.

Because with each new cloud we adopt, complexity grows much faster than linearly: every new provider adds not just its own resources, but potential connections to every cloud we already use. Our assets scatter, our identities and permissions multiply, and our ability to keep track of everything from memory or through siloed dashboards fades. Even something simple, like understanding “what resources talk to this database?”, becomes a detective story across clouds.
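If those relationships lived in one graph, the database question would stop being detective work and become a lookup. The sketch below is only a thought experiment: the edge list, the `provider:name` identifiers, and the helper names `callers_of` and `upstream_of` are invented, and collecting real edges across clouds is the genuinely hard part.

```python
from collections import defaultdict, deque

# Hypothetical (source, target) pairs meaning "source connects to target".
EDGES = [
    ("aws:api-gateway", "azure:auth-service"),
    ("aws:api-gateway", "oci:orders-db"),
    ("gcp:ml-pipeline", "oci:orders-db"),
    ("azure:auth-service", "azure:user-store"),
]


def build_incoming(edges):
    """Index the edges by target so reverse lookups are cheap."""
    incoming = defaultdict(set)
    for source, target in edges:
        incoming[target].add(source)
    return incoming


def callers_of(edges, target):
    """Resources that talk to `target` directly."""
    return sorted(build_incoming(edges).get(target, set()))


def upstream_of(edges, target):
    """Everything that reaches `target` directly or indirectly (BFS)."""
    incoming = build_incoming(edges)
    seen, queue = set(), deque([target])
    while queue:
        node = queue.popleft()
        for source in incoming.get(node, set()):
            if source not in seen:
                seen.add(source)
                queue.append(source)
    return sorted(seen)


print(callers_of(EDGES, "oci:orders-db"))
print(upstream_of(EDGES, "azure:user-store"))
```

In this toy data, the orders database on one cloud is reached from two other clouds, which is exactly the kind of link that no single provider console would show.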

A solution that offers unified visibility, context, and even policy controls feels almost inevitable if multi-cloud architectures continue to accelerate. And yet, I’m also aware of how hard this problem is to solve. Each cloud provider evolves quickly, their APIs change, and mapping their semantics into a single, consistent model is an enormous challenge.
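A small sketch hints at why that mapping is so hard. One adapter per provider translates its records into a shared shape, and every adapter has to follow that provider as it changes. The raw records below are simplified stand-ins that I made up for illustration, not exact API responses, and the function names are hypothetical.

```python
def from_aws(raw):
    # Assumed shape: tags arrive as a list of Key/Value pairs.
    tags = {t["Key"]: t["Value"] for t in raw.get("Tags", [])}
    return {"provider": "aws", "id": raw["InstanceId"], "tags": tags}


def from_azure(raw):
    # Assumed shape: tags arrive as a plain mapping.
    return {"provider": "azure", "id": raw["id"], "tags": dict(raw.get("tags") or {})}


def from_gcp(raw):
    # Assumed shape: the same concept is called "labels".
    return {"provider": "gcp", "id": raw["name"], "tags": dict(raw.get("labels") or {})}


NORMALIZERS = {"aws": from_aws, "azure": from_azure, "gcp": from_gcp}


def normalize(provider, raw):
    """Translate one provider-specific record into the shared shape."""
    try:
        adapter = NORMALIZERS[provider]
    except KeyError:
        raise ValueError(f"no adapter for provider {provider!r}") from None
    return adapter(raw)


# Illustrative raw records, one per provider.
RAW = [
    ("aws", {"InstanceId": "i-0abc", "Tags": [{"Key": "env", "Value": "prod"}]}),
    ("azure", {"id": "vm-web-01", "tags": {"env": "prod"}}),
    ("gcp", {"name": "batch-01", "labels": {"env": "dev"}}),
]

unified = [normalize(provider, raw) for provider, raw in RAW]
prod_ids = [r["id"] for r in unified if r["tags"].get("env") == "prod"]
print(prod_ids)
```

Even this tiny example needs three different ways to read one concept, a tag. Multiply that by every resource type and every API revision, and the size of the challenge becomes clearer.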

That’s why, for now, I see this more as a hypothesis, an idea to keep exploring rather than a clear requirement. I’m fascinated by the thought of what a central multi-cloud “graph” could unlock: faster investigations, smarter automation, tighter security, and perhaps a simpler way to make sense of our expanding environments.

Whether we build it ourselves, wait for a vendor to try again, or discover a new way to approach the problem, I’m eager to see how the industry experiments with this space in the years ahead. Because in the end, the more curious we stay, the better prepared we’ll be when the time comes to simplify the complexity we’ve created.