OCI Network Firewall Powered by Palo Alto Networks

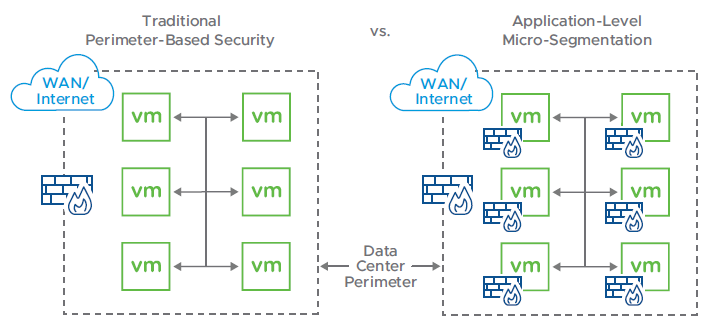

Enterprises running workloads in Oracle Cloud Infrastructure (OCI) often look for deeper inspection, granular control, and consistent policy enforcement: capabilities that go beyond what basic security groups and route tables provide.

Historically, many teams implemented these capabilities by deploying virtual appliances or building complex service chains using native components. While functional, those approaches introduced operational complexity, created scaling challenges, and often led to inconsistent enforcement across environments.

To address this, Oracle introduced the OCI Network Firewall in May 2022. It is a fully managed, stateful, Layer 7-aware firewall service built into the OCI platform and powered by Palo Alto Networks’ virtual firewall technology. Unlike a virtual appliance deployment, the service is provisioned and managed like any other OCI resource, offering deep packet inspection and policy enforcement without requiring you to manage the underlying infrastructure.

How the OCI Network Firewall Works

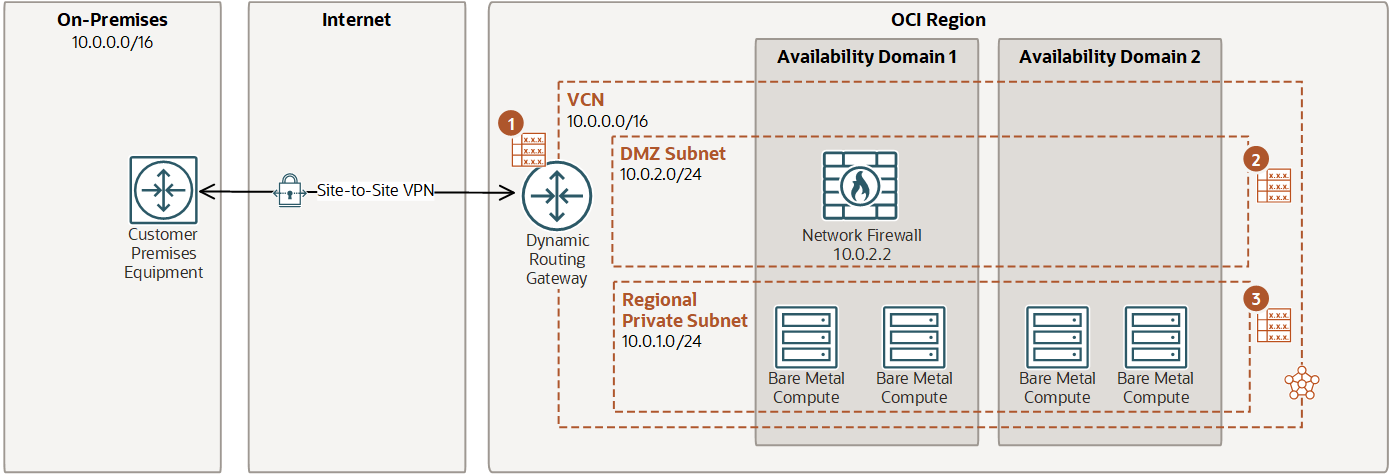

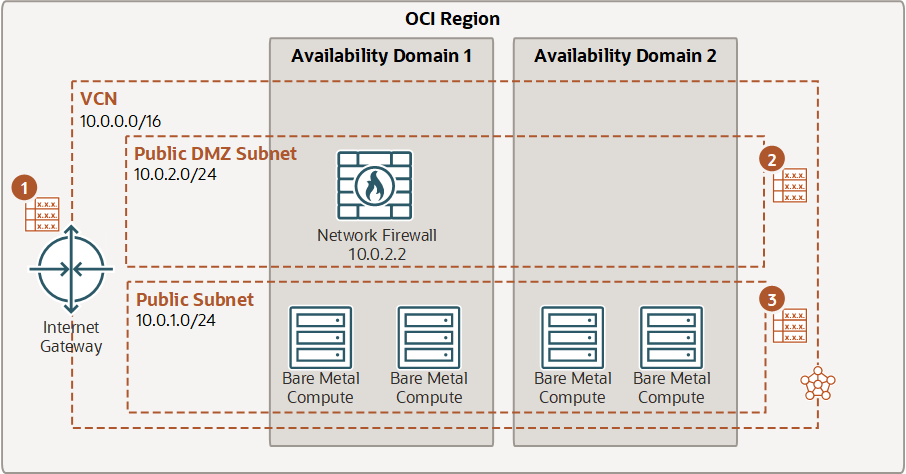

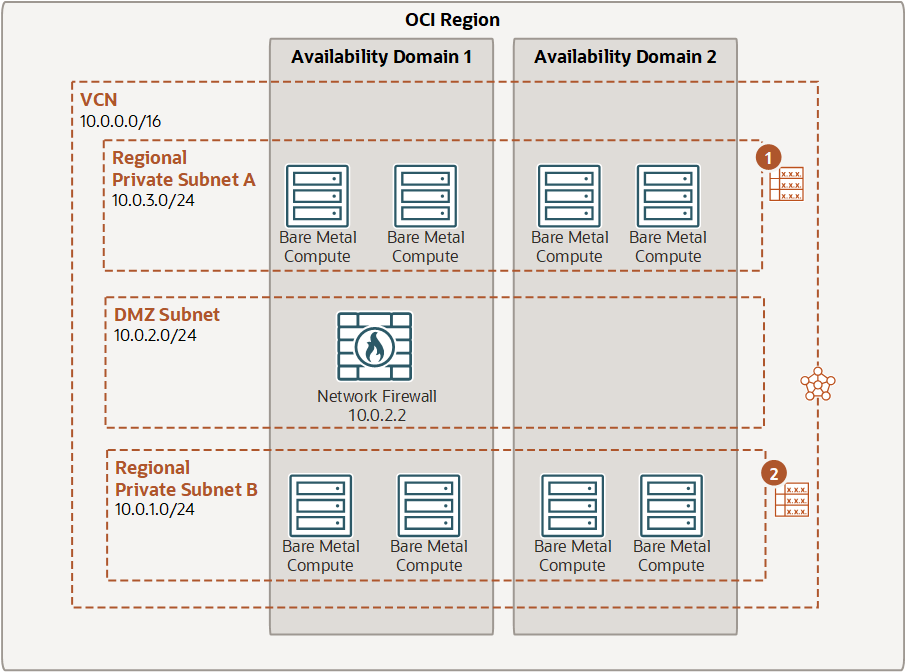

The OCI Network Firewall is deployed as a centralized, stateful firewall instance inside a Virtual Cloud Network (VCN). It operates as an inline traffic inspection point that you can insert into the flow of traffic between subnets, between VCNs, or between a VCN and the public internet. Instead of managing a virtual machine with a firewall image, you provision the firewall through the OCI console or Terraform, like any other managed service.

Under the hood, the firewall is powered by the Palo Alto Networks VM-Series engine. This enables deep packet inspection and supports policies based on application identity, user context, and known threat signatures – not just on IP addresses and ports. Once provisioned, the firewall is inserted into traffic paths using routing rules. You define which traffic flows should be inspected by updating route tables, either in subnets or at the Dynamic Routing Gateway (DRG) level. OCI automatically forwards the specified traffic to the firewall, inspects it based on your defined policies, and then forwards it to its destination.
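The routing-based insertion described above can be illustrated with a small model. The following is a minimal sketch, not OCI's implementation: it assumes a route table can be represented as a mapping from destination CIDR to a next-hop label and that the most specific matching route wins (longest-prefix match). The CIDRs and the labels `network-firewall` and `local` are hypothetical.

```python
import ipaddress

# Hypothetical subnet route table: destination CIDR -> next-hop label.
# The CIDRs and next-hop names are illustrative, not real OCI identifiers.
ROUTE_TABLE = {
    "0.0.0.0/0": "network-firewall",    # inspect internet-bound traffic
    "10.0.2.0/24": "network-firewall",  # inspect traffic to one sensitive subnet
    "10.0.0.0/16": "local",             # all other traffic in this range is not inspected
}


def next_hop(destination_ip, route_table):
    """Return the next hop for destination_ip using longest-prefix match."""
    address = ipaddress.ip_address(destination_ip)
    candidates = [
        (ipaddress.ip_network(cidr), hop)
        for cidr, hop in route_table.items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not candidates:
        return None  # no matching route
    # The most specific route (largest prefix length) wins.
    _, hop = max(candidates, key=lambda item: item[0].prefixlen)
    return hop
```

In this model, `next_hop("10.0.1.5", ROUTE_TABLE)` resolves to `local`, so that flow would never reach the firewall, while traffic to `10.0.2.15` or to a public address would be sent for inspection.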

Logging and telemetry from the firewall are natively integrated with the OCI Logging and Monitoring services, making it possible to ingest logs, trigger alerts, or forward data to external tools. By default, a firewall instance provides up to 4 Gbps of throughput. High availability is built into the service, with deployments spanning fault domains for resiliency.

OCI Network Firewall is a highly available and scalable instance that you create in a subnet. It applies the rules defined in its attached firewall policy to the network traffic routed through it, and routing in the VCN directs traffic to and from the firewall. The default throughput of 4 Gbps can be raised to as much as 25 Gbps on request. The first 10 TB of data is processed at no additional charge.

The firewall supports advanced features such as intrusion detection and prevention (IDS/IPS), URL and DNS filtering, and decryption of TLS traffic. Threat signature updates are managed automatically by the service. Policies can be configured through the OCI console or APIs, or integrated with Panorama for centralized policy management across multi-cloud or hybrid environments.

Integration with Existing OCI Networking

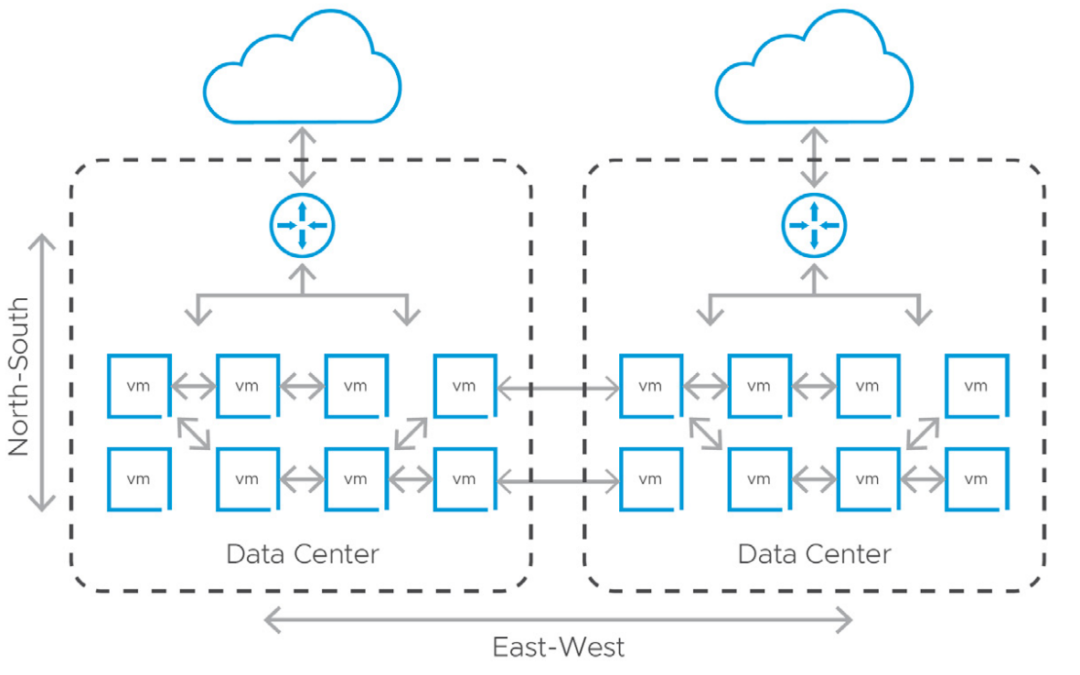

OCI Network Firewall fits well into the kinds of network topologies most commonly used in OCI, particularly hub-and-spoke architectures or centralized inspection designs. In many deployments, customers set up multiple workload VCNs (the spokes) that connect to a central hub VCN via local peering. The hub typically hosts shared services such as logging, DNS, internet access via a NAT gateway, and now, the network firewall.

By configuring route tables in the spoke VCNs to forward traffic to the firewall endpoint in the hub, organizations can centralize inspection and policy enforcement across multiple VCNs. This model avoids having to deploy and manage firewalls in each spoke and enables consistent rules for outbound internet traffic, inter-VCN communication, or traffic destined for on-premises networks over the DRG.
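A simple way to reason about this model is to check that every spoke actually sends its default route to the hub firewall. The sketch below assumes each spoke's route table is available as a plain dictionary; the spoke names, the `hub-firewall` label, and the `nat-gateway` label are hypothetical and do not correspond to real OCI resource identifiers.

```python
# Hypothetical label for the firewall endpoint in the hub VCN.
FIREWALL_HOP = "hub-firewall"

# Hypothetical spoke route tables: spoke name -> {destination CIDR: next hop}.
SPOKE_ROUTES = {
    "spoke-app": {"0.0.0.0/0": "hub-firewall", "10.2.0.0/16": "hub-firewall"},
    "spoke-data": {"0.0.0.0/0": "hub-firewall"},
    "spoke-dev": {"0.0.0.0/0": "nat-gateway"},  # bypasses central inspection
}


def spokes_bypassing_firewall(spoke_routes, firewall_hop=FIREWALL_HOP):
    """Return sorted spoke names whose default route does not target the firewall."""
    return sorted(
        name
        for name, routes in spoke_routes.items()
        if routes.get("0.0.0.0/0") != firewall_hop
    )
```

With the sample data, `spokes_bypassing_firewall(SPOKE_ROUTES)` flags only `spoke-dev`. A check like this could run against exported route tables as part of a review, though how the tables are exported is outside the scope of the sketch.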

The insertion of the firewall is purely routing-based, meaning there’s no need for IPsec tunnels or overlays to redirect traffic. As a result, latency is minimal and the setup remains relatively simple. With high availability built in and native integration into OCI’s monitoring and logging stack, the firewall becomes a part of the existing infrastructure.

Design Considerations

While the OCI Network Firewall removes much of the operational burden of managing security appliances, there are still architectural choices that influence how effective the deployment is. One key consideration is routing. Since traffic inspection is based on routing decisions, the firewall only sees traffic that has been explicitly directed through it. That means route tables must be carefully designed to forward the correct flows.

Firewall placement also plays a role in the overall design. Centralized deployment in a hub VCN can simplify management and enforce consistent policy, but it might introduce additional hops in the network path. Depending on the traffic patterns and latency sensitivity of your applications, you may need to evaluate whether east-west inspection should also be enabled or limited to specific flows.

Monitoring and visibility should be planned from the start. Logs are not retained indefinitely, so it is a best practice to integrate them with OCI Logging Analytics, push them to a SIEM, or export them to object storage for long-term retention. You should also account for log volume and the associated cost, especially in high-throughput environments.
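Log volume can be estimated with simple arithmetic before committing to a retention strategy. The sketch below is a rough model only: the log entry rate, the average entry size, and the retention period are inputs you would measure or choose yourself, and none of the sample figures come from OCI documentation.

```python
SECONDS_PER_DAY = 86_400


def daily_log_volume_gb(entries_per_second, bytes_per_entry):
    """Estimate log volume per day in decimal gigabytes (1 GB = 10**9 bytes)."""
    if entries_per_second < 0 or bytes_per_entry < 0:
        raise ValueError("inputs must be non-negative")
    return entries_per_second * bytes_per_entry * SECONDS_PER_DAY / 10**9


def retained_log_volume_gb(entries_per_second, bytes_per_entry, retention_days):
    """Estimate the stored volume once the retention window is full."""
    if retention_days < 0:
        raise ValueError("retention_days must be non-negative")
    return daily_log_volume_gb(entries_per_second, bytes_per_entry) * retention_days
```

For example, a hypothetical 2,000 log entries per second at 500 bytes each works out to roughly 86 GB per day, which is the kind of figure worth knowing before choosing between a SIEM and object storage for long-term retention.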

Throughput limits are another factor. A single firewall instance supports up to 4 Gbps by default, so if your architecture requires higher performance, you may need to segment inspection points or request an increase to as much as 25 Gbps. Understanding these thresholds is important when designing for resilience and future growth.
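The sizing decision can be framed as a quick calculation. This is a minimal sketch that uses the 4 Gbps and 25 Gbps figures from this article and assumes, as a simplification, that traffic can be split evenly across inspection points; real traffic is rarely that uniform, so treat the result as a lower bound.

```python
import math

DEFAULT_GBPS = 4  # per-instance throughput stated in this article
MAX_GBPS = 25     # upper limit available on request, per this article


def inspection_points_needed(required_gbps, per_instance_gbps=DEFAULT_GBPS):
    """Return the minimum number of firewall instances for the required throughput.

    Assumes traffic divides evenly across instances (a simplification).
    """
    if required_gbps <= 0:
        raise ValueError("required_gbps must be positive")
    if not 0 < per_instance_gbps <= MAX_GBPS:
        raise ValueError("per_instance_gbps must be between 0 and 25")
    return math.ceil(required_gbps / per_instance_gbps)
```

A workload needing 10 Gbps would require three inspection points at the default limit, or a single instance if the increased limit has been granted, which is often the deciding factor between segmenting and scaling up.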

Finally, while the firewall is managed, policy complexity still needs governance. Deep inspection features can be powerful but require thoughtful rule design to avoid unnecessary latency or policy overlap.
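One form of policy overlap is a rule that can never match because an earlier, broader rule already covers it. The sketch below assumes rules are evaluated in order with the first match winning, and it models only source and destination CIDRs; real firewall policies also match on applications, services, and URLs, which this example ignores. The `Rule` type and the rule names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    source: str       # source CIDR
    destination: str  # destination CIDR
    action: str       # for example "allow" or "drop"


def covers(outer_cidr, inner_cidr):
    """Return True if inner_cidr lies entirely within outer_cidr."""
    return ipaddress.ip_network(inner_cidr).subnet_of(ipaddress.ip_network(outer_cidr))


def shadowed_rules(rules):
    """Return (shadowed, shadowing) name pairs, assuming first-match evaluation."""
    pairs = []
    for index, later in enumerate(rules):
        for earlier in rules[:index]:
            if covers(earlier.source, later.source) and covers(
                earlier.destination, later.destination
            ):
                pairs.append((later.name, earlier.name))
                break  # report only the first rule that shadows this one
    return pairs


# Illustrative policy: the second rule is hidden behind the first.
EXAMPLE_RULES = [
    Rule("allow-app", "10.0.0.0/16", "10.1.0.0/16", "allow"),
    Rule("drop-db", "10.0.1.0/24", "10.1.2.0/24", "drop"),
    Rule("allow-web", "10.2.0.0/16", "0.0.0.0/0", "allow"),
]
```

Here `shadowed_rules(EXAMPLE_RULES)` reports that `drop-db` is shadowed by `allow-app`, meaning the intended drop would never take effect under the stated ordering assumption.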

Final Thoughts

The OCI Network Firewall adds a much-needed layer of native, centralized security enforcement to Oracle Cloud. It brings next-generation firewall capabilities into the platform in a way that aligns with how modern infrastructure is built: with automation, centralized control, and reduced operational overhead. For teams that have struggled to scale or standardize firewalling in OCI using virtual appliances, this service simplifies a lot of that work.

That said, it’s still a tool. Its effectiveness depends on how well it’s integrated into your network design and how clearly your security policies are defined. For organizations moving toward more distributed architectures or running regulated workloads in OCI, it’s a step in the right direction – a platform-native way to improve visibility and control without losing flexibility.

Note: Please be aware that OCI Network Firewall design concepts and configurations are part of the Oracle Cloud Infrastructure 2025 Networking Professional exam.