Open Source and Vendor Lock-In

When talking about multi-cloud and cost efficiency, open source is often discussed because it can be deployed and operated on all private and public clouds. From my experience and conversations with customers, open source is most of the time directly connected to discussions about vendor lock-ins.

Organizations want to avoid or minimize the use of proprietary software so that they do not become dependent on a particular vendor or service. Several factors create such a dependency, for example proprietary technology or services and long-term contracts. It is also about not giving a specific supplier leverage over your organization, for example when this supplier increases its prices. Another reason to avoid vendor lock-in is the notion that proprietary software can limit or reduce innovation in your environment.

CNCF and Kubernetes

Let us take Kubernetes as an example. Kubernetes, which is also known as K8s, was contributed as an open-source seed technology by Google to the Linux Foundation in 2015, which formed the sub-foundation “Cloud Native Computing Foundation” (CNCF). Founding CNCF members include companies like Google, Red Hat, Intel, Cisco, IBM, and VMware.

Currently, the CNCF has over 167k project contributors, over 800 members, and more than 130 certified Kubernetes distributions and platforms. Open source projects and the adoption of cloud native technologies are constantly growing.

The Cloud Native Computing Foundation, its members, and contributors have the same mission in mind. They want to drive cloud native adoption by providing open and cloud native software that “can be implemented on a variety of architectures and operating systems”. This is one of the values described in the CNCF mission statement.

If you access the CNCF Cloud Native Interactive Landscape, you will get an understanding of how many open source projects are supported by the CNCF and this open source community.

Since it was donated to the CNCF, a lot of companies on this planet have been using Kubernetes, or at least a distribution of it:

- Amazon Elastic Kubernetes Service Distro (Amazon EKS-D)

- Azure (AKS) Engine

- Cisco Intersight Kubernetes Service

- K3s – Lightweight Kubernetes

- MetalK8s

- Oracle Cloud Native Environment

- Rancher Kubernetes

- Red Hat OpenShift

- VMware Tanzu Kubernetes Grid (TKG)

A distribution, or distro, is when a vendor takes core Kubernetes — that’s the unmodified, open source code (although some modify it) — and packages it for redistribution. Usually, this entails finding and validating the Kubernetes software and providing a mechanism to handle cluster installation and upgrades. Many Kubernetes distributions include other proprietary or open source applications.

These were just a few of the total 66 certified Kubernetes distributions. What about the certified hosted Kubernetes service offerings? Let me list here some of the popular ones out of the 53 total:

- Alibaba Cloud Container Service for Kubernetes (ACK)

- Amazon Elastic Container Service for Kubernetes (EKS)

- Azure Kubernetes Service (AKS)

- Google Kubernetes Engine (GKE)

- Nutanix Kubernetes Engine (formerly Karbon)

- Oracle Container Engine for Kubernetes (OKE)

- Red Hat OpenShift Dedicated

While Kubernetes is open source, different vendors create curated versions of Kubernetes, add some proprietary services, and then offer it as a managed service. The notion of open source is that you can take all of your applications and their components and leave a specific cloud provider if needed.

Trade-Offs

Open source software can make cloud migrations easier in some ways (e.g., if you use the same database in all the clouds). Kubernetes is designed to be cloud-agnostic, meaning that it can run on multiple cloud platforms. This can make it easier to move applications and workloads between different clouds without needing to rewrite the code or reconfigure the infrastructure. At least this was the expectation of Kubernetes. And it should be clear by now that a managed service or platform means a lock-in, no matter if this is GKE, EKS, AKS, or VMware Tanzu for Kubernetes.

You cannot avoid a (vendor) lock-in. You have the same with open source. It is about trade-offs.

If you deploy workloads in multiple clouds, you end up with different vendors/partners, different solutions, and technologies. For me, it is about operations at the end of the day. How do you manage and operate multiple clouds and their different managed services? How do you deploy and use open source software in different clouds?

I have not seen one customer saying that they moved away from AKS, EKS, GKE, or Tanzu and went back to the upstream version of Kubernetes and built the application platform around it by themselves from scratch with other open source projects. You can do it, but you need someone who did that before and can guide you. Why?

There are other container-related technologies like databases, streaming & messaging, service proxies, API gateways, cloud native storage, container runtimes, service meshes, and cloud native network projects. Let us have a look at the different categories and examples:

- Database, 62 different projects (Cassandra, MySQL, Redis, PostgreSQL, Scylla)

- Storage, 66 different projects (Container Storage Interface, MinIO, Velero)

- Network, 25 different projects (Antrea, Cilium, Flannel, Container Network Interface, Open vSwitch, Calico, NGINX)

- Service Proxy, 21 different projects (Contour, Envoy, HAProxy, MetalLB, NGINX)

- Observability & Analysis, 145 projects (Grafana, Icinga, Nagios, Prometheus)

It is complex to deploy, integrate, operate and maintain different open source projects that you most probably need to integrate with proprietary software as well. So, one trade-off and disadvantage of open source software could be that it is developed and maintained by a community of volunteers. Some companies need enterprise support.

Note: Do not forget that even though you may be using open source software in different private and public clouds, you cannot change the fact that you most probably still have to use specific services of each cloud platform (e.g., network and storage). In this case, you have a dependency or lock-in on a different architectural layer.
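To make this note a bit more concrete, here is a minimal Python sketch of how one could spot such dependencies in Kubernetes manifests. It is only an illustration under assumptions: the function name `find_provider_dependencies` and the `PROVIDER_MARKERS` table are hypothetical, the marker list is far from complete, and the manifests are simplified dictionaries rather than real cluster objects.

```python
# Hypothetical table of identifier prefixes that tie a manifest to one cloud.
# Illustrative only; a real environment would need a much longer list.
PROVIDER_MARKERS = {
    "aws": ("service.beta.kubernetes.io/aws-", "ebs.csi.aws.com"),
    "azure": ("service.beta.kubernetes.io/azure-", "disk.csi.azure.com"),
    "gcp": ("cloud.google.com/", "pd.csi.storage.gke.io"),
}


def find_provider_dependencies(manifest):
    """Return a sorted list of (provider, identifier) pairs found in a manifest dict."""
    hits = set()
    # Annotation keys often carry load balancer settings of a specific cloud.
    annotations = manifest.get("metadata", {}).get("annotations", {})
    candidates = list(annotations.keys())
    # StorageClass objects name their CSI driver in the top-level "provisioner" field.
    if "provisioner" in manifest:
        candidates.append(manifest["provisioner"])
    for value in candidates:
        for provider, markers in PROVIDER_MARKERS.items():
            if any(value.startswith(marker) for marker in markers):
                hits.add((provider, value))
    return sorted(hits)


# Simplified example objects (assumed for this sketch).
service = {
    "kind": "Service",
    "metadata": {
        "name": "web",
        "annotations": {"service.beta.kubernetes.io/aws-load-balancer-type": "nlb"},
    },
    "spec": {"type": "LoadBalancer"},
}
storage_class = {
    "kind": "StorageClass",
    "metadata": {"name": "fast"},
    "provisioner": "disk.csi.azure.com",
}
portable = {"kind": "Deployment", "metadata": {"name": "web"}}

for obj in (service, storage_class, portable):
    print(obj["kind"], find_provider_dependencies(obj))
```

The Deployment itself stays portable, while the Service and the StorageClass are the objects that bind you to a specific cloud. That is the "different architectural layer" mentioned in the note.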

If it is about costs, then open source can be helpful here, sure, but we shouldn’t forget the additional operational efforts. You will never get the costs down to zero with open source.
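A back-of-the-envelope calculation shows why. The Python sketch below is purely illustrative: the function `yearly_cost` is hypothetical and all figures are invented, so the result says nothing about which option is cheaper in your environment. It only shows that a license fee of zero does not mean a total of zero.

```python
def yearly_cost(license_fee, engineer_hours, hourly_rate, support_contract=0):
    """Yearly cost = license fees + operational labor + optional support contract."""
    return license_fee + engineer_hours * hourly_rate + support_contract


# Invented example figures, not measurements or vendor prices.
self_managed_open_source = yearly_cost(
    license_fee=0, engineer_hours=1200, hourly_rate=100
)
managed_service = yearly_cost(
    license_fee=60000, engineer_hours=300, hourly_rate=100
)

print("Self-managed open source:", self_managed_open_source)
print("Managed service:", managed_service)
```

With other assumptions for hours, rates, or fees, the comparison can easily flip. The point is that operational effort belongs in the calculation.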

The Reality

Graduated and incubating CNCF projects are considered to be running stable and can be used in production. Some examples would be Envoy, etcd, Harbor, Kubernetes, Open Policy Agent, and Prometheus.

Companies and developers have different motivations for choosing open source. The commonly cited ones: open source software lowers your total cost of ownership (TCO), is created by skillful and talented people, gives you more flexibility because of non-proprietary standards, is cloud agnostic, has strong and fast support from the community when bugs are found, and is considered to be secure for use in production.

Open source is so well liked that its usage even attracts talent. There is no other community of this size that is collaborating on innovation and industry standardization!

But the Apache Log4j vulnerability showed the whole world that open source software needs to become more secure, and that project contributors and users need to ensure the integrity of the source code, build, and distribution in all open source software since a growing number of companies are using open source software as part of their solutions and managed services.

There are certain situations where open source software needs to be integrated with proprietary software. Commercial software can also provide more enterprise-readiness and a complete solution, whereas with open source software you have to deploy and use a combination of different projects to achieve the same. This could mean a lot of effort for a company. And you have to ensure the interoperability of the implemented software stack.

Technical issues always occur, no matter if it’s open source or proprietary software. Open source software does not provide the enterprise support some organizations are looking for.

While one has to decide what is best for their company and strategy, a lot of people are overwhelmed by the huge and confusing CNCF landscape that gives you so many options. Instead of deploying and integrating different open source projects by themselves, organizations are looking for public cloud service providers that take care of the management and the ecosystem (network, storage, databases, etc.) related to Kubernetes. This is seen as the easiest way to get started with cloud native.

What has started for some organizations in one public cloud with one hosted Kubernetes offering has sometimes grown to a landscape with three different public clouds and four different Kubernetes distributions or hosted services.

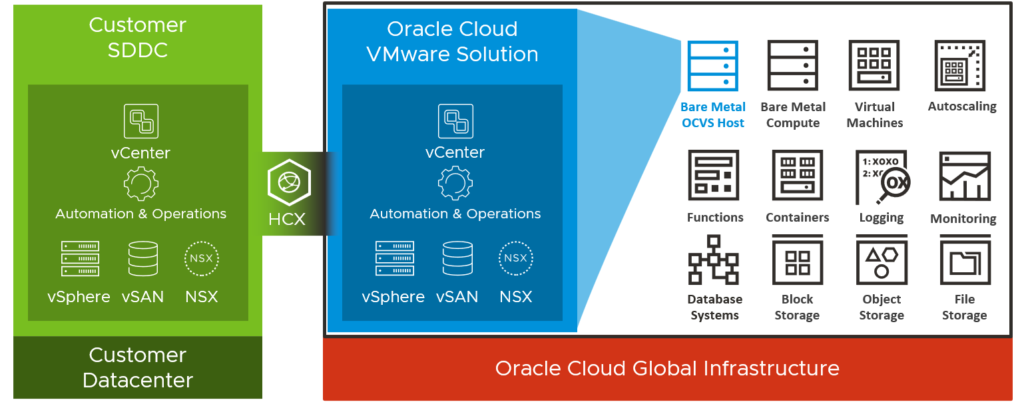

Example: Companies may have started with Kubernetes or VMware Tanzu on-premises and use AKS, EKS and GKE in their public clouds.

How do you cost-efficiently manage all these different distributions and services over different clouds with different management consoles and security solutions? Tanzu Mission Control and Tanzu Application Platform could be an option.

VMware and Open Source

VMware and some of their engineers are part of the community and they actively contribute to projects like Kubernetes, Harbor, Carvel, Antrea, Contour and Velero. Interested in some stats (filtered by the last decade)?

- Kubernetes – Top 3 Contributors: Google, Red Hat, VMware

- Istio – Top 3 Contributors: Google, IBM, Huawei (#7 VMware)

- Antrea – Top 3 Contributors: VMware, Amazon, Microsoft

- Buildpacks – Top 3 Contributors: Pivotal, Heroku, VMware

Open source is an essential part of any software strategy—from a developer’s laptop to the data center. At VMware, we’re committed to open source and their communities so that we can all deliver better solutions: software that’s more secure, scalable, and innovative. VMware Tanzu is open source aligned and built on a foundation of open source projects.

VMware Tanzu

VMware (Tanzu) leverages some of the leading open source technologies in the Kubernetes ecosystem. They use Cluster API for cluster lifecycle management, Harbor for container registry, Contour for ingress, Fluent Bit for logging, Grafana and Prometheus for monitoring, Antrea and Calico for container networking, Velero for backup and recovery, Sonobuoy for conformance testing, and Pinniped for authentication.

VMware Tanzu Application Platform

According to VMware, they built Tanzu Application Platform (TAP) with an open source-first mindset. Here are some of the most popular technologies and projects:

More information can be found here.

VMware Data Services

VMware also has a family of on-demand caching, messaging, and database software (from the acquisition of Pivotal):

- VMware GemFire – Fast, consistent data for web-scaling concurrent requests fulfills the promise of highly responsive applications.

- VMware RabbitMQ – A fast, dependable enterprise message broker provides reliable communication among servers, apps, and devices.

- VMware Greenplum – VMware Greenplum is a massively parallel processing database. Greenplum is based on open source Postgres, enabling Data Warehousing, aggregation, AI/ML and extreme query speed.

- VMware SQL – VMware’s open-source SQL database (Postgres & MySQL) is a relational database service providing cost-efficient and flexible deployments on-demand and at scale. Available on any cloud, anywhere.

Watch the VMware Explore 2022 session “Introduction to VMware Tanzu Data Services” to learn more about this portfolio.

Developers could start with the Tanzu Developer Center.

VMware SQL and DBaaS

If you are interested in building a DB-as-a-Service offering based on PostgreSQL, MySQL or SQL Server, I recommend the following resources from Cormac Hogan:

- A closer look at VMware Data Services Manager and Project Moneta

- VMware Data Services Manager – Architectural Overview and Provider Deployment

- VMware Data Services Manager – Agent Deployment

- VMware Data Services Manager – Database Creation

- VMware Data Services Manager – SQL Server Database Template

- Introduction to VMware Data Services Manager (video)

Closing

As always, you or your architects have to decide what makes the most sense for your company, your IT landscape, and your applications. Make or buy? Open source or proprietary software? Happily married or locked in? What is vendor lock-in for you?

In any case, VMware embraces open source!
