Azure VMware Solution

Update May 2020:

On May 4th Microsoft announced the preview (and the “next evolution”) of Azure VMware Solution, which is now a first-party service designed, built and supported by Microsoft and endorsed by VMware. This is an entirely new service, delivered and supported by Microsoft, and it does not replace the current AVS service by CloudSimple at this time. It is purely a Microsoft offering and has nothing to do with a Virtustream-branded Azure VMware Solution offering either. In short: a much cleaner offering and service with a contract only between Microsoft and VMware.

— Original Text below —

Since Dell Technologies World 2019 it’s clear: VMware and Microsoft are not frenemies anymore!

Dell Technologies and Microsoft announced an expanded partnership which should help customers and provide them more choice and flexibility for their future digital workspace projects or cloud integrations.

One result and announcement of this new partnership is the still pretty new offering called “Azure VMware Solution” (AVS). Other people and websites may also call it “Azure VMware Solution by Virtustream” or “Azure VMware Solution by CloudSimple”.

AVS is a Microsoft first-party offering, meaning that it’s sold and supported by Microsoft, NOT VMware. This is one very important difference compared with VMC on AWS. Operation, development and delivery are handled by a VMware Cloud Verified and Metal-as-a-Service VCPP (VMware Cloud Provider Program) partner: CloudSimple or Virtustream (a subsidiary of Dell Technologies). AVS is fully supported and verified by VMware.

VMware Metal-as-a-Service Authorized partners Virtustream and CloudSimple run the latest VMware software-defined data center technology, ensuring customers enjoy the same benefits of a consistent infrastructure and consistent operations in the cloud as they achieve in their own physical data center, while allowing customers to also access the capabilities of Microsoft Azure.

So, why would someone like Microsoft run VMware’s Cloud Foundation (VCF) stack on Azure? The answer is quite simple. VMware has over 500,000 customers and an estimated 70 million VMs, most of which run on-premises. Microsoft doesn’t care whether virtual machines (VMs) run on vSphere; what it cares about in the end is Azure and consumption. AVS is just another form of Azure, Microsoft says. I would say it’s very unlikely that a customer moves on to Azure native once they are onboarded via Azure VMware Solution.

Microsoft would like to see some of those 70 million VMs running on its platform, even if that means VCF on top of Azure servers. Customers should get the option to move to the Azure cloud and use Azure native services (e.g. Azure NetApp Files, Azure databases etc.), while keeping the choice and flexibility to use the existing technology stack, ecosystem and tools (e.g. automation or operations) they are familiar with: all or part of VCF, coupled with products from the vRealize Suite, plus any other third-party VMware integrations they might have for data protection or backup. According to Microsoft, this is one unique specialty of AVS: there is no restricted functionality as you may experience in other VMware clouds.

From VMware’s perspective, most of our customers are already Microsoft customers as well. In addition, VMware’s vision is to provide freedom of choice and flexibility, just like Microsoft, but with one small difference: to be cloud and infrastructure agnostic. This vision says that VMware doesn’t care if you run your workloads on-prem, on AWS, Azure or GCP (or even in a VCPP partner’s cloud) as long as they run on the VCF stack. Cloud is not a choice or destination anymore; it has become an operating model.

And to keep it an operating model without creating a new silo or vendor lock-in, it makes total sense to use VMware’s VCF on top of AWS, Azure, Google Cloud, Oracle, Alibaba Cloud or any other VCPP partner. This ensures that customers have the choice and flexibility they are looking for, coupled with what may still be a surprisingly “new” or “special” kind of public cloud. If your vision is also about workload mobility on any cloud, then VMware is the right choice and partner!

Use Cases

What are the reasons to move to Azure and use Azure VMware Solution?

If you don’t want to scale up or scale out your own infrastructure and would like to get additional capacity almost instantly, then speed is definitely one reason. Microsoft can spin up a new AVS SDDC in under 60 minutes, which is impressive. How is this possible? With automation! This proves that VMware Cloud Foundation is the data center operating system of the future and that automation is a key design requirement. If you would like to experience nearly the same speed and work with the same principles as public cloud providers do, then VCF is the way to go.

The remaining use cases are generally the same ones we always discuss for cloud: besides speed, they are agility, (burstable) capacity, expansion into a new geography, DRaaS, or app modernization using cloud native services.

Microsoft Licenses

What I have learned from this MS Ignite recording is that you can bring your existing MS licenses to AVS and don’t have to buy them AGAIN. In any other cloud this is not the case.

This information can be found here as well:

Beginning October 1, 2019, on-premises licenses purchased without Software Assurance and mobility rights cannot be deployed with dedicated hosted cloud services offered by the following public cloud providers: Microsoft, Alibaba, Amazon (including VMware Cloud on AWS), and Google. They will be referred to as “Listed Providers”.

Regions

If you check the Azure documentation, you’ll see that AVS is only available in US East and West Azure regions, but should be available in Western Europe “in the near future”. In the YouTube video above Microsoft was showing this slide which shows their global rollout strategy and the planned availability for Q2 2020:

According to the Azure regions website Azure VMware Solution is available at the following locations and countries in Europe:

So, North Europe (UK) is expected for H2 2020 and AVS is already available in the West Europe Azure region. No information is available about the Swiss regions. Even though the slide from the MS Ignite recording may suggest availability by May 2020, it’s very unlikely that AVS will be available in Zurich or Geneva before 2021.

Azure VMware Solution Components

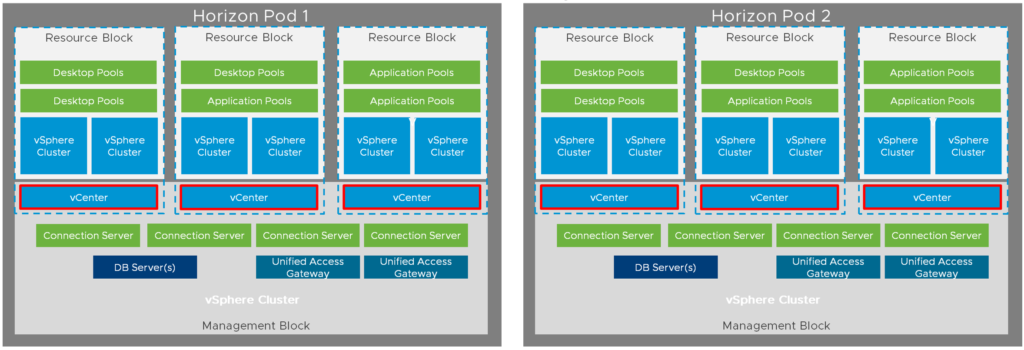

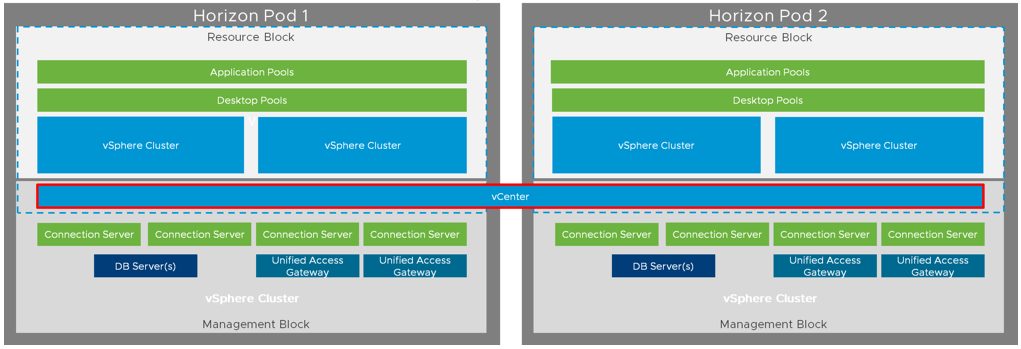

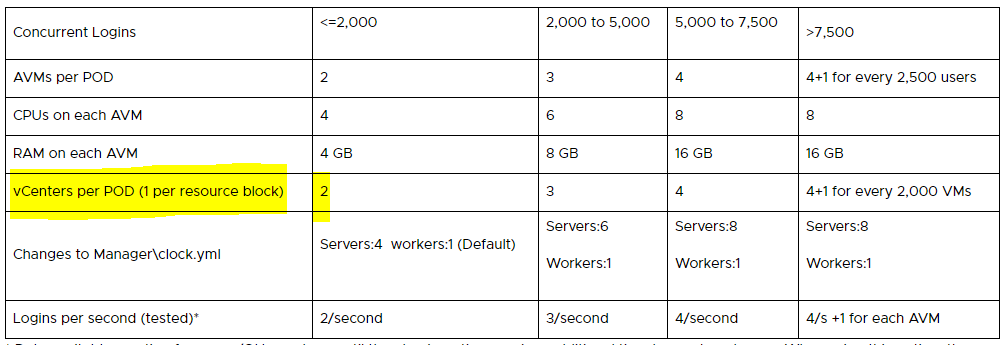

You need at least three hosts to get started with the AVS service and you can scale up to 16 hosts per cluster with an SLA of 99.9%. More information about the available node specifications for your region can be found here. At the moment CloudSimple offers the following host types:

- CS28 node: CPU: 2x 2.2 GHz, total 28 cores, 48 HT. RAM: 256 GB. Storage: 1600 GB NVMe cache, 5760 GB data (All-Flash). Network: 4x 25 GbE NIC

- CS36 node: CPU: 2x 2.3 GHz, total 36 cores, 72 HT. RAM: 512 GB. Storage: 3200 GB NVMe cache, 11520 GB data (All-Flash). Network: 4x 25 GbE NIC

- CS36m node (only option for West Europe): CPU: 2x 2.3 GHz, total 36 cores, 72 HT. RAM: 576 GB. Storage: 3200 GB NVMe cache, 13360 GB data (All-Flash). Network: 4x 25 GbE NIC
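To get a rough feeling for sizing, the node figures above can be multiplied out per cluster. The following is only a minimal sketch in Python: the names `NodeType`, `NODE_TYPES` and `raw_cluster_capacity` are made up for this illustration and are not part of any Microsoft or CloudSimple tooling. It reports raw totals only, so usable vSAN capacity will be lower once storage policies and overhead are taken into account.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """Per-host figures copied from the host type list above."""
    name: str
    cores: int
    ram_gb: int
    cache_gb: int  # NVMe cache tier
    data_gb: int   # all-flash data tier


# Hypothetical lookup table, filled with the numbers from this post.
NODE_TYPES = {
    "CS28": NodeType("CS28", cores=28, ram_gb=256, cache_gb=1600, data_gb=5760),
    "CS36": NodeType("CS36", cores=36, ram_gb=512, cache_gb=3200, data_gb=11520),
    "CS36m": NodeType("CS36m", cores=36, ram_gb=576, cache_gb=3200, data_gb=13360),
}

# Cluster limits as stated in the text: at least 3 hosts, at most 16 per cluster.
MIN_HOSTS = 3
MAX_HOSTS = 16


def raw_cluster_capacity(node_name: str, hosts: int) -> dict:
    """Return raw (not usable) totals for a cluster of identical hosts."""
    if not MIN_HOSTS <= hosts <= MAX_HOSTS:
        raise ValueError(
            f"a cluster needs between {MIN_HOSTS} and {MAX_HOSTS} hosts, got {hosts}"
        )
    node = NODE_TYPES[node_name]
    return {
        "hosts": hosts,
        "cores": node.cores * hosts,
        "ram_gb": node.ram_gb * hosts,
        "raw_data_gb": node.data_gb * hosts,
    }


if __name__ == "__main__":
    # Compare the smallest and the largest possible CS36m cluster.
    for hosts in (MIN_HOSTS, MAX_HOSTS):
        print(raw_cluster_capacity("CS36m", hosts))
```

Calling `raw_cluster_capacity("CS36m", 3)` gives the raw totals for the smallest possible West Europe cluster. Anything outside the 3 to 16 host range raises a `ValueError`.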

I think it’s clear that the hypervisor used is vSphere and that it’s maintained by Microsoft and not by VMware. There is no host-level access, but Microsoft offers a special “just in time” privileged access (root access) feature, which allows you to install software bits you might need, for example for 3rd party software integrations.

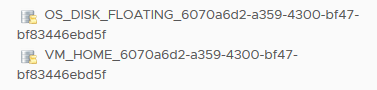

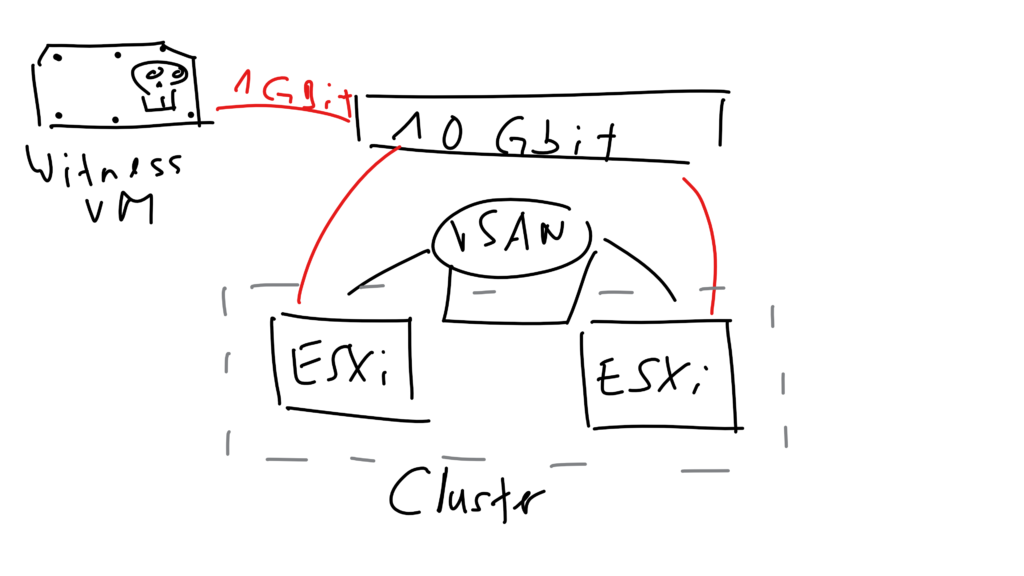

The storage infrastructure is based on vSAN with all-flash persistent storage and an NVMe cache tier. More capacity can be made available by adding nodes or by using Azure storage offerings, which can be attached to VMs directly.

Networking and security are based on NSX-T, which fully supports micro-segmentation.

To offer choice, Microsoft gives you the option to manage and see your AVS VMware infrastructure via vCenter or Azure Resource Manager (ARM). The ARM integration will allow you to create, start, stop and delete virtual machines and is not meant to replace existing VMware tools.

Microsoft support is your single point of contact and CloudSimple contacts VMware if needed.

Connectivity Options

CloudSimple provides the following connectivity options to connect to your AVS region network:

- ExpressRoute (high-traffic option – for migration and on-prem to Azure)

- Site-to-Site VPN (use your existing network infrastructure)

- Point-to-Site VPN (for management purposes only)

Depending on the connectivity option you have different ways to bring your VMs to your AVS private cloud:

- vMotion (also possible to use the Fling Cross vCenter Workload Migration Utility)

- HCX (available by request, ExpressRoute needed)

- 3rd party tools (Zerto, Veeam etc.)

How do I get started?

You have to contact your Microsoft account manager or business development manager if you would like to know more. VMware account representatives are also available to support you. If you want to learn more, check https://aka.ms/startavs.

Can I burn my existing Azure Credits?

Yes. Customers with Azure credits can use them through Azure VMware Solution.