Supercloud – A Hybrid Multi-Cloud

I thought it was time to finally write a piece about superclouds. Call it supercloud, the new multi-cloud, a hybrid multi-cloud, cross-cloud, or a metacloud: different terms, same meaning. I may be biased, but I am convinced that VMware is in the pole position for this new architecture and approach.

Let me also tell you this: superclouds are nothing new. Some of you believe that the idea of a supercloud is something new, something modern. Some of you may also think that cross-cloud services, workload mobility, application portability, and data gravity are new, complex topics of the "modern world" that need to be discussed or solved in 2023 and beyond. Guess what: most of these challenges and ideas have existed for more than 10 years!

Cloud-First is not cool anymore

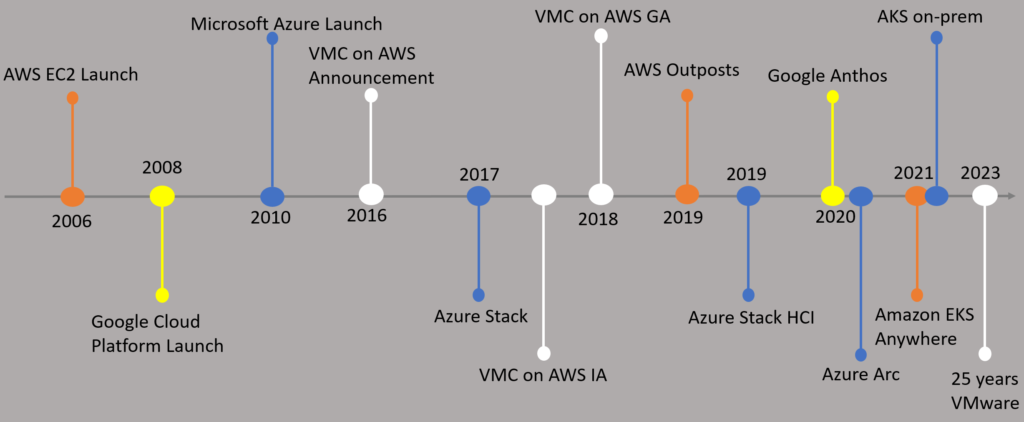

There is clear evidence that a cloud-first approach is no longer cool, nor the ideal approach. Do you remember, about a dozen years ago, when analysts believed that local data centers were going to disappear and the IT landscape would consist only of public clouds, aka hyperscalers? Have a look at this timeline:

We can clearly see when public clouds like AWS, Google Cloud, and Microsoft Azure appeared on the scene. A few years later, the world realized that the future is hybrid or multi-cloud. In 2019, AWS launched "Outposts"; Microsoft made Azure Arc and its on-premises Kubernetes offering available a few years later.

Google, AWS, and Microsoft changed their messaging from “we are the best, we are the only cloud” to “okay, the future is multi-cloud, we also have something for you now”. Consistent infrastructure and consistent operations became almost everyone’s marketing slogan.

As you can also see above, VMware announced its hybrid cloud offering "VMware Cloud on AWS" in 2016. Initial availability followed a year later, and it has been generally available since 2018.

From Internet to Interclouds

Before anyone coined the term "supercloud", people were talking about the need for an "intercloud". In 2010, Vint Cerf, the so-called "Father of the Internet", shared his opinions and predictions on the future of cloud computing. He talked about the potential need for, and importance of, interconnecting different clouds.

About 13 years ago, Cerf already understood that an intercloud was needed because users should be able to move data and workloads from one cloud to another (e.g., from AWS to Azure to GCP). Back then, he guessed that the intercloud problem could be solved around 2015.

We’re at the same point now in 2010 as we were in ’73 with internet.

In short, Vint Cerf understood that the future is multi-cloud and that interoperability standards are key.

There is also a document proving that a working group (IEEE P2302) was trying to develop "the Standard for Intercloud Interoperability and Federation (SIIF)". This was around 2011. What did the proposal look like back then? A few years ago I found this YouTube video with the following sketch:

Workload Mobility and Application Portability

As we can see above, VM or workload mobility was already part of this high-level architecture from the IEEE working group. I also found a NIST paper called "Cloud Computing Standards Roadmap", dated July 2013, which contains some very interesting sections:

Cloud platforms should make it possible to securely and efficiently move data in, out, and among cloud providers and to make it possible to port applications from one cloud platform to another. Data may be transient or persistent, structured or unstructured and may be stored in a file system, cache, relational or non-relational database. Cloud interoperability means that data can be processed by different services on different cloud systems through common specifications. Cloud portability means that data can be moved from one cloud system to another and that applications can be ported and run on different cloud systems at an acceptable cost.

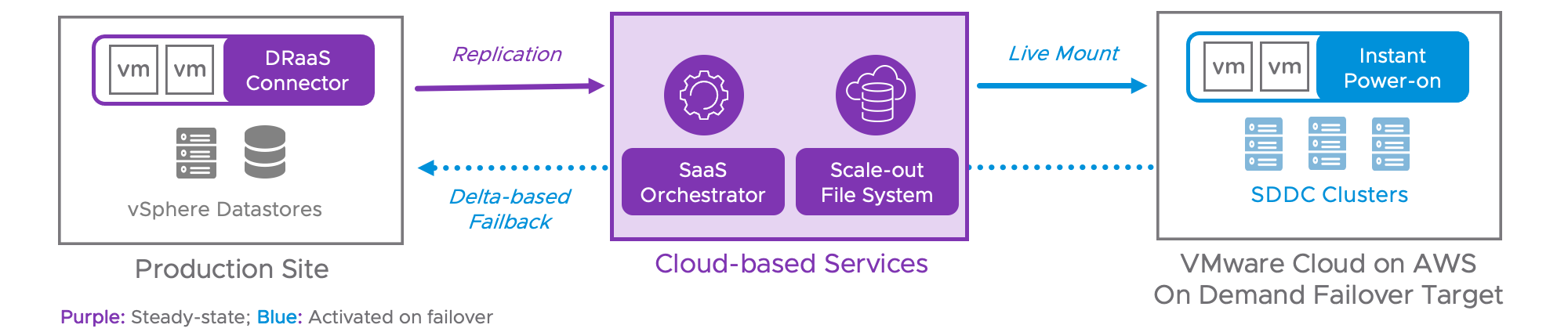

Note: VMware HCX has been available since 2018 and is still the easiest and probably the most cost-efficient way to migrate workloads from one cloud to another.

It is all about the money

Imagine it is March 2014, and you read the following announcement: Cisco is going big – they want to spend $1 billion on the creation of an intercloud

Yes, that really happened. Details can be found in the New York Times archive. At the end of its article, the New York Times even noted that "it's clear that cloud computing has become a very big money game".

Cisco's announcement also talked about money:

Of course, we believe this is going to be good for business. We expect to expand the addressable cloud market for Cisco and our partners from $22Bn to $88Bn between 2013-2017.

In 2016, Cisco retired its intercloud offering because AWS and Microsoft were, and still are, very dominant. AWS posted $12.2 billion in sales for 2016, and Microsoft ended up with almost $3 billion in Azure revenue.

Remember Cisco's estimate of the "addressable cloud market"? In 2018, Gartner put worldwide public cloud spending for 2017 at $145B, well above the $88B Cisco had projected. For 2023, Gartner forecast cloud spending of almost $600 billion.

Data Gravity and Egress Costs

Another topic I want to highlight is "data gravity", a term coined by Dave McCrory in 2010:

Consider Data as if it were a Planet or other object with sufficient mass. As Data accumulates (builds mass) there is a greater likelihood that additional Services and Applications will be attracted to this data. This is the same effect Gravity has on objects around a planet. As the mass or density increases, so does the strength of gravitational pull. As things get closer to the mass, they accelerate toward the mass at an increasingly faster velocity. Relating this analogy to Data is what is pictured below.

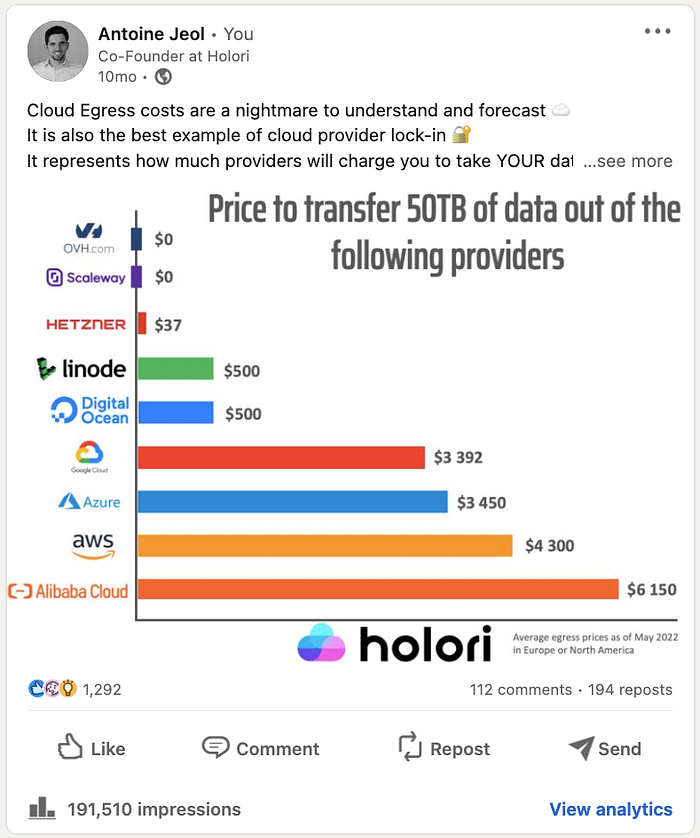

Put data gravity together with egress costs, and it becomes clear why both limit any discussion about workload mobility and application portability:

Source: https://medium.com/@alexandre_43174/the-surprising-truth-about-cloud-egress-costs-d1be3f70d001
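To make the combination of data gravity and egress costs a bit more tangible, here is a minimal Python sketch that estimates what moving a data set out of a cloud might cost. It assumes a single flat per-GB egress rate and an optional free allowance. The function name `estimate_egress_cost`, the $0.09/GB rate, and the 100 GB allowance are hypothetical placeholders, not any provider's actual pricing. Real pricing is usually tiered and depends on region and destination.

```python
def estimate_egress_cost(data_gb: float, price_per_gb: float, free_gb: float = 0.0) -> float:
    """Estimate the cost of moving data_gb out of a cloud.

    Hypothetical model: one flat per-GB rate plus an optional free allowance.
    Real provider pricing is usually tiered and region-dependent.
    """
    if data_gb < 0 or price_per_gb < 0 or free_gb < 0:
        raise ValueError("inputs must be non-negative")
    billable_gb = max(0.0, data_gb - free_gb)  # only data above the allowance is billed
    return billable_gb * price_per_gb


if __name__ == "__main__":
    # Data gravity in numbers: the bill grows linearly with the data's "mass".
    # The $0.09/GB rate and 100 GB allowance are illustrative assumptions only.
    for tb in (1, 10, 100):
        cost = estimate_egress_cost(tb * 1024, price_per_gb=0.09, free_gb=100)
        print(f"{tb:>4} TB -> ${cost:,.2f}")
```

The linear model is deliberately simple. It only illustrates why the more data accumulates in one cloud, the more expensive it becomes to move it (or the applications that depend on it) somewhere else.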

By the way, what happened to “economies of scale”?

The Cloud Paradox

As you should understand by now, topics like costs, lock-in, and failed expectations (technical and commercial) have been discussed for more than a decade. That is why I highlighted NIST's sentence above: Cloud portability means that data can be moved from one cloud system to another and that applications can be ported and run on different cloud systems at an acceptable cost.

Acceptable cost.

While the (public) cloud seems to be the right choice for some companies, we now see another scenario popping up more often: reverse cloud migrations (sometimes called repatriation).

I have customers who tell me that the exact same VM with the exact same business logic costs 5 to 7 times more after they moved it from their private cloud to a public cloud.

Let’s park that and cover the “true costs of cloud” another time. 😀

Public Cloud Services Spend

Looking at Vantage’s report, we can see the following top 10 services on AWS, Azure and GCP ranked by the share of costs:

If they are right and the numbers are true for most enterprises, it means that customers spend most of their money on virtual machines (IaaS), databases, and storage.

What does Gartner say?

Let’s have a look at the most recent forecast called “Worldwide Public Cloud End-User Spending to Reach Nearly $600 Billion in 2023” from April 2023:

All segments of the cloud market are expected to see growth in 2023. Infrastructure-as-a-service (IaaS) is forecast to experience the highest end-user spending growth in 2023 at 30.9%, followed by platform-as-a-service (PaaS) at 24.1%.

Conclusion

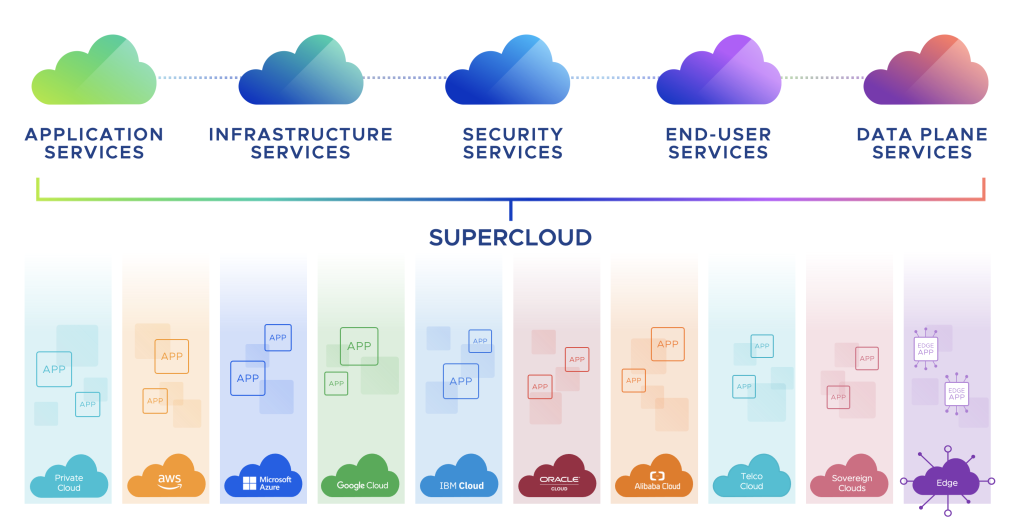

If most companies spend around 30% of their cloud budget on virtual machines, and Gartner predicts that IaaS will keep growing faster than SaaS or PaaS, then a supercloud architecture for IaaS would make a lot of sense. You would have the same technology format across clouds, could reuse the same networking and security policies as well as existing skills, and would benefit from many other advantages too.

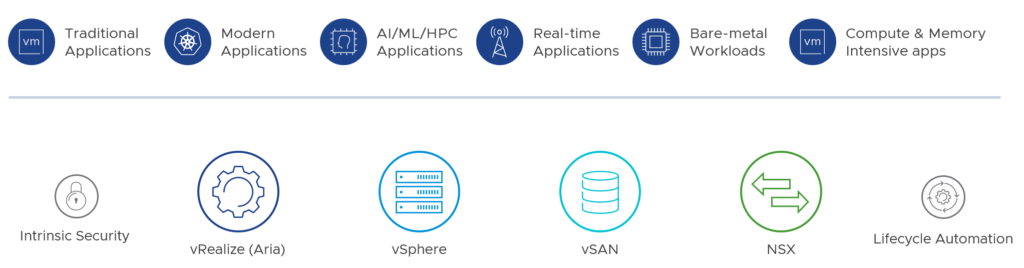

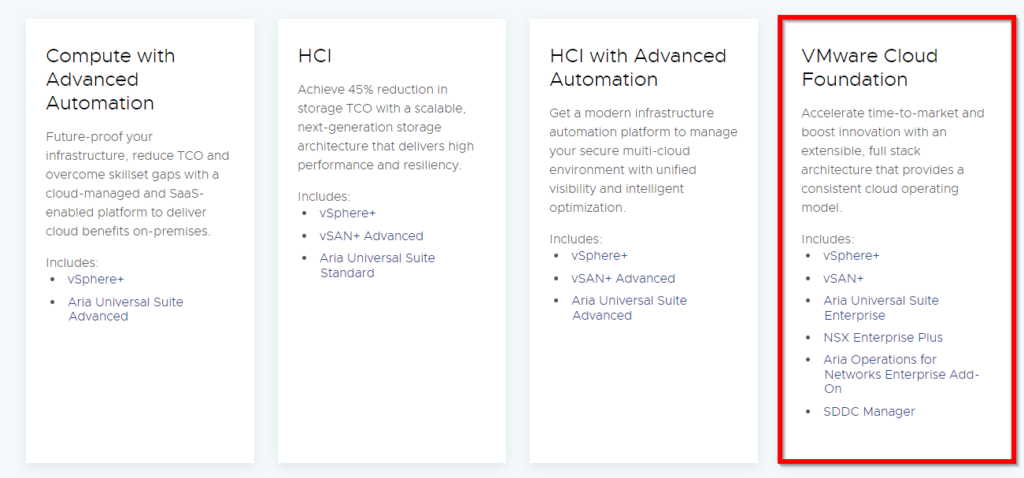

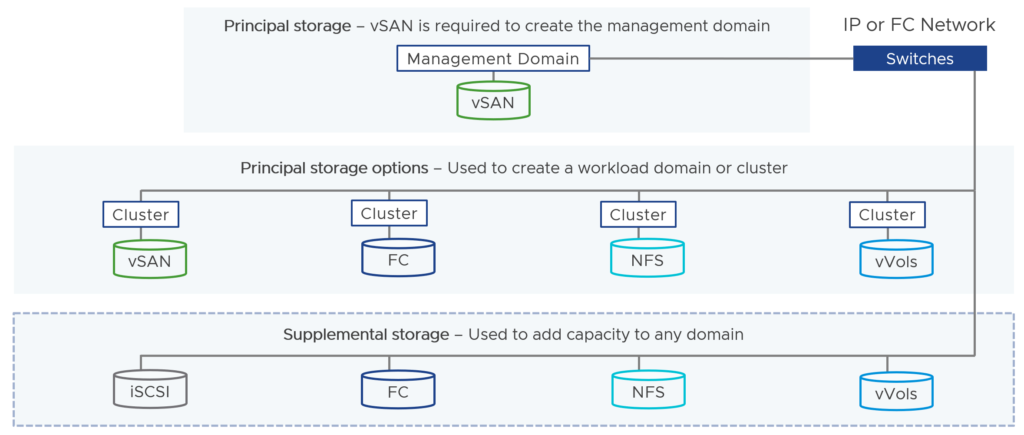

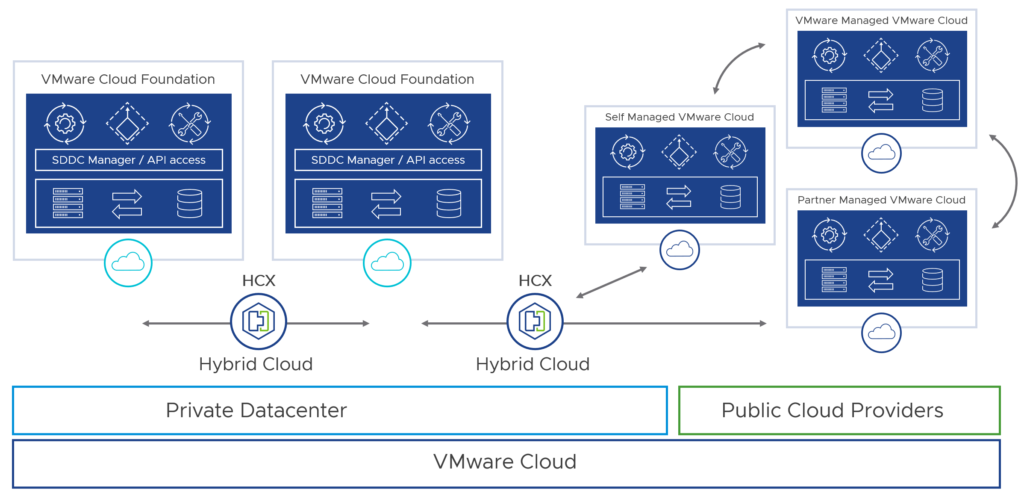

Looking at the VMware Cloud approach, which allows you to run VMware’s software-defined data center (SDDC) stack on AWS, Azure, Google, and many other public clouds, customers could create a seamless hybrid multi-cloud architecture – using the same technology across clouds.

Other VMware products that fall into the supercloud category include Tanzu Application Platform (TAP), the Aria Suite, and Tanzu for Kubernetes Operations (TKO), all of which belong to VMware's Cross-Cloud Services portfolio.

Final Words

I think it is important to understand that we are still in the early days of multi-cloud (that is, of using multiple clouds).

Customers are confused because it took them years to deploy new apps, or move existing ones, to the public cloud. Now, analysts and vendors talk about cloud exit strategies, reverse cloud migrations, repatriations, exploding cloud costs, and so on.

Yes, a supercloud is about a hybrid multi-cloud architecture and a standardized design for building apps and platforms across clouds. But in my opinion, its most important capability is that it makes your IT landscape future-ready at different levels, with different abstraction layers.