Introduction to Alibaba Cloud VMware Solution (ACVS)

VMware’s hybrid and multi-cloud strategy is to run their Cloud Foundation technology stack with vSphere, vSAN and NSX in any private or public cloud including edge locations. I already introduced VMC on AWS, Azure VMware Solution (AVS), Google Cloud VMware Engine (GCVE) and now I would like to briefly summarize Alibaba Cloud VMware Solution (ACVS).

A lot of European companies, including one of my large Swiss enterprise accounts, have defined Alibaba Cloud as strategic for their multi-cloud vision because they do business in China. Alibaba Cloud is the largest cloud computing provider in China and is known for its cloud security, its reliable and trusted offerings and its hybrid cloud capabilities.

In September 2018, Alibaba Cloud (also known as Aliyun), a Chinese cloud computing company that belongs to the Alibaba Group, announced a partnership with VMware to deliver hybrid cloud solutions that help organizations with their digital transformation.

Alibaba Cloud was the first VMware Cloud Verified Partner in China and brings a lot of capabilities and services to a large number of customers in China and Asia. Their global infrastructure currently spans 22 regions and 67 availability zones, with more regions to follow. Outside mainland China you find Alibaba Cloud data centers in Sydney, Singapore, the US, Frankfurt and London.

As this is a first-party offering from Alibaba Cloud, this service is owned and delivered by them (not VMware). Alibaba is responsible for the updates, patches, billing and first-level support.

Alibaba Cloud is among the world’s top 3 IaaS providers according to Gartner and is China’s largest provider of public cloud services. Alibaba Cloud provides industry-leading flexible, cost-effective, and secure solutions. Services are available on a pay-as-you-go basis and include data storage, relational databases, big-data processing, and content delivery networks.

Alibaba Cloud is currently positioned as a Niche Player in the latest Gartner Magic Quadrant for Cloud Infrastructure and Platform Services (CIPS), together with Oracle, IBM and Tencent Cloud.

Note: If you would like to know more about running the VMware Cloud Foundation stack on top of the Oracle Cloud as well, I can recommend the article by Simon Long, who just started to write about Oracle Cloud VMware Solution (OCVS).

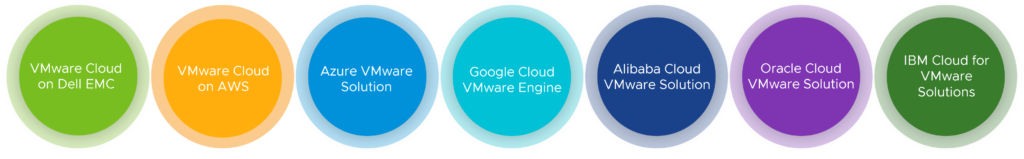

This partnership between VMware and Alibaba Cloud has the same goals as other VMware hybrid cloud solutions like VMC on AWS, OCVS or GCVE: to let enterprises meet their cloud computing needs, to give them the flexibility to move existing workloads easily from on-premises to the public cloud, and to provide high-speed access to the public cloud provider’s native services.

In April 2020, Alibaba Cloud and VMware finally announced the general availability of Alibaba Cloud VMware Solution, initially for the mainland China and Hong Kong regions. This enables customers to seamlessly move existing vSphere-based workloads to the Alibaba Cloud, where VMware Cloud Foundation is running on top of Aliyun’s infrastructure.

As is already common with such VMware-based hybrid cloud offerings, this lets you move from a CapEx-based to an OpEx-based cost model built on subscription licensing.

Joint Development

X-Dragon (Shenlong in Chinese) is a proprietary bare metal server architecture developed by Alibaba Cloud for their cloud computing requirements. Built around a custom X-Dragon MOC card, it offers the direct access to CPU and RAM resources that bare metal servers provide, without virtualization overhead. X-Dragon, the virtualization technology behind Alibaba Cloud Elastic Compute Service (ECS), is now in its third generation. The first two generations were based on Xen and KVM.

VMware works closely together with the Alibaba Cloud engineers to develop a VMware SDDC (software-defined data center based on vSphere and NSX) which runs on this X-Dragon bare metal architecture.

The core of the MOC NIC is the X-Dragon chip. The X-Dragon software system runs on the X-Dragon chip to provide virtual private cloud (VPC) and EBS disk capabilities. It offers these capabilities to ECS instances and ECS bare metal instances through VirtIO-net and VirtIO-blk standard interfaces.

Note: Support for vSAN is still a roadmap item and no date has been committed yet. Because X-Dragon is a proprietary architecture, running vSAN on it requires official certification.

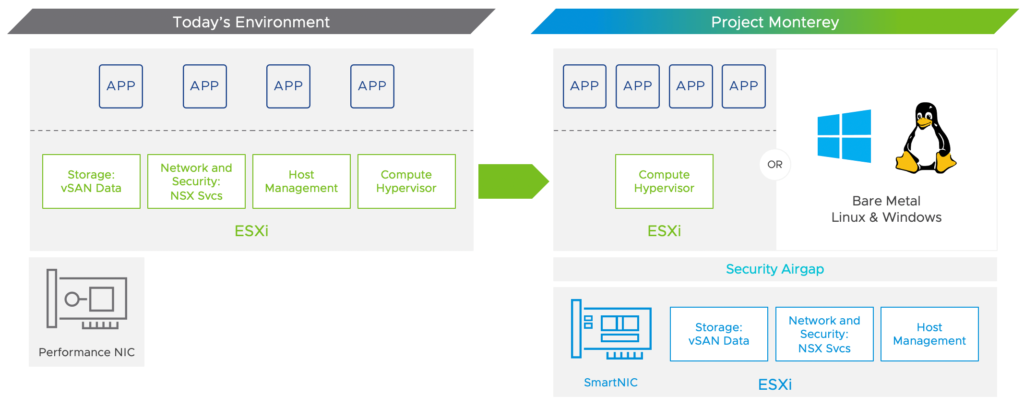

Project Monterey

Have you seen VMware’s announcement at VMworld 2020 about Project Monterey which allows you to run VMware Cloud Foundation on a SmartNIC? For me, this looks similar to the X-Dragon architecture 😉

Use Cases

Data Center extension or retirement. You can scale your data center capacity in the cloud on demand if, for example, you don’t want to invest in your on-premises environment anymore. If you have just refreshed your current hardware, another use case would be the extension of your on-premises vSphere cloud to Alibaba Cloud.

Disaster Recovery and data protection. Here we find different scenarios such as recovery (replication) or backup/archive (data protection) use cases. You can use your ACVS private clouds as a disaster recovery (DR) site for your on-premises workloads. This DR solution would be based on VMware Site Recovery Manager (SRM), which can also be used together with HCX. At the moment Alibaba Cloud offers 9 regions for DR sites.

Cloud migrations or consolidation. If you want to start with a lift & shift approach to migrate specific applications to the cloud, then ACVS is the right choice for you. Maybe you want to refresh your current infrastructure and need to relocate or migrate your workloads in an easy and secure way? Another perfect scenario would be the consolidation of different vSphere-based clouds.

Multicast Support with NSX-T

As with Microsoft Azure and Google Cloud, an Alibaba Cloud ECS instance, or a VPC in general, doesn’t support multicast and broadcast. That is one specific reason why customers need to run NSX-T on top of their public cloud provider’s global cloud infrastructure.

Connectivity Options

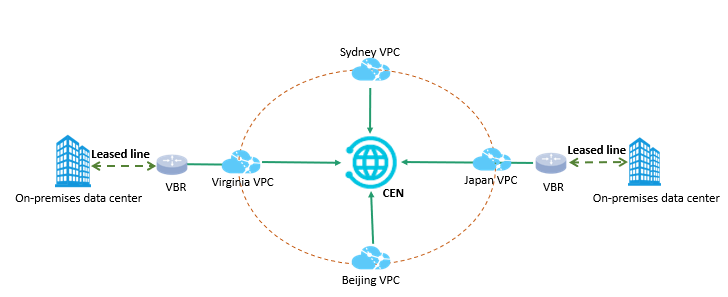

For (multi-)national companies Alibaba Cloud has different enterprise-class networking offerings to connect different sites or regions in a secure and reliable way.

Cloud Enterprise Network (CEN) is a highly-available network built on the high-performance and low-latency global private network provided by Alibaba Cloud. By using CEN, you can establish private network connections between Virtual Private Cloud (VPC) networks in different regions, or between VPC networks and on-premises data centers. The CEN is also available in Europe in Germany (Frankfurt) and UK (London).

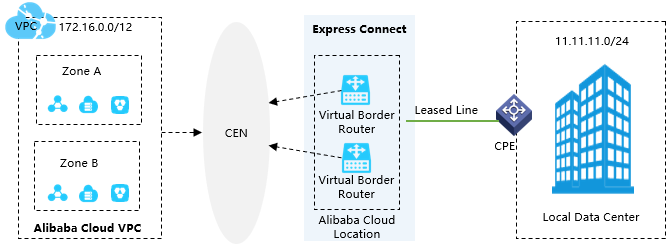

Alibaba Cloud Express Connect helps you build internal network communication channels that feature enhanced cross-network communication speed, quality, and security. If your on-premises data center needs to communicate with an Alibaba Cloud VPC through a private network, you can apply for a dedicated physical connection interface from Alibaba Cloud to establish a physical connection between the on-premises data center and the VPC. Through physical connections, you can implement high-quality, highly reliable, and highly secure internal communication between your on-premises data center and the VPC.

ACVS Architecture and Supported VMware Cloud Services

Let’s have a look at the ACVS architecture below. On the left side you see the Alibaba Cloud with the VMware SDDC stack loaded onto the Alibaba bare metal servers with NSX-T connected to the Alibaba VPC network.

This VPC network allows customers to connect their on-premises network and to have direct access to Alibaba Cloud’s native services.

Customers can use vSphere 7 with Tanzu Kubernetes Grid and can leverage their existing tool set from the VMware Cloud Management Platform, such as vRealize Automation (native integration of vRA with Alibaba Cloud is still a roadmap item) and vRealize Operations.

The right side of the architecture shows the customer data centers, which run as a vSphere-based cloud on-premises, managed either by the customers themselves or as a managed service offering from a service provider. In between, the red lines show the different connectivity options like Alibaba Direct Connect, SD-WAN or VPN connections, together with technologies like NSX-T layer 3 VPN, HCX and Site Recovery Manager (SRM).

To load balance the different application services across the different vSphere-based or native clouds, you can use NSX Advanced Load Balancer (aka Avi) to configure GSLB (Global Server Load Balancing) for high availability reasons.

Because the entire stack on top of Alibaba Cloud’s infrastructure is based on VMware Cloud Foundation, you can expect to run everything in VMware’s product portfolio like Horizon, Carbon Black, Workspace ONE etc. as well.

You can also deploy AliCloud Virtual Edges with VMware SD-WAN by VeloCloud.

Node Specifications

The Alibaba Cloud VMware Solution offering is a little bit special and I hope that I was able to translate the Chinese presentations correctly.

First, you have to choose the number of hosts, which determines the options you get.

1 Host (for testing purposes): vSphere Enterprise Plus, NSX Data Center Advanced, vCenter

2+ Hosts (basic type): vSphere Enterprise Plus, NSX Data Center Advanced, vCenter

3+ Hosts (flexibility and elasticity): vSphere Enterprise Plus, NSX Data Center Advanced, vCenter, (vSAN Enterprise)

Site Recovery Manager, vRealize Log Insight and vRealize Operations need to be licensed separately as they are not included in the ACVS bundle.
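To make the tiers above easier to reason about, here is a minimal Python sketch that maps a host count to the bundle described in this article. It is only an illustration under stated assumptions: the names `tier_for_hosts`, `Tier` and the constants are hypothetical and not part of any Alibaba Cloud or VMware API, I read "2+" as applying to exactly two hosts once the "3+" tier takes over, and the 32-host limit per VPC is the figure quoted in this article.

```python
from dataclasses import dataclass

# Limit per VPC as quoted in this article (assumption: enforced per deployment).
MAX_HOSTS_PER_VPC = 32

# Components listed for every tier in the article.
BASE_BUNDLE = ("vSphere Enterprise Plus", "NSX Data Center Advanced", "vCenter")

# Products the article says must be licensed separately.
SEPARATELY_LICENSED = (
    "Site Recovery Manager",
    "vRealize Log Insight",
    "vRealize Operations",
)


@dataclass(frozen=True)
class Tier:
    """Hypothetical container for a tier name and its bundled components."""
    name: str
    components: tuple


def tier_for_hosts(host_count: int) -> Tier:
    """Return the tier that this article associates with a given host count."""
    if host_count < 1 or host_count > MAX_HOSTS_PER_VPC:
        raise ValueError(
            f"host_count must be between 1 and {MAX_HOSTS_PER_VPC}, got {host_count}"
        )
    if host_count == 1:
        return Tier("testing", BASE_BUNDLE)
    if host_count == 2:
        return Tier("basic", BASE_BUNDLE)
    # vSAN appears in parentheses in the list above because support is still pending.
    return Tier(
        "flexibility and elasticity",
        BASE_BUNDLE + ("vSAN Enterprise (support coming later)",),
    )


if __name__ == "__main__":
    for hosts in (1, 2, 3):
        tier = tier_for_hosts(hosts)
        print(f"{hosts} host(s): {tier.name}: {', '.join(tier.components)}")
    print("Licensed separately:", ", ".join(SEPARATELY_LICENSED))
```

Treat the thresholds as my reading of the presentation rather than an official sizing rule.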

The current ACVS offering has the following node options and specifications (maximum 32 hosts per VPC):

All sixth-generation ECS instances come equipped with Intel® Xeon® Platinum 8269CY processors. These processors were customized based on the Cascade Lake microarchitecture, which is designed for the second-generation Intel® Xeon® Scalable processors. They reach a turbo boost frequency of 3.2 GHz and can provide up to a 30% increase in floating-point performance over the fifth-generation ECS instances.

| Component | Version | License |
| --- | --- | --- |
| vCenter | 7.0 | vCenter Standard |
| ESXi | 7.0 | Enterprise Plus |
| vSAN (support coming later) | n/a | Enterprise |
| NSX Data Center (NSX-T) | 3.0 | Advanced |
| HCX | n/a | Enterprise |

Note: Customers have the possibility to install any VIBs by themselves with full console access. This allows the customer to assess the risk and performance impacts by themselves and install any needed 3rd party software (e.g. Veeam, Zerto etc.).

If you want to know more about how to accelerate your multi-cloud digital transformation initiatives in Asia, you can watch the VMworld presentation from this year. I couldn’t find any other presentation (except the exact same recording on YouTube) and believe that this article is the first publicly available summary of Alibaba Cloud VMware Solution. 🙂