Horizon and Workspace ONE Architecture for 250k Users Part 1

Disclaimer: This article is based on my own thoughts and experience and may not reflect a real-world design for a Horizon/Workspace ONE architecture of this size. The blog series focuses only on the Horizon or Workspace ONE infrastructure part and does not consider other criteria like CPU/RAM usage, IOPS, number of applications, use cases and so on. Please contact your partner or VMware’s Professional Services Organization (PSO) for a consulting engagement.

To my knowledge, no Horizon implementation of this size exists at the time of writing. The architecture and the number of VMs required in the data center have been important topics to me ever since I moved from Citrix Consulting to a VMware pre-sales role. I have always asked myself how VMware Horizon scales beyond 10’000 users.

250’000 users is the current maximum for VMware Horizon 7.8. The goal is to figure out how many Horizon infrastructure servers, such as Connection Servers, App Volumes Managers (AVM), vCenter servers and Unified Access Gateway (UAG) appliances, are needed, and how many pods should be configured and federated with the Cloud Pod Architecture (CPA) feature.

I will create my own architecture first, meaning that I use the sizing and recommendation guides to design a Horizon 7 environment based on my current knowledge, experience and assumptions.

After that I’ll feed the Digital Workspace Designer tool with the necessary information and let this tool create an architecture, which I then compare with my design.

Scenario

This is the scenario I defined and will use for the sizing:

Users: 250’000

Data Centers: 1 (to keep it simple)

Internal Users: 248’000

Remote Users: 2’000

Concurrency Internal Users: 80% (198’400 users)

Concurrency Remote Users: 50% (1’000 users)
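The concurrency figures in the scenario can be checked with a few lines of Python. This is only a sketch of the arithmetic; the variable names are my own and are not part of any VMware tool.

```python
# Scenario figures taken from the list above.
internal_users = 248_000
remote_users = 2_000

# Integer percentages avoid floating-point rounding surprises.
internal_concurrency_pct = 80
remote_concurrency_pct = 50

concurrent_internal = internal_users * internal_concurrency_pct // 100
concurrent_remote = remote_users * remote_concurrency_pct // 100
concurrent_total = concurrent_internal + concurrent_remote

print(concurrent_internal, concurrent_remote, concurrent_total)
```

The total of concurrent users is the number that drives the rest of the sizing.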

Horizon Sizing Limits & Recommendations

This article is based on the current release of VMware Horizon 7 with the following sizing limits and recommendations:

Horizon version: 7.8

Max. number of active sessions in a Cloud Pod Architecture pod federation: 250’000

Active connections per pod: 10’000 VMs max for VDI (8’000 tested for instant clones)

Max. number of Connection Servers per pod: 7

Active sessions per Connection Server: 2’000

Max. number of VMs per vCenter: 10’000

Max. connections per UAG: 2’000

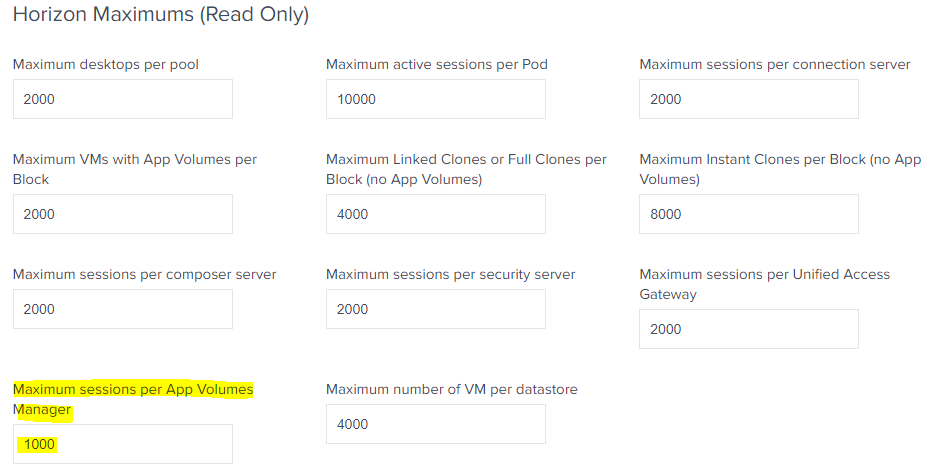

The Digital Workspace Designer lists the following Horizon Maximums:

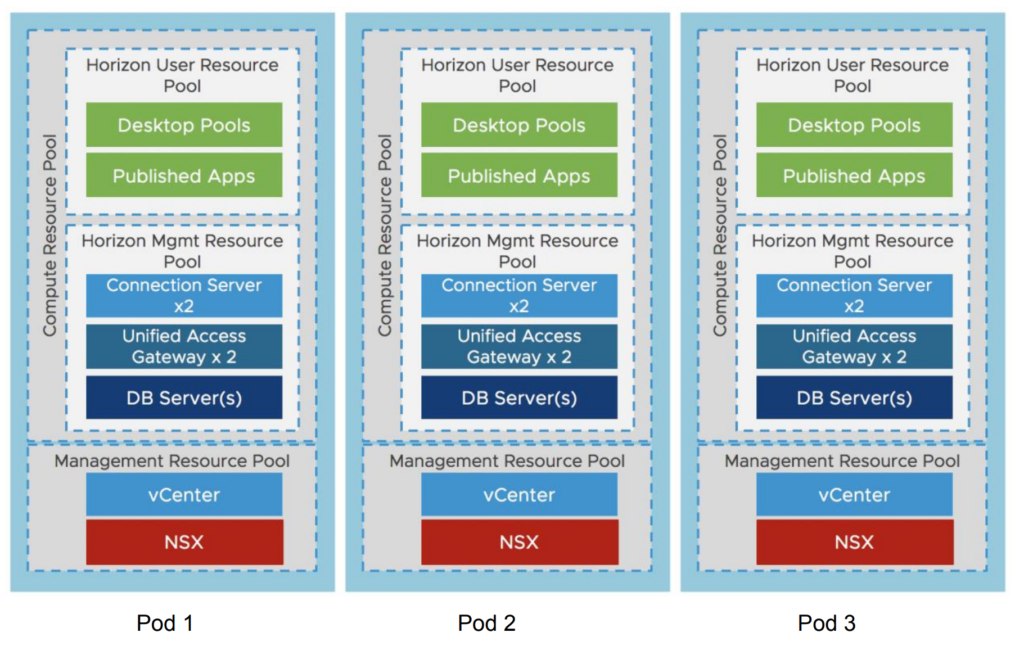

Please read my short article if you are not familiar with the Horizon Block and Pod Architecture.

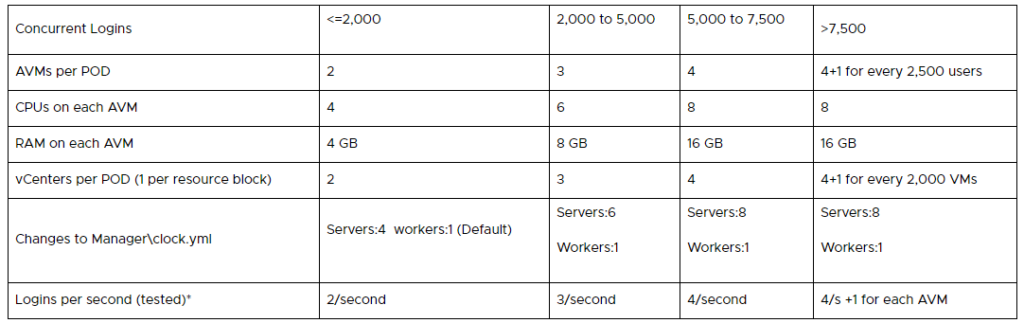

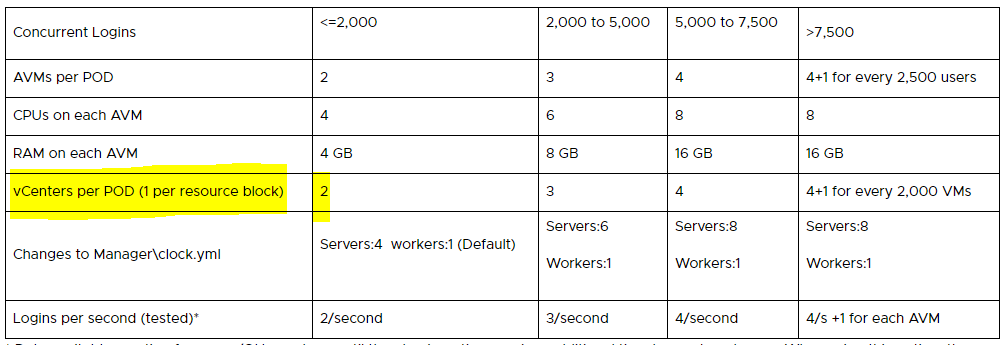

Note: The App Volumes sizing limits and recommendations have been updated recently and no longer follow the rule of thumb that an App Volumes Manager can only handle 1’000 sessions. The new recommendations are based on a “concurrent logins per second” login rate.

Architecture Comparison VDI

Please find below my decisions and those made by the Digital Workspace Designer (DWD) tool:

| Horizon Item | My Decision | DWD Tool | Notes |
|---|---|---|---|
| Number of Users (concurrent) | 199'400 | 199'400 | |
| Number of Pods required | 20 | 20 | |
| Number of Desktop Blocks (one per vCenter) | 100 | 100 | |
| Number of Management Blocks (one per pod) | 20 | 20 | |
| Connection Servers required | 100 | 100 | |
| App Volumes Manager Servers | 80 | 202 | 4+1 AVMs for every 2,500 users |
| vRealize Operations for Horizon | n/a | 22 | I have no experience with vROps sizing |
| Unified Access Gateway required | 2 | 2 | |
| vCenter servers (to manage clusters) | 20 | 100 | Since Horizon 7.7 there is support for spanning vCenters across multiple pods (bound to the limits of vCenter) |
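Most of the VDI numbers in the “My Decision” column follow from dividing the concurrent users by the relevant limit and rounding up. The sketch below is my reconstruction of that arithmetic, not output from the DWD tool. Two inputs are assumptions on my part and are marked as such in the code: one desktop block per 2’000 sessions (which reproduces the 100 blocks) and one spare UAG on top of the calculated minimum (which reproduces the 2 UAGs). The AVM and vROps rows are left out on purpose.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, without going through floats."""
    return -(-a // b)

# Inputs from the scenario and the sizing limits listed in this article.
concurrent_users = 199_400
concurrent_remote_users = 1_000
sessions_per_pod = 10_000
sessions_per_connection_server = 2_000
max_connection_servers_per_pod = 7
vms_per_vcenter = 10_000
connections_per_uag = 2_000

# Assumption: one desktop block per 2'000 sessions.
sessions_per_block = 2_000
# Assumption: one spare UAG (N+1) in addition to the calculated minimum.
spare_uags = 1

pods = ceil_div(concurrent_users, sessions_per_pod)
desktop_blocks = ceil_div(concurrent_users, sessions_per_block)
connection_servers = ceil_div(concurrent_users, sessions_per_connection_server)
connection_servers_per_pod = ceil_div(connection_servers, pods)

# One vCenter per block (classic design) versus one vCenter per 10'000 VMs.
vcenters_one_per_block = desktop_blocks
vcenters_spanning = ceil_div(concurrent_users, vms_per_vcenter)

uags = ceil_div(concurrent_remote_users, connections_per_uag) + spare_uags

print(pods, desktop_blocks, connection_servers, vcenters_spanning, uags)
```

Note that the Connection Servers per pod stay below the limit of 7 per pod in this calculation.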

Architecture Comparison RDSH

Please find below my decisions* and those made by the Digital Workspace Designer (DWD) tool:

| Horizon Item | My Decision | DWD Tool | Notes |
|---|---|---|---|
| Number of Users (concurrent) | 199'400 | 199'400 | |
| Number of Pods required | 20 | 20 | |
| Number of Desktop Blocks (one per vCenter) | 20 | 40 | 1 block per pod since we are limited by 10k sessions per pod, but only have 333 RDSH per pod |
| Number of Management Blocks (one per pod) | 20 | 20 | |
| Connection Servers required | 100 | 100 | |
| App Volumes Manager Servers | 14 | 202 | 4+1 AVMs for every 2,500 users/logins (in this case RDSH VMs, 6'647 RDSH in total) |
| vRealize Operations for Horizon | n/a | 22 | I have no experience with vROps sizing |
| Unified Access Gateway required | 2 | 2 | |
| vCenter servers (to manage resource clusters) | 4 | 40 | Since Horizon 7.7 there is support for spanning vCenters across multiple pods (bound to the limits of vCenter) |

*Max. 30 users per RDSH
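The RDSH host counts used in the table can be derived from the footnote above. A minimal sketch, assuming every RDS host is filled to the maximum of 30 users and the hosts are spread evenly across the 20 pods (the even spread is my simplification):

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, without going through floats."""
    return -(-a // b)

concurrent_users = 199_400
users_per_rdsh = 30   # from the footnote: max. 30 users per RDSH
pods = 20             # unchanged, driven by the 10'000 sessions per pod limit

rdsh_hosts = ceil_div(concurrent_users, users_per_rdsh)
rdsh_hosts_per_pod = ceil_div(rdsh_hosts, pods)

print(rdsh_hosts, rdsh_hosts_per_pod)
```

So the session limit per pod stays the same as for VDI, while the number of VMs per pod drops by a factor of roughly 30.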

Conclusion

VDI

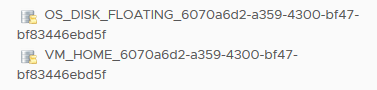

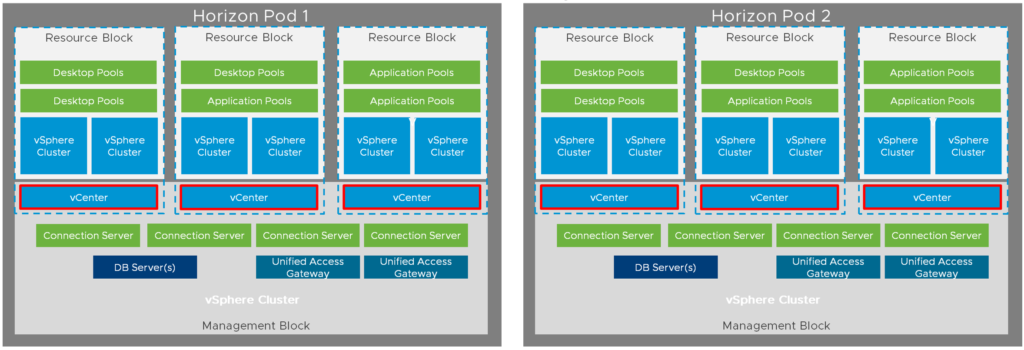

You can see in the table for VDI that I have different numbers for “App Volumes Manager Servers” and “vCenter servers (to manage clusters)”. For the number of AVM servers I used the new recommendations mentioned above. Before Horizon 7.7, the block and pod architecture consisted of one vCenter server per block:

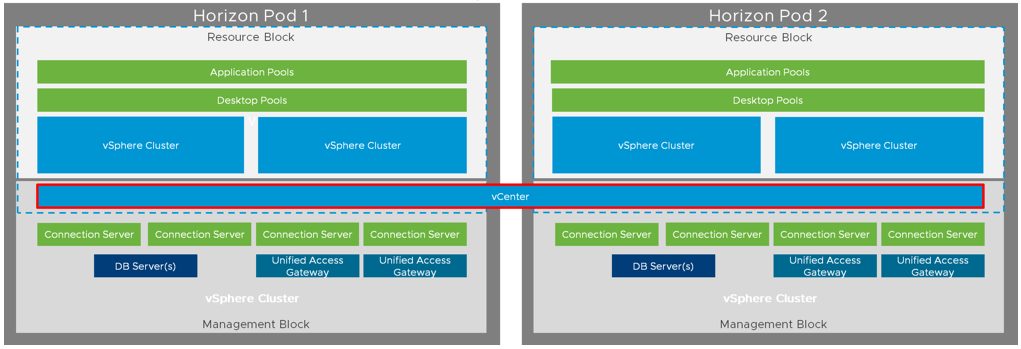

That’s why, I assume, the DWD recommends 100 vCenter servers for the resource clusters. In my case I would only use 20 vCenter servers (yes, this increases the failure domain), because Horizon 7.7 and above allows one vCenter to span multiple pods while respecting the limit of 10’000 VMs per vCenter. So my assumption here is that, even though the image below does not show it, it should be possible and supported to use one vCenter server per pod:

RDSH

If you consult the reference architecture and the recommendations for VMware Horizon, you might think that one important piece of information is missing:

The details for a correct sizing and the required architecture for RDSH!

We know that each Horizon pod can handle 10’000 sessions, which equals 10’000 VDI desktops (VMs) if you use VDI. But for RDSH we need fewer VMs, in this case only 6’647.

So the number of pods does not change, because the “sessions per pod” limit still applies. But there is no official limit on the number of resource blocks per pod. For VDI, you would have one block and one Connection Server for every 2’000 VMs or sessions to minimize the impact of a resource block failure. I think this is not needed here. Otherwise you would bloat the Horizon infrastructure with additional servers, which increases operational and maintenance effort and, obviously, the costs.

But where are the 40 resource blocks of the DWD tool coming from? Is it because the recommendation is to have at least two blocks per pod to minimize the impact of a resource block failure? If yes, then it would make sense, because in my calculation you would have 9’971 RDSH user sessions per pod/block, and with the DWD calculation only 4’986 (half) per resource block.
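To make the difference in failure domain concrete, here is a small sketch of the sessions per resource block for both designs. It assumes, as the figures above suggest, that session capacity is counted as RDS hosts times 30 users and rounded up; that rounding is my reading, not a documented formula.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, without going through floats."""
    return -(-a // b)

rdsh_hosts = 6_647
users_per_rdsh = 30
session_capacity = rdsh_hosts * users_per_rdsh

my_blocks = 20    # one resource block per pod
dwd_blocks = 40   # two resource blocks per pod

sessions_per_block_mine = ceil_div(session_capacity, my_blocks)
sessions_per_block_dwd = ceil_div(session_capacity, dwd_blocks)

print(sessions_per_block_mine, sessions_per_block_dwd)
```

With two blocks per pod, a single block failure affects about half as many sessions.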

*Update 28/07/2019*

I have been informed by Graeme Gordon from technical marketing that the 40 resource blocks and vCenters are coming from here:

I didn’t see that because I expected that we could go higher for an RDSH-only implementation.

App Volumes and RDSH

The biggest difference when we compare the architectures needed for VDI and RDSH is the number of recommended App Volumes Manager servers. Because “concurrent logins at a one per second login rate” for the AVM sizing was not clear to me, I asked our technical marketing team for clarification and received the following answer:

With RDSH we assign AppStacks to the computer objects rather than to the user. This means the AppStack attachment and filter drive virtualization process happens when the VM is booted. There is still a bit of activity when a user authenticates to the RDS host (assignment validation), but it’s considerably less than the attachment process for a typical VDI user assignment.

Because of this difference, the 1/second/AVM doesn’t really apply for RDSH only implementations.

With this background, I did the math with 6’647 logins and neglected the assignment validation activity, which brings me to only 4 AVMs to serve the 6’647 RDS hosts.

Disclaimer

Please be reminded again that these are only calculations to get an idea of how many servers/VMs of each Horizon component are needed for a 250k user (~200k CCU) installation. I didn’t consider any disaster recovery requirements, which means that my calculations give the minimum number of servers required for a VDI- or RDSH-based Horizon implementation.