Multi-Tenancy on VMware Cloud Foundation with vRealize Automation and Cloud Director

In my article “VMware Cloud Foundation And The Cloud Management Platform Simply Explained”, I wrote about why customers need a VMware Cloud Foundation technology stack and what a VMware cloud management platform is.

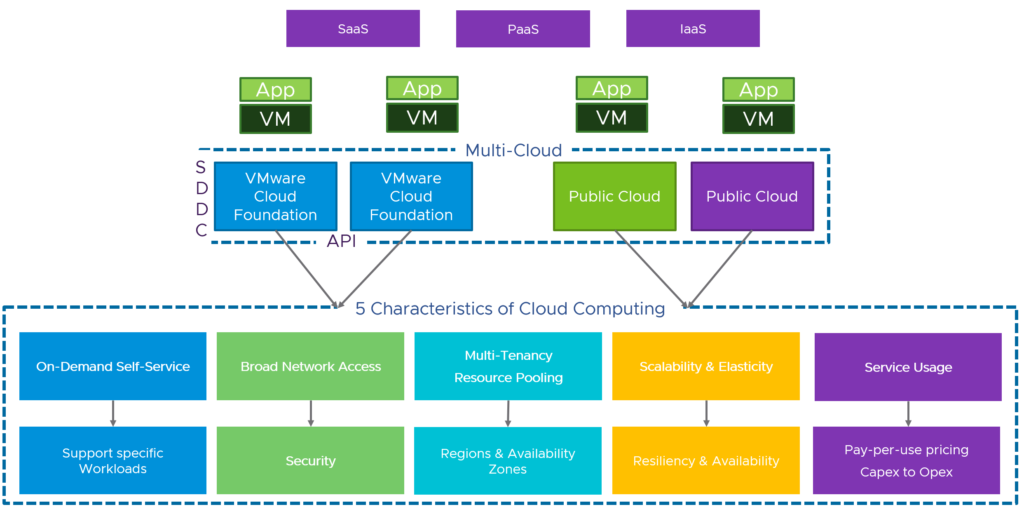

One of those reasons, and one of the essential characteristics of a cloud computing model that I mentioned, is resource pooling.

The National Institute of Standards and Technology (NIST) defines resource pooling with the following words:

“The provider’s computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. There is a sense of location independence in that the customer generally has no control or knowledge over the exact location of the provided resources but may be able to specify location at a higher level of abstraction (e.g., country, state, or data center).”

This time I would like to focus on multi-tenancy and how you can achieve it on top of VMware Cloud Foundation (VCF) with Cloud Director (formerly known as vCloud Director) and vRealize Automation, both of which can be part of a VMware cloud management platform (CMP).

Multi-Tenancy

Multi-tenancy means different things to different people, and there are many definitions of it.

If we start from the top of an IT infrastructure, we have application or software multi-tenancy, where a single instance of an application serves multiple tenants. In the past, this instance even ran on the same virtual or physical server. In this case the multi-tenancy feature is built into the software, which is commonly accessed by a group of users with specific permissions. Each tenant gets a dedicated or isolated share of this application instance.

Coming from the bottom of the data center, multi-tenancy describes the isolation of resources (compute, storage) and networks to deliver applications. The best examples here are (cloud) service providers.

Their goal is to create and provide virtual data centers (VDC) or virtual private clouds (VPC) for different tenants, aka customers, on top of the same physical data center infrastructure. Normally, the right VMware solution for service providers with this requirement would be Cloud Director, but that may no longer be completely true since the release of vRealize Automation 8.x.

To make it easier for all of us, I’ll call Cloud Director and vCloud Director “vCD” from now on.

VMware Cloud Director (formerly vCloud Director)

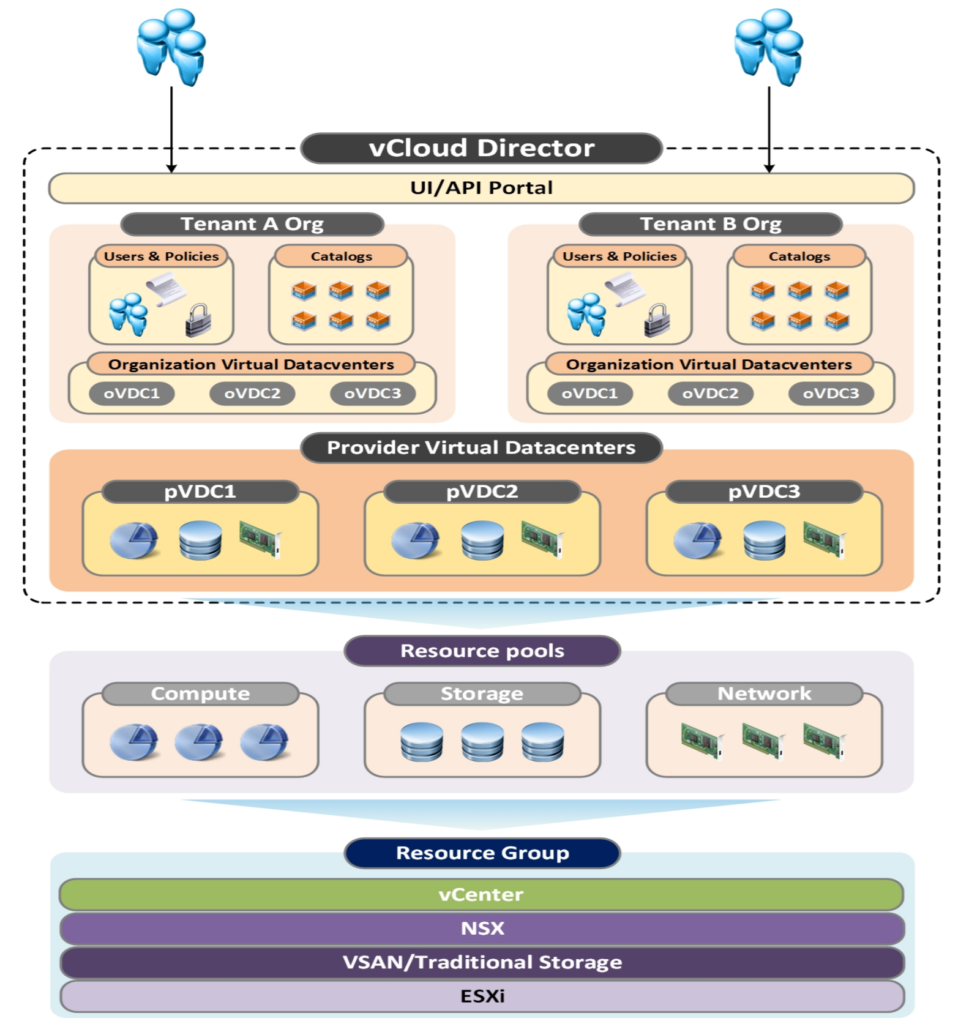

Cloud Director is a product exclusively for cloud service providers via the VMware Cloud Provider Program (VCPP). Originally released in 2010, it enables service providers (SPs) to provision SDDC (Software-Defined Data Center) services as complete virtual data centers. vCD also keeps resources from different tenants isolated from each other.

Within vCD a unit of tenancy is called Organization VDC (OrgVDC). It is defined as a set of dedicated compute (CPU, RAM), storage and network resources. A tenant can be bound to a single OrgVDC or can be composed of multiple Organization VDCs. This is typically known as Infrastructure as a Service (IaaS).

A provider virtual data center (PVDC) is a grouping of compute, storage, and network resources from a single vCenter Server instance. Multiple organizations/tenants can share provider virtual data center resources.
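To make the relationship between PVDC and OrgVDC a bit more tangible, here is a minimal Python sketch. It is not the vCD API: the class names, fields, and the simple "no overcommit" capacity check are my own assumptions for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class OrgVdc:
    """Hypothetical model of an Organization VDC (the unit of tenancy)."""
    name: str
    organization: str
    cpu_ghz: float
    memory_gb: int


@dataclass
class ProviderVdc:
    """Hypothetical model of a PVDC backed by a single vCenter Server."""
    name: str
    vcenter: str
    cpu_ghz: float
    memory_gb: int
    org_vdcs: list[OrgVdc] = field(default_factory=list)

    def allocate(self, org_vdc: OrgVdc) -> None:
        """Carve an OrgVDC out of this PVDC (assumption: no overcommitment)."""
        used_cpu = sum(v.cpu_ghz for v in self.org_vdcs)
        used_mem = sum(v.memory_gb for v in self.org_vdcs)
        if (used_cpu + org_vdc.cpu_ghz > self.cpu_ghz
                or used_mem + org_vdc.memory_gb > self.memory_gb):
            raise ValueError(f"{self.name} has no capacity left for {org_vdc.name}")
        self.org_vdcs.append(org_vdc)

    def organizations(self) -> set[str]:
        """Return the organizations (tenants) sharing this PVDC."""
        return {v.organization for v in self.org_vdcs}
```

Allocating OrgVDCs for two different organizations from the same `ProviderVdc` reflects the point that multiple tenants can share provider virtual data center resources, while each OrgVDC stays a separate object.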

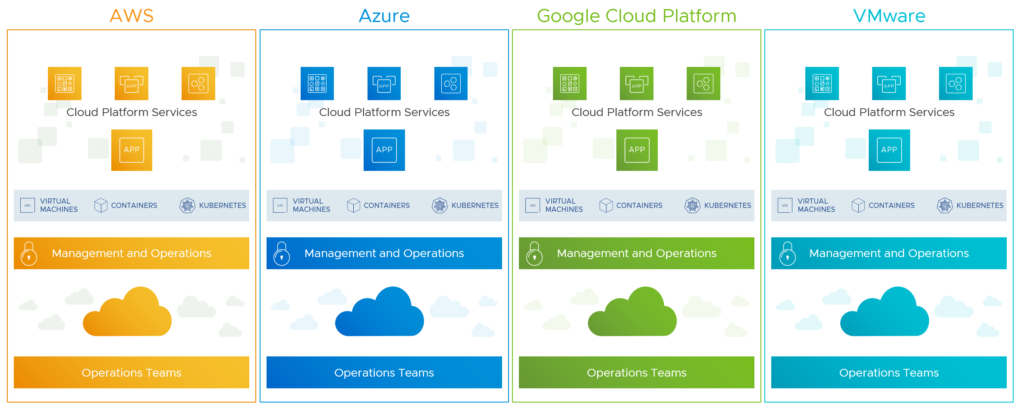

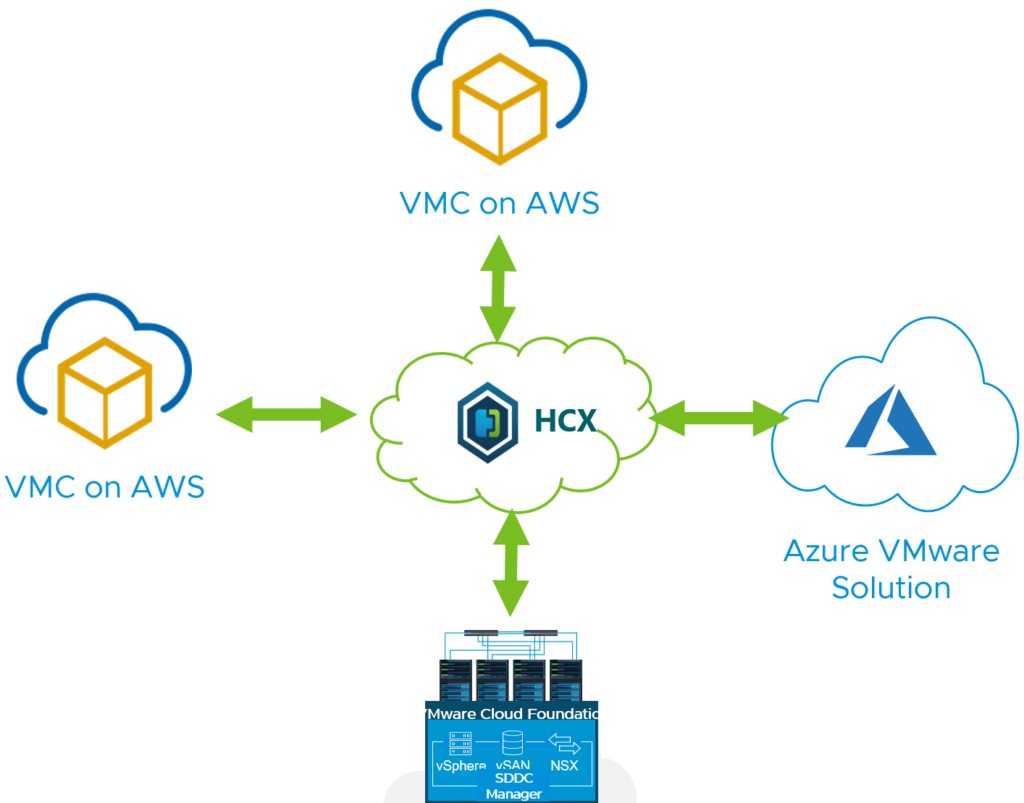

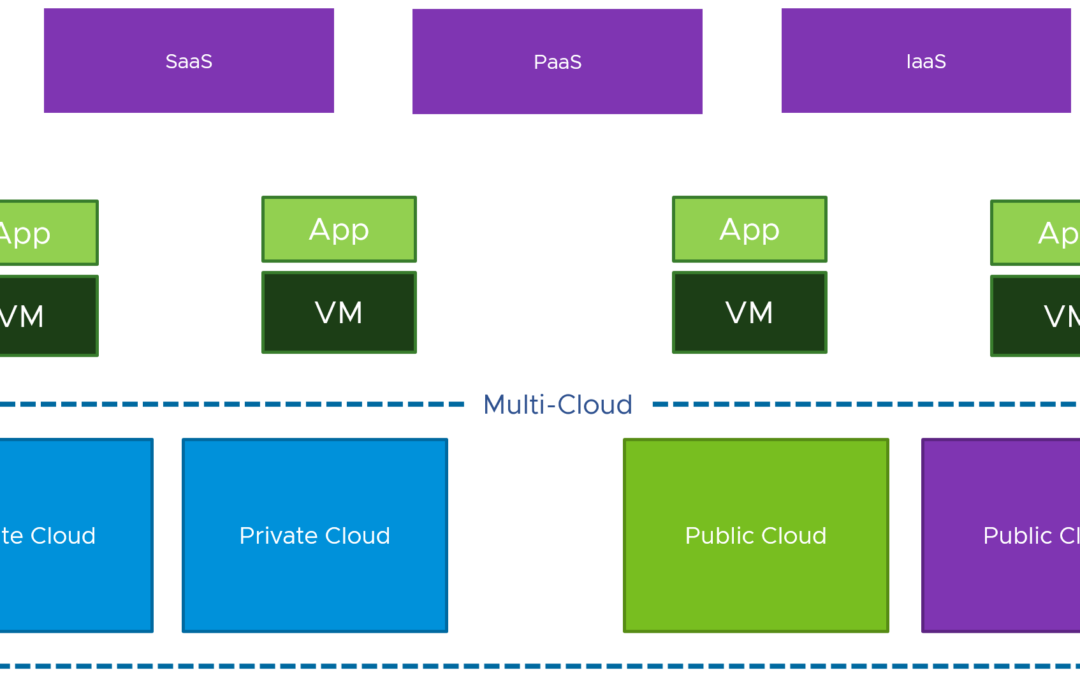

A lot of customers and VCPP partners have now started to offer their cloud services (IaaS, PaaS, SaaS etc.) based on VMware Cloud Foundation, for private and hybrid cloud scenarios but also in the public cloud as a managed cloud service (VMware Cloud on AWS, Azure VMware Solution, Google Cloud VMware Engine, Alibaba Cloud VMware Solution and more).

Important: I assume that you are familiar with VCF, its core components (ESXi, vSAN, NSX, SDDC Manager) and its architecture models (with the standard architecture as the preferred one).

Cloud Director components are currently not part of the VCF lifecycle automation, but it is a roadmap item!

Cloud Director Resource Hosting Models

vCD offers multiple hosting models:

- In the shared hosting model, multiple tenant workloads run all together on the same resource groups without any performance assurance.

- In the reserved hosting model, performance of workloads is assured by resource reservation.

- In the physical hosting model, hardware is dedicated to a single tenant and performance is assured by the allocated hardware.
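The differences between the three hosting models can be summarized in a small Python sketch. The traits come from the list above; the `pick_model` selection rule and all names are my own simplification, not something vCD exposes.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class HostingModel(Enum):
    SHARED = "shared"
    RESERVED = "reserved"
    PHYSICAL = "physical"


@dataclass(frozen=True)
class HostingTraits:
    performance_assured: bool
    dedicated_hardware: bool
    assured_by: Optional[str]  # what backs the performance assurance, if any


# Traits as described in the list of hosting models.
TRAITS = {
    HostingModel.SHARED: HostingTraits(False, False, None),
    HostingModel.RESERVED: HostingTraits(True, False, "resource reservation"),
    HostingModel.PHYSICAL: HostingTraits(True, True, "allocated hardware"),
}


def pick_model(needs_assured_performance: bool,
               needs_dedicated_hardware: bool) -> HostingModel:
    """Hypothetical rule of thumb: choose the least exclusive model that fits."""
    if needs_dedicated_hardware:
        return HostingModel.PHYSICAL
    if needs_assured_performance:
        return HostingModel.RESERVED
    return HostingModel.SHARED
```

For example, `pick_model(True, False)` returns `HostingModel.RESERVED`, because performance must be assured but the tenant does not need its own hardware.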

Tenant Using Shared Hosting on VCF Workload Domain

In this use case, a tenant is using shared hosting backed by a VMware Cloud Foundation workload domain, which is mapped to a provider VDC.

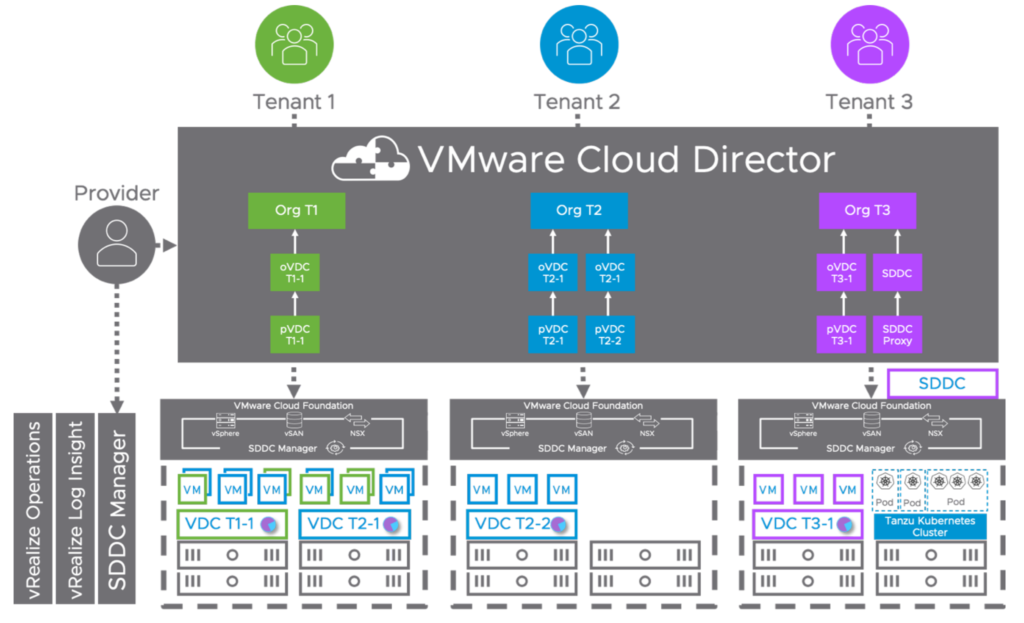

Tenant Using Shared Hosting and Reserved Hosting on Multiple VCF Workload Domains

This use case describes a customer using shared and reserved hosting backed by multiple VCF workload domains. Here, each cluster has a single resource pool mapped to a single PVDC.

Tenant Using Physical Hosting and Central Point of Management (CPOM)

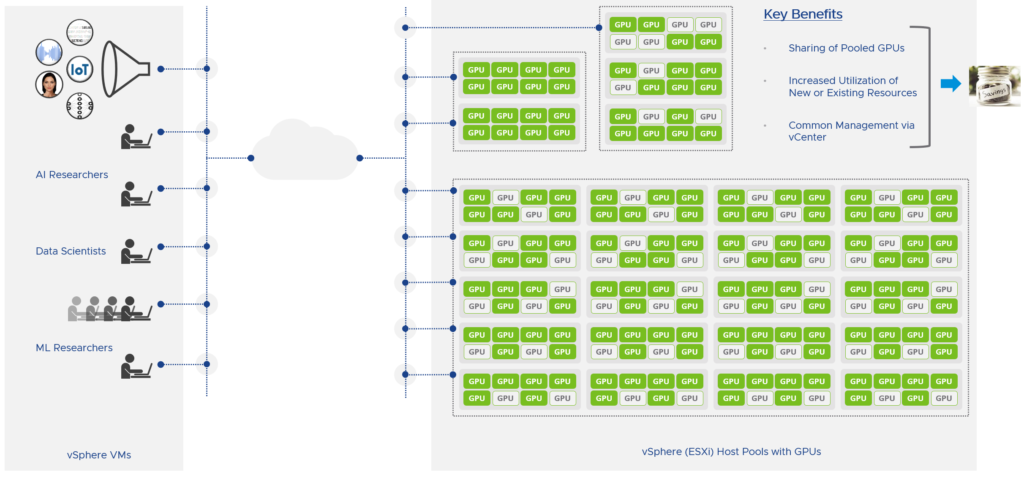

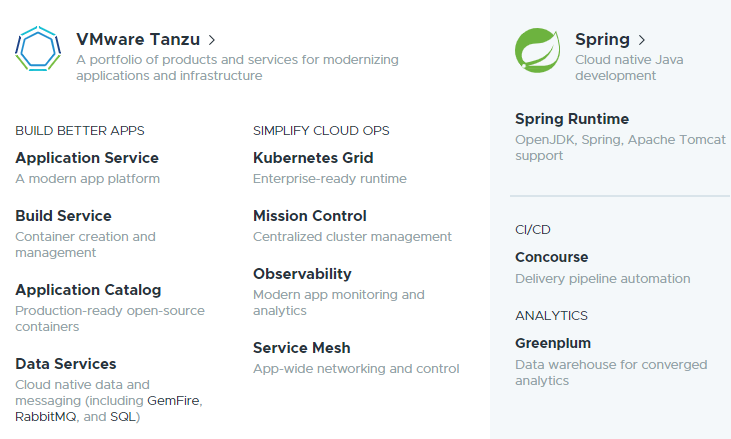

The last example shows a single customer using physical hosting. You will notice that there is also a vSphere with Kubernetes workload domain. VMware Cloud Foundation automates the installation of vSphere with Kubernetes (Tanzu), which makes it incredibly easy to deploy and manage.

You can see that there is an “SDDC” box on top of the Kubernetes Cluster vCenter, which is attached to the “SDDC Proxy” entity. vCD can act as an HTTP/S proxy server between tenants and the underlying vSphere environment in VMware Cloud Foundation. An SDDC proxy is an access point to a component from an SDDC, for example, a vCenter Server instance, an ESXi host, or an NSX Manager instance.

vCD becomes the central point of management (CPOM) in this case, and the customer gets a completely dedicated SDDC with vCenter access.

Note: Since vCD 9.7 it is possible to present for example a vCenter Server instance securely to a tenant’s organization using the Cloud Director user interface. This is how you could build your own VMC-on-AWS-like cloud offering!

All 3 Tenants Together

Finally, we put it all together. In the first use case we can see that different customers are sharing resources from a single PVDC. We can also see that resources from a single vCenter can be split across different provider virtual data centers, and that we can mix and match multi-tenant workload domains and workload domains offering dedicated private clouds.

Cloud Director Service and VMware Cloud on AWS

If you don’t want to extend or operate your own data center or cloud infrastructure anymore but still want to provide a managed service to multiple customers, there are options available that are backed by VMware Cloud Foundation as well.

Since October 2020, Cloud Director Service (CDS) has been globally available. It delivers multi-tenancy to VMware Cloud on AWS for managed service providers (MSPs).

VMware sees not only new, but also existing VCPP partners moving towards a mixed-asset portfolio, where their cloud management platform consists of a VCPP and an MSP (VMware SaaS offerings) contract. This allows them, for example, to run vCD on-premises for their current customers and to onboard new tenants in the public cloud with CDS and VMC on AWS.

Enterprise Multi-Tenancy with vRealize Automation

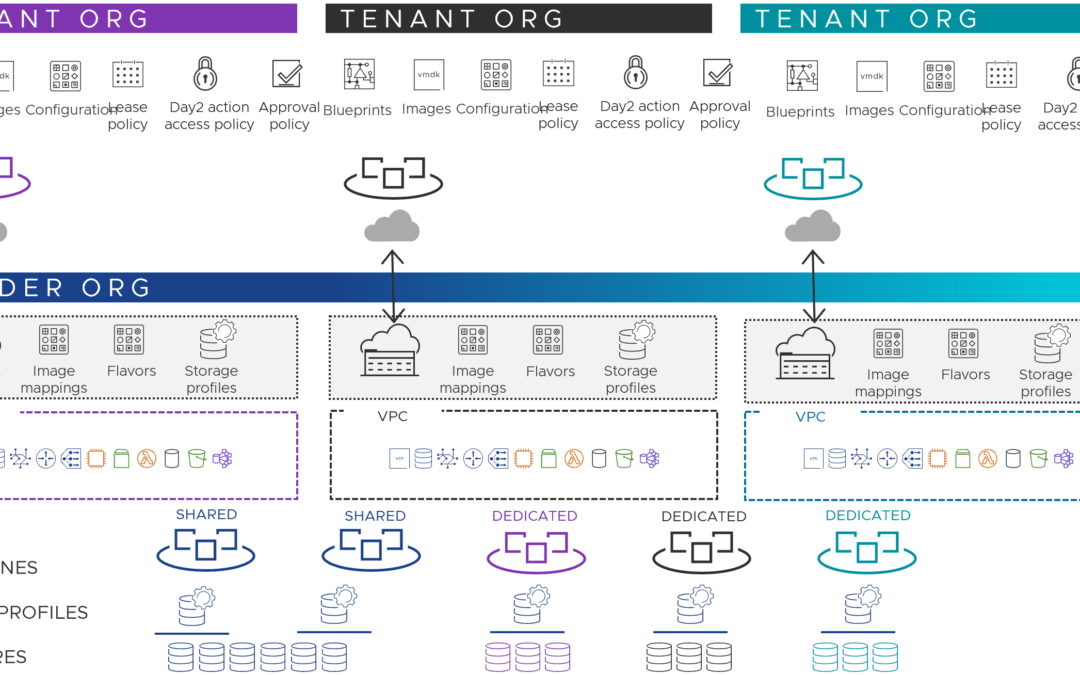

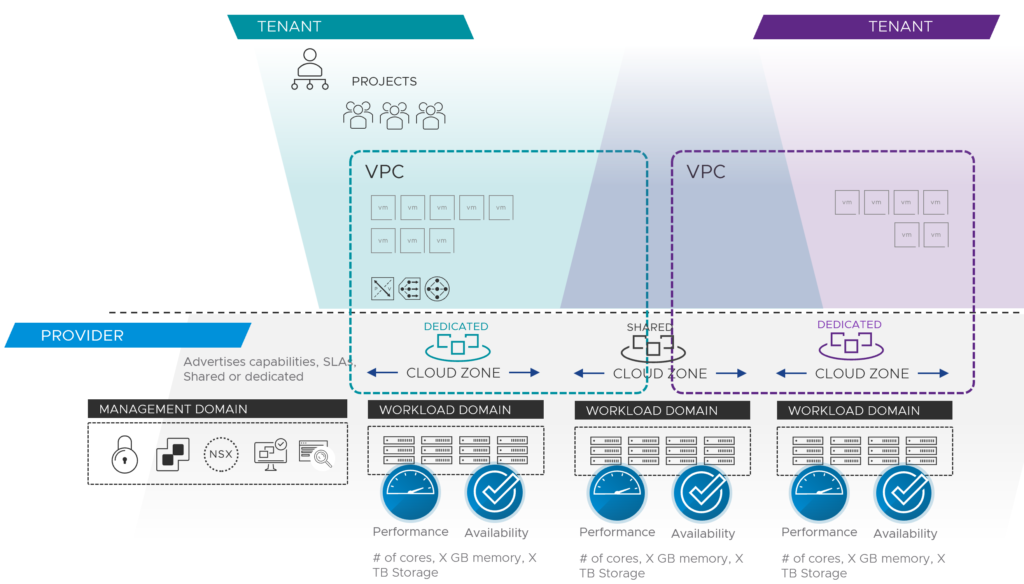

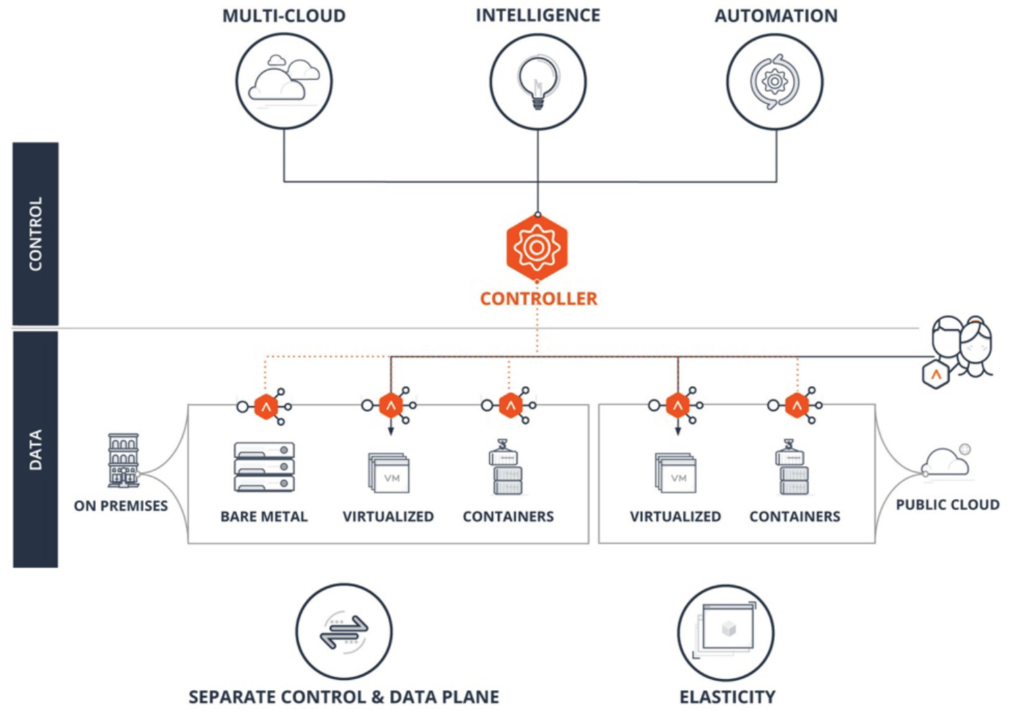

With the release of vRealize Automation 8.1 (vRA) VMware offered support for dedicated infrastructure multi-tenancy, created and managed through vRealize Suite Lifecycle Manager. This means vRealize Automation enables customers or IT providers to set up multiple tenants or organizations within each deployment.

Providers can set up multiple tenant organizations and allocate infrastructure. Each tenant manages its own projects (team structures), resources and deployments.

Enabling tenancy creates a new Provider (default) organization. The Provider Admin creates new tenants, adds tenant admins, sets up directory synchronization, and adds users. Tenant admins can also control directory synchronization for their tenant and grant users access to services within their tenant. Additionally, tenant admins configure policies, governance, cloud zones, profiles, and access to content and provisioned resources within their tenant. A single shared SDDC or separate SDDCs can be used among tenants, depending on available resources.
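The split of responsibilities between the Provider Admin and tenant admins can be sketched as a simple permission check. This is only my simplified reading of the paragraph above, not vRealize Automation's actual role model; the action names and the `is_allowed` function are hypothetical.

```python
from enum import Enum
from typing import Optional


class Role(Enum):
    PROVIDER_ADMIN = "provider_admin"
    TENANT_ADMIN = "tenant_admin"


# Actions taken from the description above (identifiers are made up).
PROVIDER_ACTIONS = {
    "create_tenant",
    "add_tenant_admin",
    "setup_directory_sync",
    "add_user",
}
TENANT_ACTIONS = {
    "control_directory_sync",
    "grant_service_access",
    "configure_policies",
    "configure_cloud_zones",
    "configure_profiles",
}


def is_allowed(role: Role, action: str,
               actor_tenant: Optional[str] = None,
               target_tenant: Optional[str] = None) -> bool:
    """Return True if the role may perform the action on the target tenant."""
    if role is Role.PROVIDER_ADMIN:
        return action in PROVIDER_ACTIONS
    if role is Role.TENANT_ADMIN:
        # Assumption: tenant admins only ever act within their own tenant.
        return (action in TENANT_ACTIONS
                and actor_tenant is not None
                and actor_tenant == target_tenant)
    return False
```

With this sketch, a tenant admin of `tenant-a` may configure cloud zones in `tenant-a`, but not in `tenant-b`, and cannot create new tenants at all.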

With vRealize Automation 8.2, provider administrators got the ability to share infrastructure by creating and assigning Virtual Private Zones (VPZ) to tenant organizations.

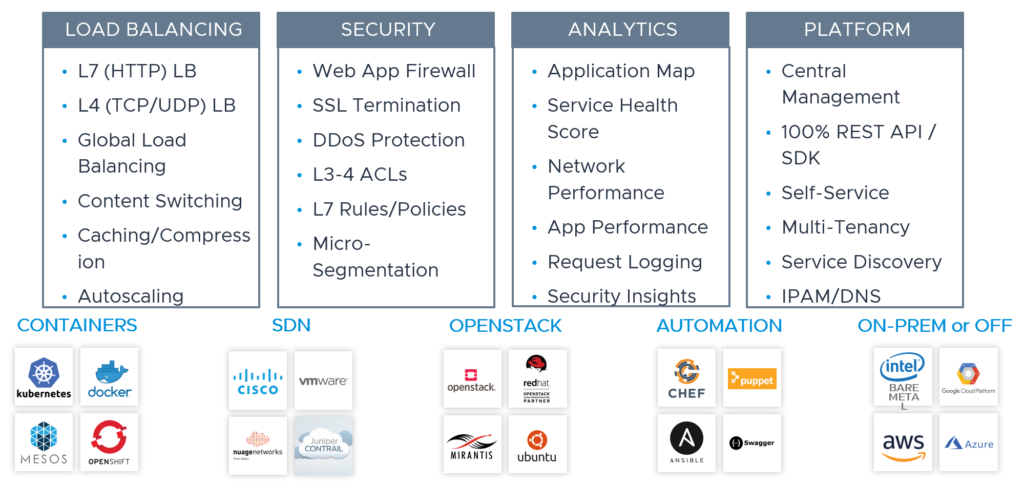

Think of VPZs as a kind of container of infrastructure capacity and services which can be defined and allocated to a Tenant. You can add unique or shared cloud accounts, with associated compute, flavors, images, storage, networking, and tags to each VPZ. Each component offers the same configuration options you would see for a standalone configuration.
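If it helps, you can picture a VPZ roughly like the following Python data structure. This is a conceptual sketch only: the field names, the example values, and the `can_deploy` helper are assumptions of mine and do not mirror the vRealize Automation API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VirtualPrivateZone:
    """Hypothetical model of a VPZ as a container of capacity and services."""
    name: str
    cloud_accounts: list[str]
    flavors: dict[str, tuple[int, int]]  # flavor name -> (vCPUs, memory in GB)
    images: dict[str, str]               # image name -> template identifier
    tags: set[str] = field(default_factory=set)


def can_deploy(vpz: VirtualPrivateZone, flavor: str, image: str) -> bool:
    """A request fits the VPZ only if both flavor and image are defined in it."""
    return flavor in vpz.flavors and image in vpz.images


# Example VPZ that a provider admin might assign to a tenant (made-up values).
vpz_gold = VirtualPrivateZone(
    name="vpz-gold",
    cloud_accounts=["vcenter-wld01"],
    flavors={"small": (2, 4), "large": (8, 32)},
    images={"ubuntu": "tmpl-ubuntu-2004"},
    tags={"tier:gold"},
)
```

A tenant that has been assigned `vpz_gold` could request a `small` `ubuntu` machine, while a request for an image that is not part of the VPZ would be rejected by `can_deploy`.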

vRealize Automation and VMware Cloud Foundation

With the fairly new multi-tenancy and VPZ capabilities, a new consumption model can be built on top of VCF. You (the provider) would map the cloud zones (compute resources on vSphere or, for example, AWS) to a VCF workload domain.

The provider sets these cloud zones up for their customers and provides dedicated or shared infrastructure backed by Cloud Foundation workload domains.
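As a rough illustration of "dedicated or shared", the following Python sketch maps cloud zones to workload domains and then checks how many tenants end up on each domain. All zone, domain, and tenant names are hypothetical, and the classification logic is my own simplification.

```python
from collections import defaultdict

# Cloud zone -> VCF workload domain backing it (all names hypothetical).
ZONE_TO_DOMAIN = {
    "cz-shared-01": "wld-shared",
    "cz-tenant-a": "wld-tenant-a",
}

# Tenant -> cloud zones the provider has set up for it.
TENANT_ZONES = {
    "tenant-a": ["cz-shared-01", "cz-tenant-a"],
    "tenant-b": ["cz-shared-01"],
}


def classify_domains(zone_to_domain, tenant_zones):
    """Label each workload domain 'dedicated' (one tenant) or 'shared'."""
    tenants_by_domain = defaultdict(set)
    for tenant, zones in tenant_zones.items():
        for zone in zones:
            tenants_by_domain[zone_to_domain[zone]].add(tenant)
    return {
        domain: "dedicated" if len(tenants) == 1 else "shared"
        for domain, tenants in tenants_by_domain.items()
    }
```

With the example data above, `classify_domains(ZONE_TO_DOMAIN, TENANT_ZONES)` labels `wld-shared` as shared (both tenants use it) and `wld-tenant-a` as dedicated.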

This combination would allow you to build an enterprise VPC construct (like AWS for example), a logically isolated section of your provider cloud.

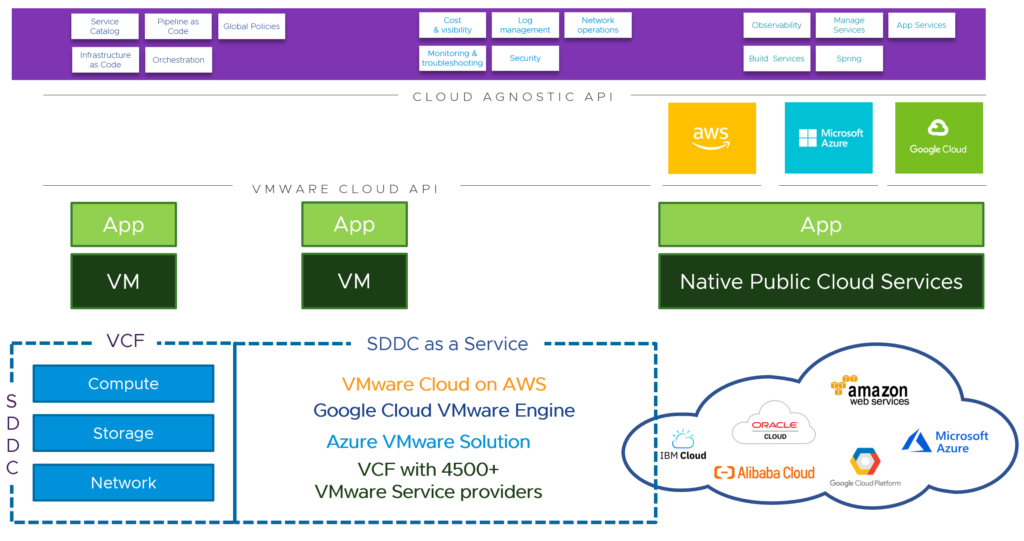

SDDC Manager Integration and VMware Cloud Foundation (VCF) Cloud Account

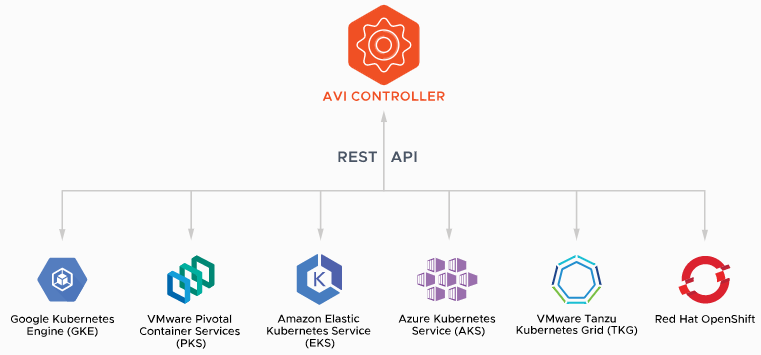

Since the vRA 8.2 release, customers are also able to configure an SDDC Manager integration and onboard workload domains as VMware Cloud Foundation cloud accounts into the VMware Cloud Assembly service.

VMware Cloud Director or vRealize Automation?

Are you wondering whether vRealize Automation could replace existing vCD installations, or whether both cloud management platforms can do the same thing?

I can assure you that you can provide a self-service provisioning experience with both solutions and that you can provide any technology or cloud service “as a service”. Both can be backed by Cloud Foundation, have some form of integration with it (vRA), and can be built according to a VMware Validated Design (VVD).

vCD is known as a service provider solution, whereas vRA is more common in enterprise environments. VMware has VCPP partners that use Cloud Director for their external customers and vRealize Automation for their internal IT and customers.

If you are looking for a “cloud broker” and Infrastructure as Code (IaC) because you also want to provision workloads on AWS, Azure or GCP, then vRealize Automation is the better solution, since vCD doesn’t offer this deep integration and these deployment options yet.

It also depends on your multi-tenancy needs. If, for example, you only chose vCD in the past because of the OrgVDC and resource pooling features, vRealize Automation would be enough and could replace vCD in this case.

It is also very important to understand what your current customer onboarding process and operational model look like:

- How do you want to create a new tenant?

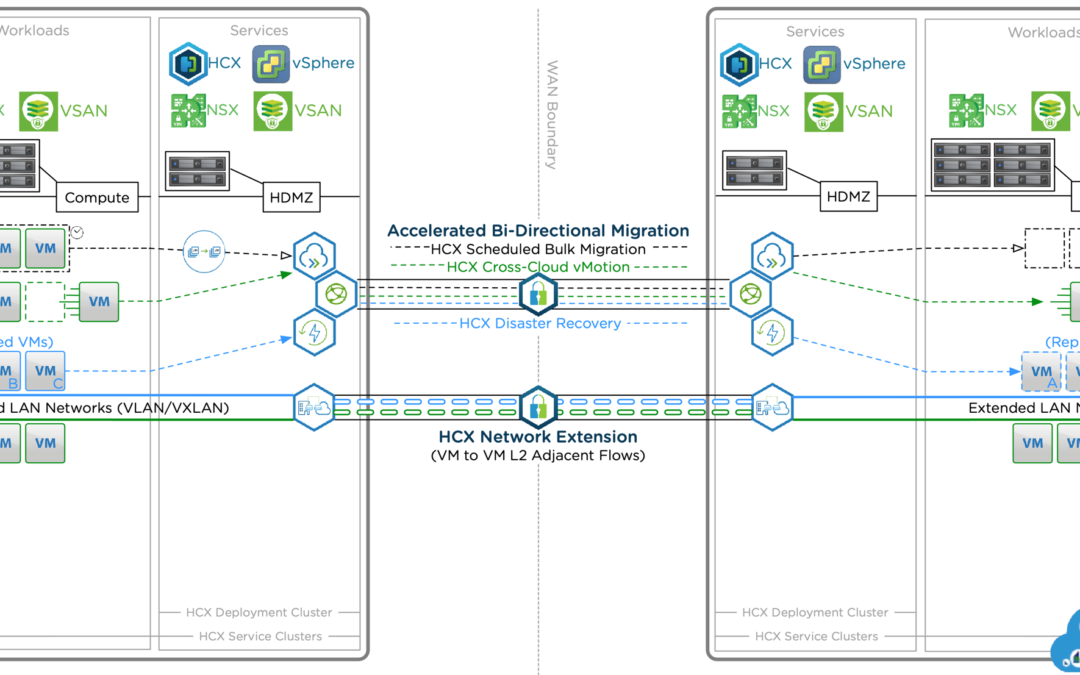

- How do you want to onboard/migrate existing customer workloads to your provider infrastructure?

- Do you need versioning of deployments or templates?

- Do customers require access to the virtual infrastructure (e.g. vCenter or OrgVDC) or do you just provide SaaS or PaaS?

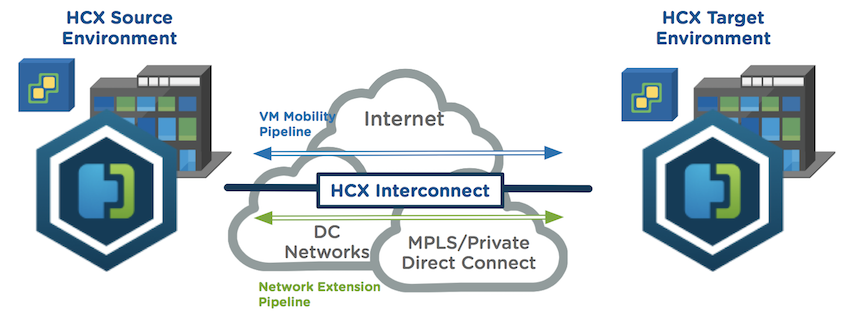

- Do customers need a VPN or hybrid cloud extension into your provider cloud?

- How would you onboard non-vSphere customers (Hyper-V, KVM) to your vSphere-based cloud?

- Does your customer rely on other clouds like AWS or Azure?

- How do you do billing for your vSphere-based cloud or multi-cloud environment?

- What is your Kubernetes/container strategy?

- And 100 other things 😉

There are so many factors and criteria to talk about that would influence such a decision. There is no right or wrong answer to the question of whether it should be VMware Cloud Director or vRealize Automation. Use what makes sense.

Which could also be a combination of both.
