DevSecOps with VMware Tanzu – Intrinsic Security for a Modern Application Supply Chain

VMware has talked a lot about intrinsic security in the past. The term was mostly used to describe the strategy and capabilities behind the Carbon Black portfolio (EDR), complemented by the advanced threat prevention from NSX (NDR), which together form the VMware XDR vision.

I see similarities between intrinsic security and the workouts I do in the gym. My goal is to build more strength and power, and to become healthier in general. To gain more muscle and to be more time efficient, I have chosen compound exercises. I am not a fan of isolation exercises, which target a single muscle group. Our body has a lot of joints for different movements, and I think it’s just natural to use multiple muscle groups and joints during a specific exercise.

Therefore, when you perform compound exercises, you involve different muscles to complete the movement. This improves your intermuscular coordination. In addition, as everyone would tell you, these exercises improve your core strength and let your body become a single unit.

While doing weight training, it is very important to use the proper technique and equipment. Otherwise, the risk for injuries and vulnerabilities increases.

This is what intrinsic security means to me! And I think it is very relevant to understand this when talking about DevSecOps.

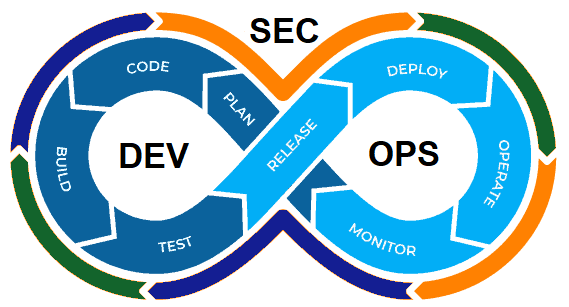

Understanding DevSecOps

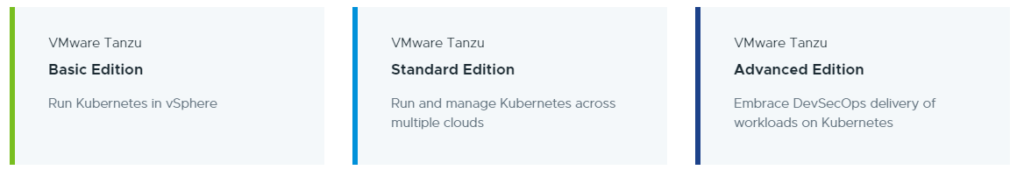

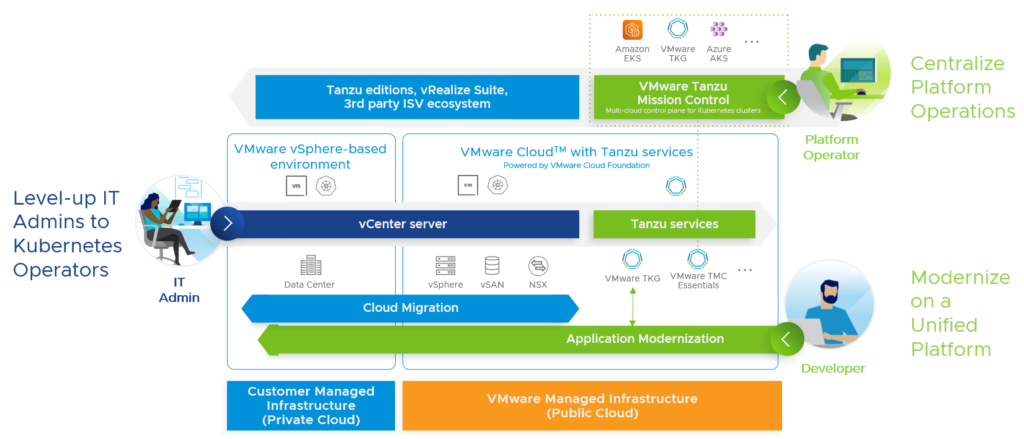

For VMware, talking to developers and talking about DevOps started in 2019, when they presented VMware Tanzu for the first time at VMworld. The ideas and innovation behind the name “Tanzu” should bring developers and IT operators closer together for collaboration.

DevOps is the combination of different practices, tools and philosophies that should help an organization to deliver applications and services at a higher pace. In the example above, it would mean that application developers and operations teams no longer work in isolated silos. They become one team, a single unit. But technology plays a very important role in supporting the success of the new mindset and culture!

DevOps is about efficiency and the automation of manual tasks or processes. You want to become fast, flexible and efficient. When you put security at the center of this, we start talking about DevSecOps. You want to know if one of your muscles or body parts becomes weak (defective) or vulnerable.

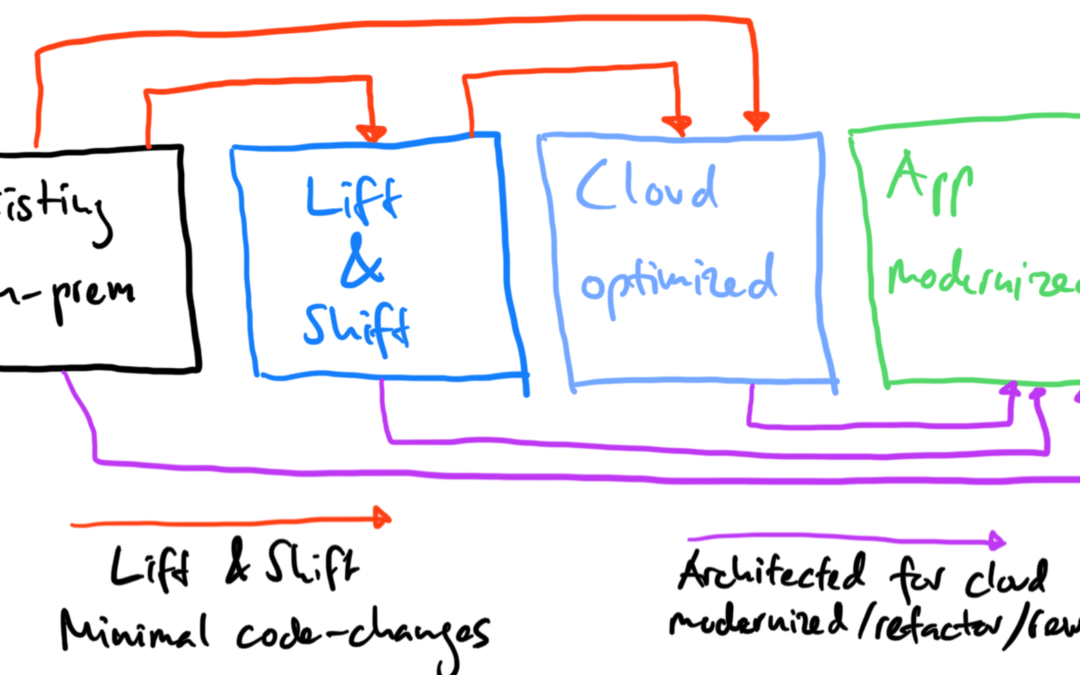

Depending on where you are right now on this application modernization journey, doing DevSecOps could mean a huge cultural and fundamental change to how you develop applications and do IT operations.

For me, DevSecOps is not about bringing security tools together from different teams and technologies. If DevOps and DevSecOps mean that you must change your mindset, then it is maybe also about time to consider the importance of new technology choices.

If DevSecOps means that you put security in the center of a DevOps- or container-centric environment, then security must become an intrinsic part of a modern application supply chain.

The VMware Tanzu portfolio has a lot of products and services to bring developers, operations and security teams together.

Where do we start? We need to “shift left”, which means we need to integrate security early in the application lifecycle.

Code – Spring Framework

Before you can deliver an application to your customer, you need to develop it, you need to code. Application frameworks are a very effective approach for developing more secure and optimized applications.

Frameworks help you write code faster and more efficiently. Not only can a framework save your developers a lot of coding effort, but it also comes with pre-defined templates. These templates incorporate best practices and help you simplify the overall application architecture.

Why is this important? To achieve better security or a more secure cloud native application, it makes sense to standardize and automate. Automation is key for security. Standardization makes it easier to understand or reuse code. You can write all the code yourself, but the chances are high that someone else did parts of your work already. Less variability reduces complexity and therefore enhances security.

There is the open-source Spring Framework, for example, which uses Java as the underlying language, and Steeltoe for .NET. Both projects are managed by VMware and millions of developers use them.

What happens next? You would now run your continuous integration (CI) process (integration tests, unit tests) and then you are ready to package or build your application.

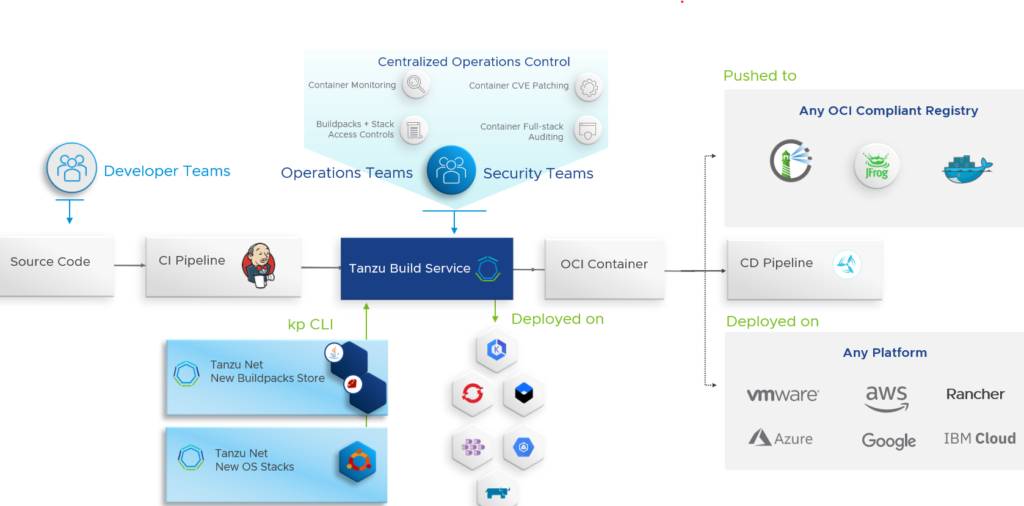

Build – Tanzu Build Service (TBS)

So, your code is now good for release. If you want to deploy your application to a Kubernetes environment, then you need a secure, portable and reproducible build that can be checked for security vulnerabilities, and you need an easy way to patch those vulnerabilities.

How are you going to build the container image that your application is packaged into? A lot of customers and vendors have a Dockerfile-based approach.

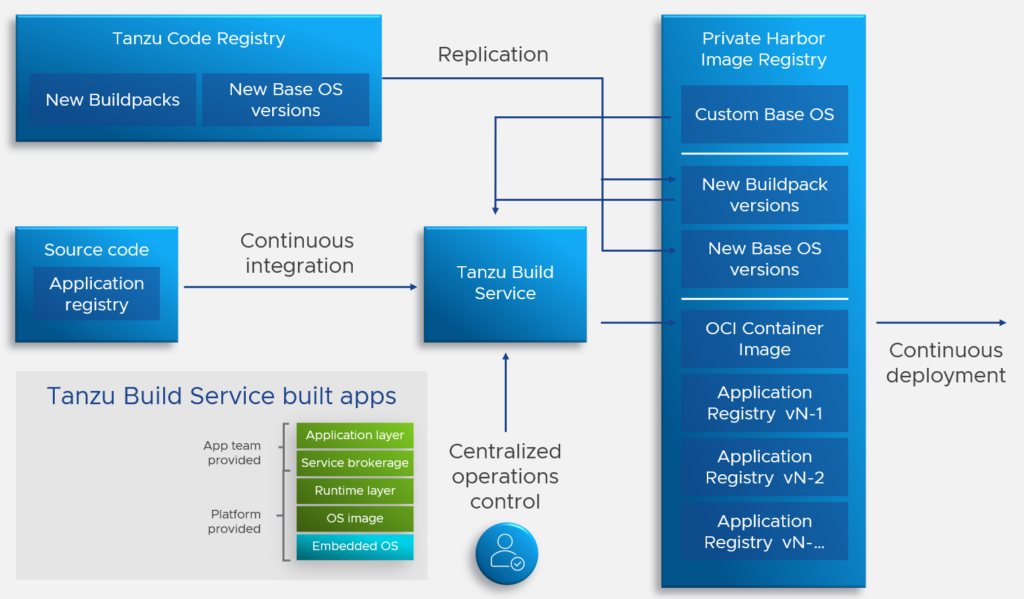

VMware recommends Tanzu Build Service (TBS), which uses Tanzu Buildpacks to turn application source code into container images. Tanzu Buildpacks are based on the open-source Cloud Native Buildpacks project, a CNCF project. So, no Dockerfiles.

TBS is constantly looking for changes in your source code and then automatically builds an image based on that. This means that with TBS you don’t need any advanced knowledge of container packaging formats, and you don’t need to know how to optimally construct a container creation script for a given programming language.

Tanzu Build Service knows all the images you have built and understands all the dependencies and components you have used. If something changes, your image is going to be rebuilt automatically and then stored in a registry of your choice. More about the registry in a second.

What happens if a vulnerability comes out and one of your libraries, operating systems or components is affected? TBS would patch this vulnerability and all the affected downstream container images would be updated automatically.
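To make the automatic rebuild idea more tangible, here is a minimal Python sketch. It only illustrates the principle of tracking which images depend on which components. It is not how TBS is implemented, and the image names, component names and the `images_to_rebuild` helper are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical inventory: image name -> components (OS stack, runtime,
# libraries) that the image was built from.
IMAGE_DEPENDENCIES: Dict[str, Set[str]] = {
    "shop/frontend": {"ubuntu-stack", "nodejs-runtime"},
    "shop/orders": {"ubuntu-stack", "java-runtime", "spring-boot"},
    "shop/reports": {"alpine-stack", "python-runtime"},
}


def images_to_rebuild(inventory: Dict[str, Set[str]], patched: Set[str]) -> List[str]:
    """Return, sorted by name, every image that uses at least one patched component."""
    return sorted(name for name, deps in inventory.items() if deps & patched)


if __name__ == "__main__":
    # A fix for the shared OS stack affects every image built on top of it.
    print(images_to_rebuild(IMAGE_DEPENDENCIES, {"ubuntu-stack"}))
    # prints ['shop/frontend', 'shop/orders']
```

Because the inventory knows what every image is made of, a single patched component is enough to identify all downstream images that need a new build.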

Imagine how happy your CISO would be about this way of building secure container images! 🙂

Build – Harbor

We have now pushed our container image to a container repository, a so-called registry. VMware uses Harbor (open-source cloud native registry by VMware, donated to the CNCF in 2018) as an enterprise-grade storage for container images. Additionally, Harbor provides static analysis of vulnerabilities in images through open-source projects like Trivy and Clair.
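What you do with scan results matters as much as the scan itself. The following Python sketch shows one possible severity gate that blocks an image when its report contains findings at or above a threshold. It does not call Harbor, Trivy or Clair, and the `Finding` class, the sample report and the placeholder identifiers are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List

# Severity levels ordered from least to most serious.
SEVERITY_ORDER = ["LOW", "MEDIUM", "HIGH", "CRITICAL"]


@dataclass(frozen=True)
class Finding:
    cve_id: str    # placeholder identifier, not a real CVE
    package: str
    severity: str  # one of SEVERITY_ORDER


def blocking_findings(findings: List[Finding], threshold: str) -> List[Finding]:
    """Return the findings whose severity is at or above the threshold."""
    minimum = SEVERITY_ORDER.index(threshold)
    return [f for f in findings if SEVERITY_ORDER.index(f.severity) >= minimum]


def is_deployable(findings: List[Finding], threshold: str = "HIGH") -> bool:
    """An image is deployable only if no finding reaches the threshold."""
    return not blocking_findings(findings, threshold)


# Hypothetical scan report for a single image.
SAMPLE_REPORT = [
    Finding("CVE-0000-0001", "openssl", "CRITICAL"),
    Finding("CVE-0000-0002", "zlib", "LOW"),
]

if __name__ == "__main__":
    print(is_deployable(SAMPLE_REPORT))           # False: one CRITICAL finding
    print(is_deployable(SAMPLE_REPORT[1:]))       # True: only a LOW finding left
```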

We have now developed our applications and stored our packaged images in our Harbor registry. What else do we need?

Build – VMware Application Catalog (VAC)

Developers are not going to build everything by themselves. Other services like databases or caching are needed to build the application as well, and there is a lot of well-known, pre-packaged open-source software freely available online. This brings additional security risks, because it gives malicious actors the opportunity to publish container images that contain vulnerabilities.

How can you mitigate this risk and reduce the chance for a critical application outage or breach?

In 2019, VMware acquired Bitnami, which delivers and maintains a catalog of 130+ pre-packaged and ready-to-use open-source application components that are “continuously maintained and verifiably tested for use in production environments”.

This catalog is known as VMware Application Catalog (VAC, formerly also known as Tanzu Application Catalog). As a SaaS offering, VAC provides your organization with a customizable private collection of open-source software and services that can automatically be placed in your private container image registry, in this case your Harbor registry.

Example apps that are supported today:

| Language Runtimes | Databases | App Components | Developer Tools | Business Apps |
| --- | --- | --- | --- | --- |
| Node.js | MySQL | Kafka | Artifactory | WordPress |
| Python | PostgreSQL | RabbitMQ | Jenkins | Drupal |
| Ruby | MariaDB | TensorFlow | Redmine | Magento |
| Java | MongoDB | Elasticsearch | Harbor | Moodle |

How does it work?

There are two product features that I would like to highlight:

- Build-time CVE scan reports for container images using Trivy

- Build-time Antivirus scans for container images using ClamAV

Your application, built with Tanzu Build Service and components from VMware Application Catalog, is now complete and stored in your Harbor registry. And since you use VAC, you also have your own “marketplace” of applications that is curated by a (security) team in your organization.

If you want to see VAC in action, have a look at this YouTube video.

Note: Yes, VAC is a SaaS-hosted application and you may have concerns because you are a public/federal customer. That’s no problem. Consider VAC as your trusted source where you can copy things from. No data is stored in the public cloud, nor does anything run up there. Download your packages from this trusted repository to your air-gapped environment.

Run – Tanzu Kubernetes Grid (TKG)

Your application is ready to be deployed, and the next step in your pipeline is “continuous deployment”. We can finally deploy our applications to a Kubernetes cluster.

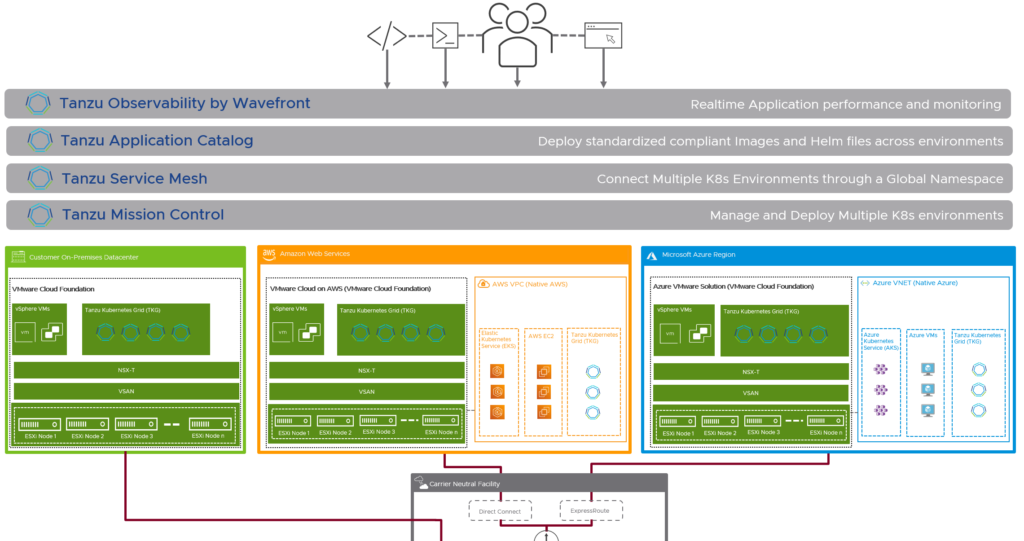

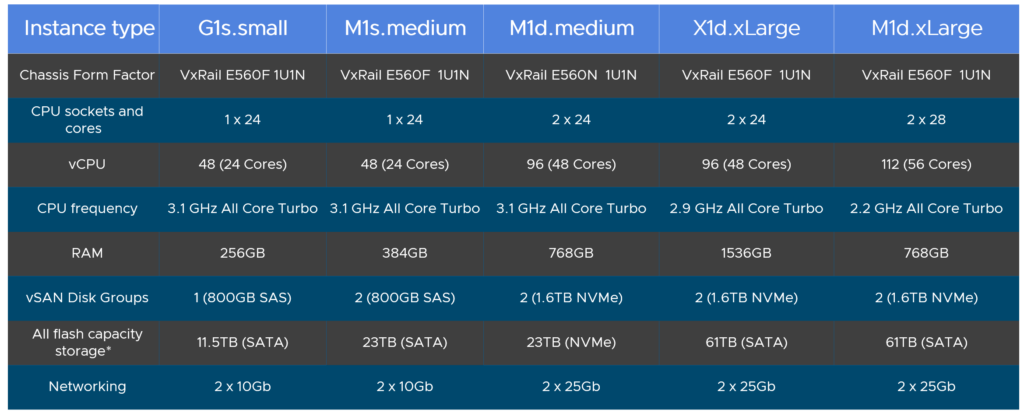

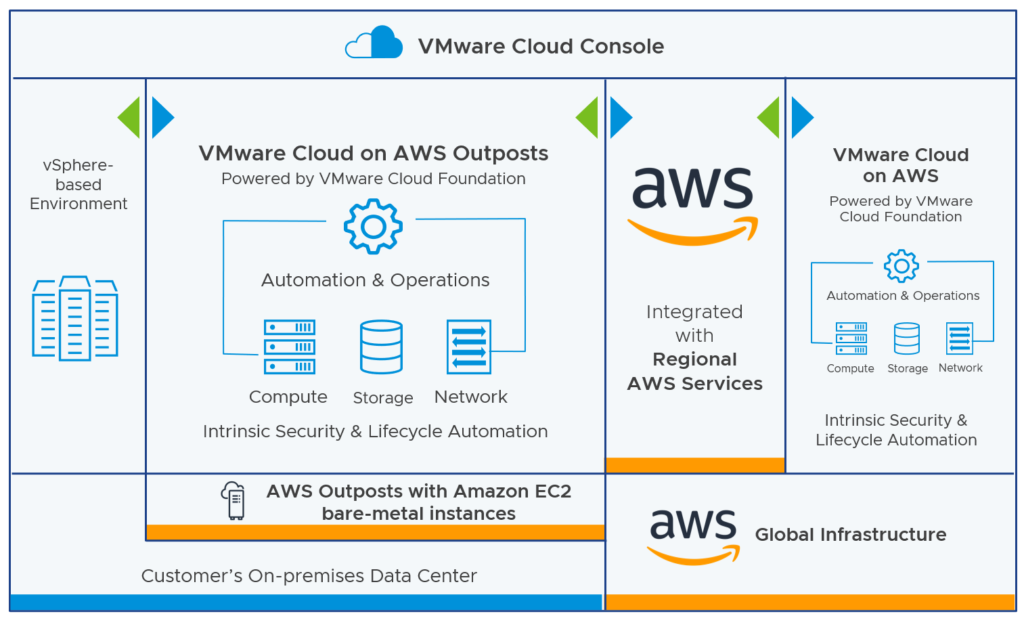

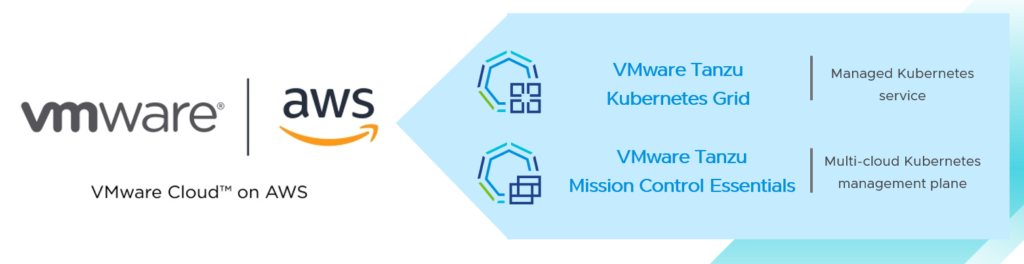

Tanzu Kubernetes Grid or TKG is VMware’s own consistent and conformant Kubernetes distribution that can run in any cloud. VMware’s strategy is about running the same Kubernetes dial tone across data centers and public cloud, which enables a consistent and secure experience for your developers.

TKG has a tight integration with vSphere called “vSphere with Tanzu”. Since TKG is an enterprise-ready Kubernetes distribution for multi-cloud infrastructure, it can also run in all major public clouds.

If consistent automation is important to you and you want to run Kubernetes in an air-gapped environment, where there is no AWS, Azure or any other major public cloud provider, then a consistent Kubernetes distribution like TKG would add value to your infrastructure.

Manage/Operate – Tanzu Mission Control (TMC)

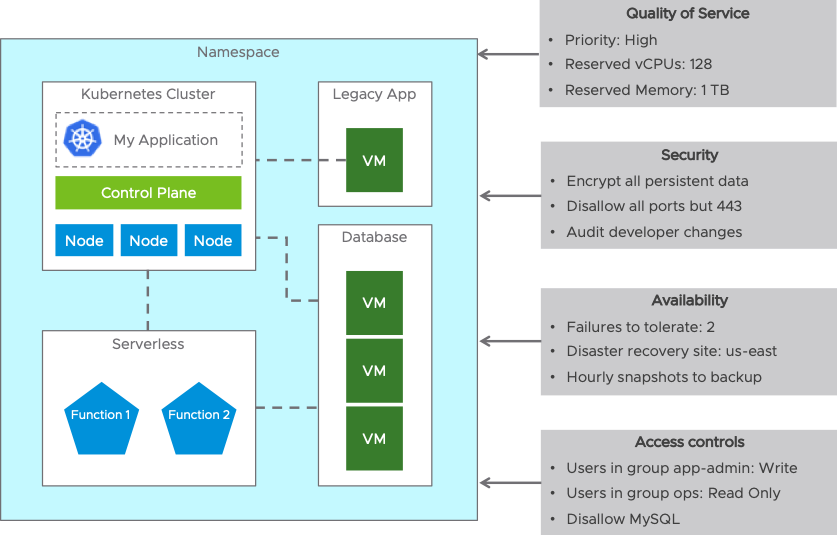

How do we manage these applications on any Kubernetes cluster (VMware TKG, Amazon EKS, Microsoft AKS, Google GKE), that can run in any cloud?

Some organizations started with TKG and others already started with managed Kubernetes offerings like EKS, AKS or GKE. That’s not a problem. The question here is how you deploy, manage, operate, and secure all these different clusters.

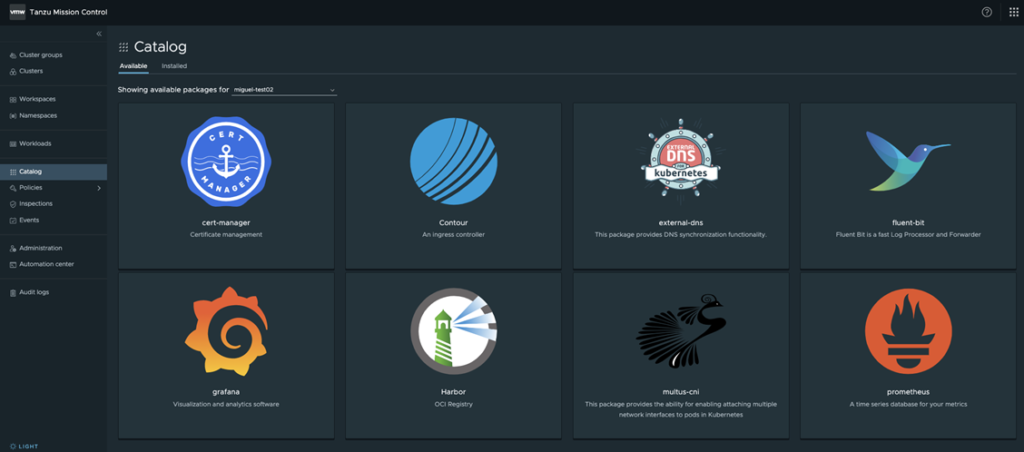

VMware’s solution for that is Tanzu Mission Control (TMC), a SaaS-based tool hosted by VMware. It is the first offering I’m going to cover that is part of a global Tanzu control plane. TMC makes your multi-cloud and multi-cluster Kubernetes management much easier.

With TMC you’ll get:

- Centralized Cluster Lifecycle Management. TMC enables automated provisioning and lifecycle management of TKG clusters across any cloud. It provides centralized provisioning, scaling, upgrading and deletion functions for your Kubernetes clusters. Tanzu Mission Control also allows you to attach any CNCF-conformant Kubernetes cluster (K8s on-prem, K8s in public cloud, TKG, EKS, AKS, GKE, OpenShift) to the platform for management, visibility, and analytics purposes. I would expect that, in the future, we can use TMC to manage the lifecycle of managed offerings like EKS, AKS or GKE.

- Centralized Policy Management. TMC has a very powerful policy engine to apply consistent policies across clusters and clouds. You can create security, access, network, quota, registry, and custom policies (Open Policy Agent framework).

- Identity and Access Management. Another important feature you don’t want to miss with DevSecOps in mind is centralized authentication and authorization, and identity federation from multiple sources like AD, LDAP and SAML. Make sure you give the right people or project teams the right access to the right resources.

- Cluster Inspection. There are two inspections that you can run against your Kubernetes clusters. TMC leverages the built-in open-source project Sonobuoy, which makes sure your clusters are configured in conformance with the Cloud Native Computing Foundation (CNCF) standards. Tanzu Mission Control provides a CIS Benchmark inspection as another option.
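The registry policy type listed under Centralized Policy Management is a good example of what such a policy enforces. The Python sketch below checks which container images of a workload come from a registry that is not on an allow list. It is a simplified illustration and not the TMC policy engine. The registry host `harbor.example.com` and the helper names are hypothetical, and the rule used to find the registry part of an image reference follows the common Docker convention.

```python
from typing import Iterable, List, Set


def registry_of(image: str) -> str:
    """Return the registry host of an image reference.

    By Docker convention the first path component is a registry only if it
    contains a dot or a colon, or is "localhost". Otherwise the image is
    pulled from Docker Hub.
    """
    first, separator, _rest = image.partition("/")
    if separator and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def registry_violations(images: Iterable[str], allowed: Set[str]) -> List[str]:
    """Return the images whose registry is not on the allow list."""
    return [image for image in images if registry_of(image) not in allowed]


if __name__ == "__main__":
    workload_images = [
        "harbor.example.com/shop/orders:1.4",  # private registry (hypothetical)
        "nginx:1.21",                          # implicit Docker Hub
    ]
    print(registry_violations(workload_images, {"harbor.example.com"}))
    # prints ['nginx:1.21']
```

Applying one allow list to all clusters is what makes the policy consistent: every cluster rejects the same images, no matter which cloud it runs in.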

Tanzu Mission Control integrates with other Tanzu products like Tanzu Observability and Tanzu Service Mesh, which I cover later.

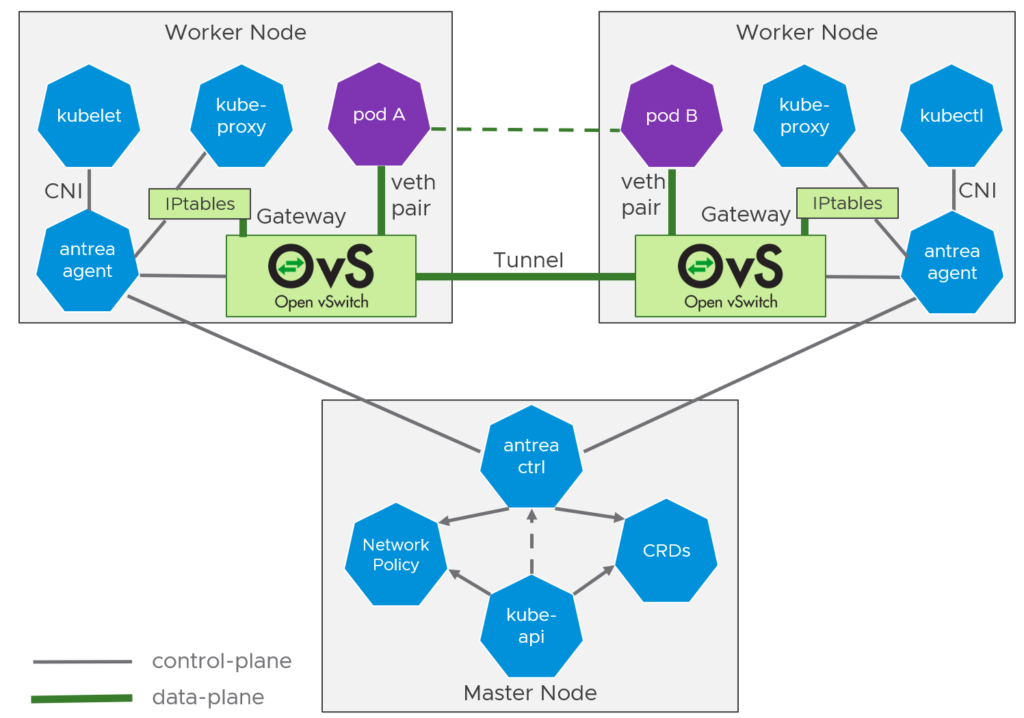

Connect – Antrea

VMware Tanzu uses Antrea as the default container network interface (CNI) and Kubernetes NetworkPolicy to provide network connectivity and security for your pods. Antrea is an open-source project with active contributors from Intel, Nvidia/Mellanox and VMware, and it supports multiple operating systems and managed Kubernetes offerings like EKS, AKS or GKE!

Antrea uses Open vSwitch (OVS) as the networking data plane in every Kubernetes node. OVS is a high-performance and programmable virtual switch that not only supports Linux, but also Windows. VMware is working towards feature parity between them, and is even working on support for ARM hosts in addition to x86 hosts.

Antrea creates overlay networks using VXLAN or Geneve for encapsulation and encrypts node-to-node communication if needed.
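Since Antrea enforces standard Kubernetes NetworkPolicy objects, a small example helps to show what such a policy looks like. The Python sketch below builds a `networking.k8s.io/v1` NetworkPolicy that only allows pods with one set of labels to reach pods with another set of labels on a single TCP port. The policy name, namespace, labels and port are hypothetical, and the `build_network_policy` helper is just an illustration.

```python
import json
from typing import Any, Dict


def build_network_policy(
    name: str,
    namespace: str,
    target_labels: Dict[str, str],
    allowed_labels: Dict[str, str],
    port: int,
) -> Dict[str, Any]:
    """Build a NetworkPolicy that allows ingress to the target pods only
    from pods carrying allowed_labels, and only on the given TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only these pods may connect.
                    "from": [{"podSelector": {"matchLabels": allowed_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = build_network_policy(
        name="allow-frontend-to-orders",
        namespace="shop",
        target_labels={"app": "orders"},
        allowed_labels={"app": "frontend"},
        port=8080,
    )
    # kubectl accepts JSON as well as YAML manifests.
    print(json.dumps(policy, indent=2))
```

Once a pod is selected by a policy like this, all other ingress traffic to it is denied, which is the default-deny behavior defined by the Kubernetes NetworkPolicy API.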

Connect & Secure – NSX Advanced Load Balancer

Ingress is a very important component of Kubernetes and lets you configure how an application can or should be accessed. It is a set of routing rules that describe how traffic is routed to an application inside of a Kubernetes cluster. So, getting an application up and running is only half of the story. The application still needs a way for users to access it. If you would like to know more about “ingress”, I can recommend this short introduction video.

While Contour is a great open-source project, VMware recommends Avi (aka NSX Advanced Load Balancer), which provides many more enterprise-grade features like L4 load balancing, L7 ingress, security/WAF, GSLB and analytics. If stability, enterprise support, resiliency, automation, elasticity, and analytics are important to you, then Avi Enterprise, a true software-defined multi-cloud application delivery controller, is definitely the better fit.
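To illustrate what “a set of routing rules” means, here is a small Python sketch of host and path based routing. It loosely mimics the prefix matching of Kubernetes Ingress, where paths are compared element by element and the longest matching prefix wins. It is a toy model and not how any ingress controller is implemented. The hostnames, paths and service names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class IngressRule:
    host: str
    path_prefix: str
    service: str  # hypothetical backend service name


def _elements(path: str) -> List[str]:
    """Split a URL path into its non-empty elements."""
    return [part for part in path.split("/") if part]


def path_matches(prefix: str, path: str) -> bool:
    """Element-wise prefix match: /api matches /api/orders but not /apix."""
    prefix_parts = _elements(prefix)
    return _elements(path)[: len(prefix_parts)] == prefix_parts


def route(rules: List[IngressRule], host: str, path: str) -> Optional[str]:
    """Return the service of the longest matching rule, or None."""
    candidates = [
        rule for rule in rules
        if rule.host == host and path_matches(rule.path_prefix, path)
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda rule: len(_elements(rule.path_prefix)))
    return best.service


RULES = [
    IngressRule("shop.example.com", "/", "frontend"),
    IngressRule("shop.example.com", "/api", "orders-api"),
]

if __name__ == "__main__":
    print(route(RULES, "shop.example.com", "/api/orders"))  # orders-api
    print(route(RULES, "shop.example.com", "/cart"))        # frontend
    print(route(RULES, "other.example.com", "/"))           # None
```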

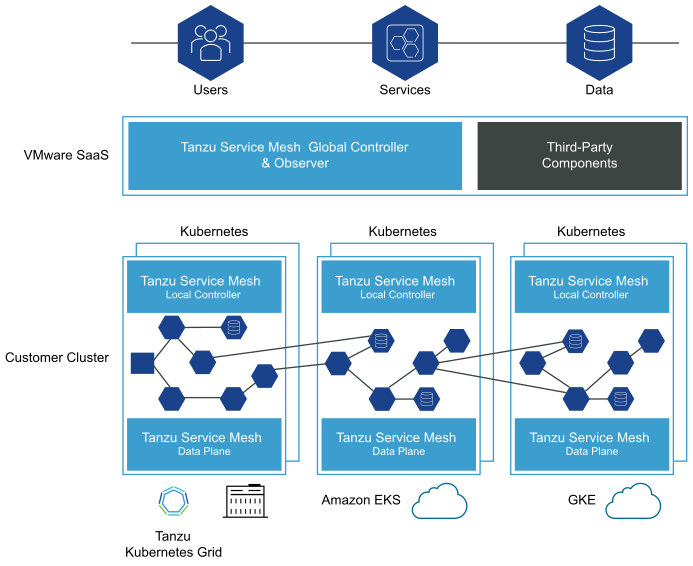

Secure – Tanzu Service Mesh (TSM)

Let’s take a step back and recap what we have achieved so far. We have a standardized and automated application supply chain, with signed container images, that can be deployed in any conformant Kubernetes cluster. We can also access the application from outside, and we have pod-to-pod communication, so that applications can talk to each other. So far, so good.

Is there maybe another way to stitch these services together or “offload” security from the containers? What if I have microservices or applications running in different clouds, that need to securely communicate with each other?

A lot of vendors including VMware realized that the network is the fabric that brings microservices together, which in the end form the application. With modernized or partially modernized apps, different Kubernetes offerings and a multi-cloud environment, we will find the reality of hybrid applications which sometimes run in multiple clouds.

This is the moment when you need to think about the connectivity and communication between your app’s microservices. Today, many Kubernetes users do that by implementing a service mesh, and Istio is most probably the most widely used open-source project for that.

The thing with service mesh is that, while everyone thinks it sounds great, it brings new challenges of its own. The installation and configuration of Istio is not that easy and takes time. Besides that, Istio is typically tied to a single Kubernetes cluster, and so is the Istio data plane, while organizations usually prefer to keep their Kubernetes clusters independent from each other. This leaves us with security and policies tied to a Kubernetes cluster or cloud vendor, which leaves us with silos.

Tanzu Service Mesh, built on VMware NSX, is an offering that delivers an enterprise-grade service mesh on top of a VMware-administered Istio version.

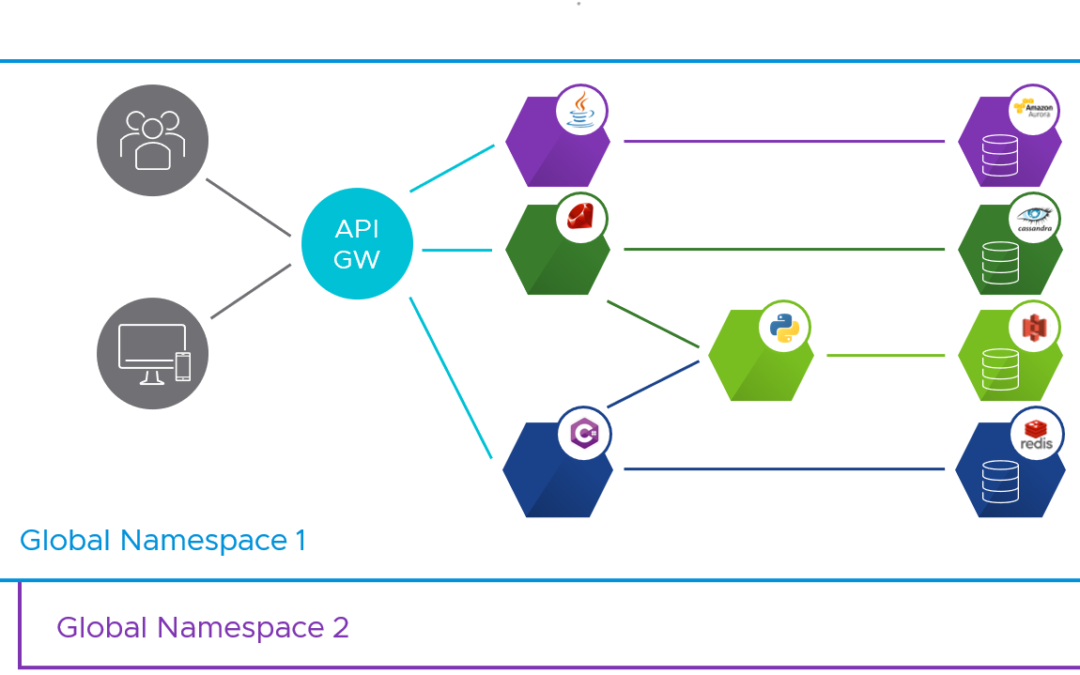

The big difference and the value that comes with Tanzu Service Mesh (TSM) is its ability to support cross-cluster and cross-cloud use cases via Global Namespaces.

A Global Namespace (GNS) is a unique concept in Tanzu Service Mesh. It connects the resources and workloads that form the application into a virtual unit. Each GNS is an isolated domain that provides automatic service discovery and manages the following functions that are part of it, no matter where they are located:

- Identity. Each global namespace has its own certificate authority (CA) that provisions identities for the resources inside that global namespace.

- Discovery (DNS). The global namespace controls how one resource can locate another and provides a registry.

- Connectivity. The global namespace defines how communication can be established between resources and how traffic within the global namespace and external to the global namespace is routed between resources.

- Security. The global namespace manages security for its resources. In particular, the global namespace can enforce that all traffic between the resources is encrypted using mutual Transport Layer Security (mTLS) authentication.

- Observability. Tanzu Service Mesh aggregates telemetry data, such as metrics for services, clusters, and nodes, inside the global namespace.
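The discovery function in the list above can be pictured with a toy model. The Python sketch below treats a global namespace as a domain plus a set of member (cluster, namespace) pairs, and resolves a service name to all member locations where that service runs. This is purely conceptual and says nothing about how Tanzu Service Mesh works internally. The class, the domain `shop.example.internal` and the cluster names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

Location = Tuple[str, str]  # (cluster name, Kubernetes namespace)


@dataclass
class GlobalNamespace:
    name: str
    domain: str
    members: Set[Location] = field(default_factory=set)
    services: Dict[str, List[Location]] = field(default_factory=dict)

    def register(self, service: str, cluster: str, namespace: str) -> None:
        """Register a service instance; only member locations may join."""
        location = (cluster, namespace)
        if location not in self.members:
            raise ValueError(f"{location} is not part of global namespace {self.name}")
        self.services.setdefault(service, []).append(location)

    def resolve(self, fqdn: str) -> List[Location]:
        """Resolve <service>.<domain> to every location running that service."""
        suffix = "." + self.domain
        if not fqdn.endswith(suffix):
            return []  # names outside the domain are invisible to this namespace
        return list(self.services.get(fqdn[: -len(suffix)], []))


if __name__ == "__main__":
    gns = GlobalNamespace(
        name="shop",
        domain="shop.example.internal",
        members={("eks-prod", "shop"), ("tkg-onprem", "shop")},
    )
    gns.register("orders", "eks-prod", "shop")
    gns.register("orders", "tkg-onprem", "shop")
    print(gns.resolve("orders.shop.example.internal"))
    # prints [('eks-prod', 'shop'), ('tkg-onprem', 'shop')]
```

The point of the model is the isolation boundary: a service is reachable by name across clusters and clouds, but only for workloads that belong to the same global namespace.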

Monitor – Tanzu Observability (TO)

Another important part of DevSecOps with VMware Tanzu is observability. What happens if something goes wrong? What are you doing when an application is not working anymore as expected? How do you troubleshoot a distributed application, split in microservices, that potentially runs in multiple clouds?

Imagine an application split into different smaller services, each running in a pod, which could be running in a virtual machine on a specific host in your on-premises data center, at the edge, or somewhere in the public cloud.

You need a tool that supports the architecture of a modern application. You need a solution that understands and visualizes cloud native applications.

That’s when VMware suggests Tanzu Observability to provide you with observability and deep visibility across your DevSecOps environment.

Tanzu Observability has an integration with Tanzu Mission Control, which can then install the Wavefront Kubernetes collector on your Kubernetes clusters. The name “Wavefront” comes from the company Wavefront, which VMware acquired in 2017.

Since Tanzu Observability is only offered as a SaaS version, I would like to highlight that it is “secure by design” according to VMware:

- Isolation of customer data

- User & Service Account Authentication (SSO, LDAP, SAML)

- RBAC & Authorization

- Data encryption at rest and in transit

- Data at rest is managed by AWS S3 (protected by KMS)

- Certifications like ISO 27001/27017/27018 or SOC 2 Type 1

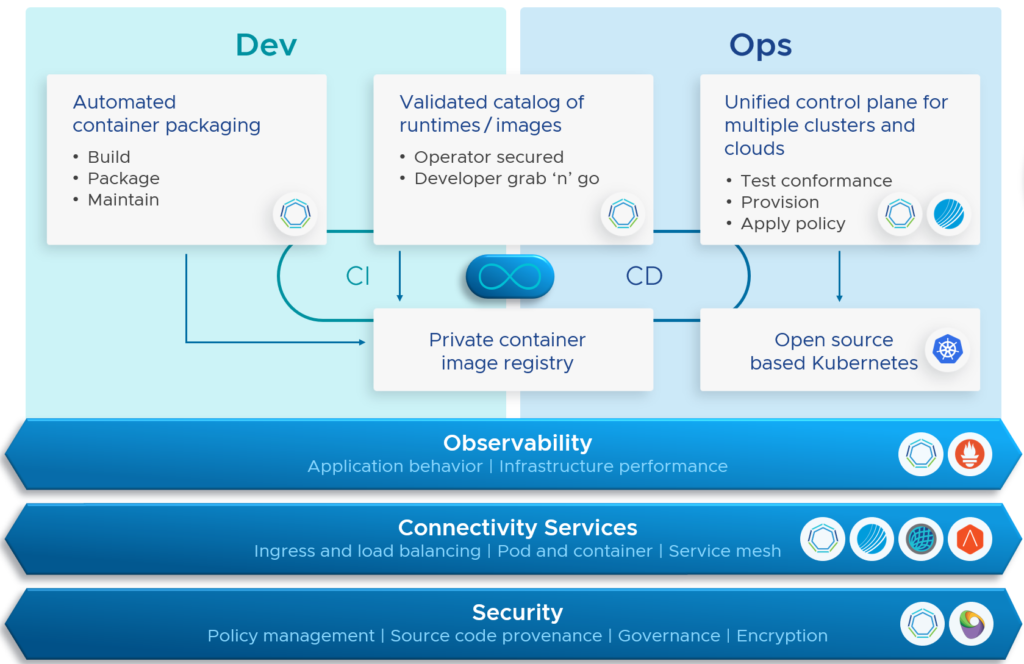

Summary – Tanzu Portfolio Capabilities

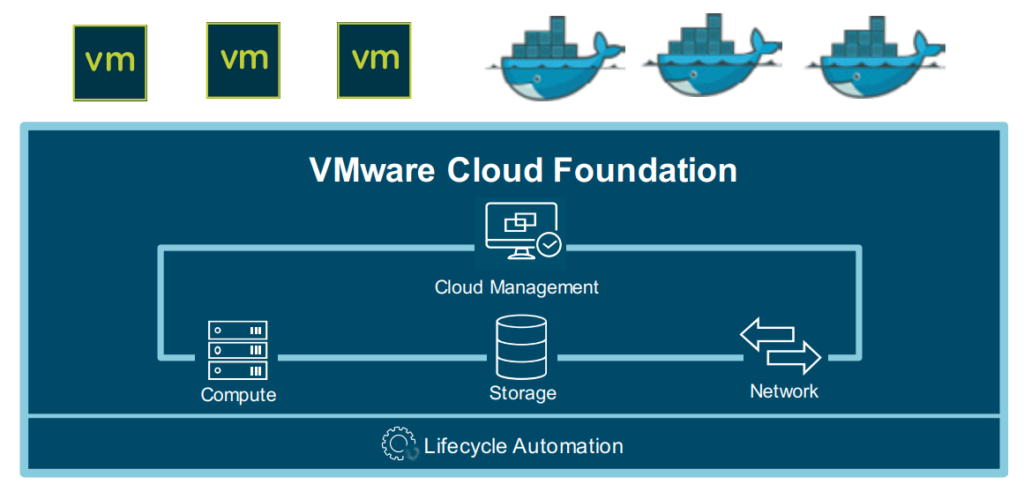

The container build and deploy process consists of the Spring runtime, VMware Application Catalog and Tanzu Build Service.

The global control plane (SaaS) is formed by Tanzu Mission Control, Tanzu Service Mesh and Tanzu Observability.

The networking layer consists of NSX Advanced Load Balancer for ingress & load balancing and uses Antrea for container networking.

The foundation of this architecture is built on VMware’s Kubernetes runtime called Tanzu Kubernetes Grid.

Note: There are other components like Application Transformer or Tanzu SQL (part of Tanzu Data Services), which I haven’t covered in this article.

Secure – Carbon Black Cloud Container

Another solution that might be of interest to you is Carbon Black Container. CB Container also provides the visibility and control that DevSecOps teams need to secure Kubernetes clusters and the applications they deploy on top of them.

This solution provides a container vulnerability & risk dashboard, image scanning, compliance policy scanning, CI/CD integration and integration with Harbor, and it supports any upstream Kubernetes like TKG, EKS, AKS, GKE or OpenShift.

Conclusion

DevSecOps with VMware Tanzu helps you to simplify and secure the whole container and application lifecycle. VMware has made some strategic acquisitions (Heptio, Pivotal, Bitnami, Wavefront, Octarine, Avi Networks, Carbon Black) in the past to become a major player in the world of containerization, Kubernetes and application modernization.

I personally believe that VMware’s approach and Tanzu portfolio have a very strong position in the market. Their modular approach and the inclusion of open-source projects is a big differentiator. Tanzu is not just about Kubernetes, it’s about building, securing and managing the applications.

If you have a strong security focus, VMware can cover all the layers up from the hypervisor to the applications that can be deployed in any cloud. That’s the strength and unique value of VMware: a complete and diverse portfolio with products that provide even more value when combined.

Don’t forget that VMware is number 1 when it comes to data center infrastructures and most of the customer workloads are still running on-premises. That’s why I believe that VMware and their Tanzu portfolio are very well positioned.

In case you missed the announcements a few weeks ago, check out Tanzu Application Platform and Tanzu for Kubernetes Operations that meet the needs of all those who are concerned with DevSecOps!

And if you would like to know more about VMware Tanzu in general, have a look at my “10 Things You Didn’t Know About VMware Tanzu” article.
