What Is Unique About Oracle Cloud VMware Solution?

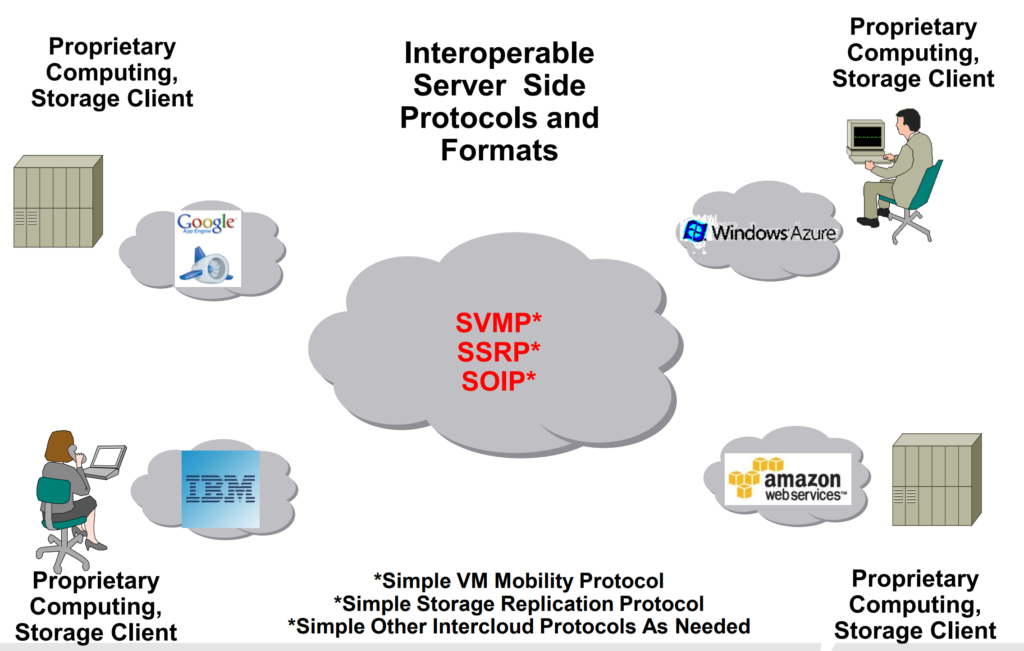

Everyone talks about multi-cloud, and in most cases they mean the so-called big 3: Amazon Web Services (AWS), Microsoft Azure and Google Cloud. If we look at the 2021 Gartner Magic Quadrant for Cloud Infrastructure & Platform Services, we can also spot Alibaba Cloud, Oracle, IBM and Tencent Cloud.

VMware has a strategic partnership with 6 of these hyperscalers and all of these 6 public clouds offer VMware’s software-defined data center (SDDC) stack on top of their global infrastructure:

- AWS – VMware Cloud on AWS (VMC on AWS)

- Microsoft – Azure VMware Solution (AVS)

- Google – Google Cloud VMware Engine (GCVE)

- Alibaba – Alibaba Cloud VMware Service (ACVS)

- IBM – IBM Cloud for VMware Solutions

- Oracle – Oracle Cloud VMware Solution (OCVS)

While I mostly have to talk about VMC on AWS, AVS and GCVE, I am finally getting the chance to attend an OCVS customer workshop led by Oracle. That is why I wanted to prepare myself accordingly and share my learnings with you.

Amazon Web Services, Microsoft Azure and Google Cloud dominate the cloud market, but Oracle has unique capabilities and characteristics that no one else can deliver. Additionally, Oracle Cloud Infrastructure (OCI) has shown an impressive pace of innovation in the past two years, which led to an increase of 16 percentage points on Gartner’s solution scorecard for OCI (November 2021, from 62% to 78%) and put Oracle in fourth place, behind Alibaba Cloud!

What is Oracle Cloud VMware Solution?

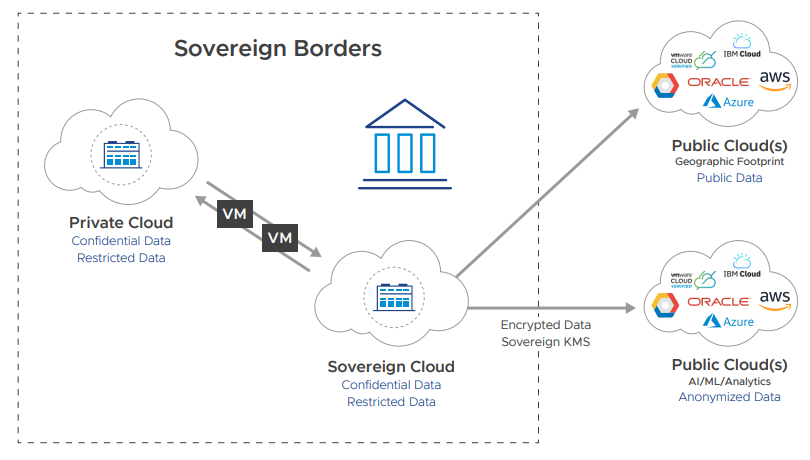

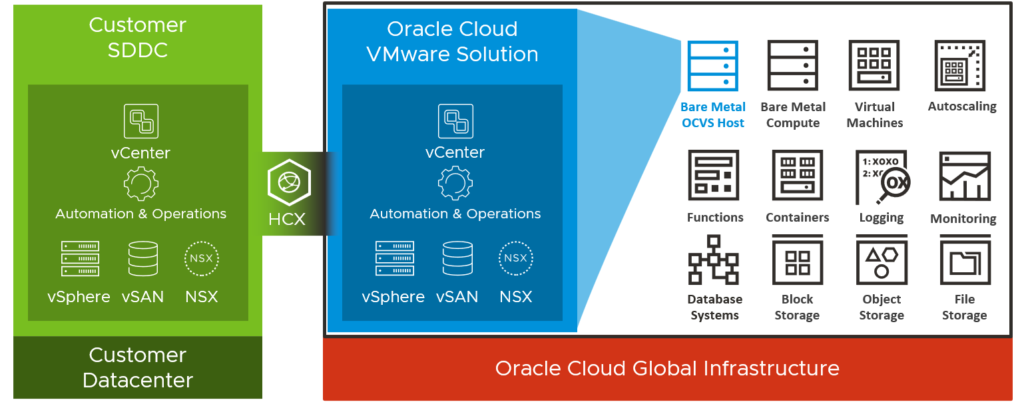

Oracle Cloud VMware Solution, or OCVS, is the result of the strategic partnership announced by VMware and Oracle in September 2019. Like other VMware Cloud solutions such as VMC on AWS, AVS or GCVE, Oracle Cloud VMware Solution enables customers to run VMware Cloud Foundation on Oracle’s Generation 2 Cloud Infrastructure.

This means that combining an on-premises VMware-based infrastructure with OCVS should make cloud migrations easier and faster, because both sides share the same foundation of vSphere, vSAN and NSX.

Key Differentiator #1 – Different SDDC Bundles


Customers can choose between a multi-host SDDC (minimum of 3 production hosts) and a single-host SDDC that is made for test and dev environments. Oracle guarantees a monthly uptime percentage of at least 99.9% for the OCVS service.
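To put the 99.9% figure into perspective, here is a minimal sketch that converts an uptime percentage into a monthly downtime budget. It assumes a 30-day month and ignores any exclusions or measurement rules in Oracle’s actual SLA terms; the function name is hypothetical and only used for illustration.

```python
def allowed_downtime_minutes(uptime_percent: float, days_in_month: int = 30) -> float:
    """Return the downtime budget in minutes for one month.

    Assumption: a flat 30-day month by default; real SLA terms may
    define the measurement period and exclusions differently.
    """
    total_minutes = days_in_month * 24 * 60
    return total_minutes * (100.0 - uptime_percent) / 100.0


budget = allowed_downtime_minutes(99.9)
print(f"Downtime budget at 99.9% uptime: {budget:.1f} minutes per month")
```

Under these assumptions, 99.9% leaves roughly 43 minutes of downtime per month.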

OCVS offers three different ESXi software versions and supports the following versions of other components:

- ESXi 7.0, 6.7 or 6.5

- vCenter 7.0, 6.7 or 6.5

- vSAN 7.0, 6.7 or 6.5

- NSX-T 3.0

- HCX Advanced 4.0, 3.5 (default option)

- HCX Enterprise (billed upgrade)

Note: vSphere 6.5 and vSphere 6.7 reach the End of General Support from VMware on October 15, 2022.

Key Differentiator #2 – Customer-Managed & Baremetal Hosts

The VMware Cloud offerings from AWS, Azure or Google are all vendor-controlled and customers get limited access to the VMware hosts and infrastructure components. With Oracle Cloud VMware Solution, customers get baremetal servers and the same operational experience as on-premises. This means full control over VMware infrastructure and its components:

- SSH access to ESXi

- Edit vSAN cluster settings

- Browse datastores; upload and delete files

- Customer controls the upgrade policy (version, time, defer)

- Oracle has NO ACCESS after the SDDC provisioning!

Note: According to Oracle it takes about 2 hours to deploy a new SDDC that consists of 3 production hosts.

Customers can choose between Intel- and AMD-based hosts:

- Two-socket BM.DenseIO2.52 with two CPUs each running 26 cores (Intel)

- Two-socket BM.DenseIO.E4.128 with two CPUs each running 16 cores (AMD)

- Two-socket BM.DenseIO.E4.128 with two CPUs each running 32 cores (AMD)

- Two-socket BM.DenseIO.E4.128 with two CPUs each running 64 cores (AMD)

Details about the compute shapes can be found here.
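As a rough sizing aid, the host options listed above can be written down as data to compare total cores per host and per cluster. This is only a sketch: the `HostShape` class and `cluster_cores` helper are hypothetical names, and the numbers are simply the socket and core counts from the list above, not values pulled from an Oracle API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostShape:
    name: str
    vendor: str
    sockets: int
    cores_per_socket: int

    @property
    def total_cores(self) -> int:
        # Physical cores per host: sockets times cores per socket.
        return self.sockets * self.cores_per_socket


# Values taken from the list of host options in this article.
SHAPES = [
    HostShape("BM.DenseIO2.52", "Intel", 2, 26),
    HostShape("BM.DenseIO.E4.128", "AMD", 2, 16),
    HostShape("BM.DenseIO.E4.128", "AMD", 2, 32),
    HostShape("BM.DenseIO.E4.128", "AMD", 2, 64),
]


def cluster_cores(shape: HostShape, hosts: int) -> int:
    """Total physical cores for a cluster built from identical hosts."""
    return shape.total_cores * hosts


for shape in SHAPES:
    print(f"{shape.name} ({shape.vendor}): {shape.total_cores} cores per host, "
          f"{cluster_cores(shape, 3)} cores in a 3-host SDDC")
```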

Key Differentiator #3 – Availability Domains

To provide high throughput and low latency, an OCVS SDDC is deployed by default across a minimum of three fault domains within a single availability domain in a region. Upon request, however, it is also possible to deploy your SDDC across multiple availability domains (ADs), which comes with a few limitations:

- While OCVS can scale from 3 up to 64 hosts in a single SDDC, Oracle recommends a maximum of 16 ESXi hosts in a multi-AD architecture

- This architecture can have impacts on vSAN storage synchronization, and rebuild and resync times
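The host-count limits above can be captured in a small planning check. This is a minimal sketch under the numbers stated in this article (3 to 64 hosts per multi-host SDDC, a recommended maximum of 16 hosts in a multi-AD design); the constant and function names are hypothetical, and the check does not cover the single-host test and dev SDDC.

```python
from typing import List

# Limits as stated in this article; not read from any Oracle API.
MIN_PRODUCTION_HOSTS = 3
MAX_HOSTS_PER_SDDC = 64
RECOMMENDED_MAX_HOSTS_MULTI_AD = 16


def check_host_count(hosts: int, multi_ad: bool = False) -> List[str]:
    """Return a list of findings for a planned multi-host SDDC (empty if none)."""
    findings: List[str] = []
    if hosts < MIN_PRODUCTION_HOSTS:
        findings.append(
            f"{hosts} hosts is below the minimum of {MIN_PRODUCTION_HOSTS} production hosts"
        )
    if hosts > MAX_HOSTS_PER_SDDC:
        findings.append(
            f"{hosts} hosts exceeds the maximum of {MAX_HOSTS_PER_SDDC} hosts per SDDC"
        )
    if multi_ad and hosts > RECOMMENDED_MAX_HOSTS_MULTI_AD:
        findings.append(
            f"{hosts} hosts exceeds the recommended maximum of "
            f"{RECOMMENDED_MAX_HOSTS_MULTI_AD} hosts for a multi-AD design"
        )
    return findings


for finding in check_host_count(20, multi_ad=True):
    print("Warning:", finding)
```

The multi-AD limit is treated as a warning rather than an error because the article describes it as a recommendation, not a hard cap.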

Most hyperscalers only let you use two availability zones and fault domains in the same region. With Oracle it is possible to distribute the minimum of 3 hosts across 3 different availability domains. An availability domain consists of one or more data centers within the same region.

Note: Traffic between ADs within a region is free of charge.

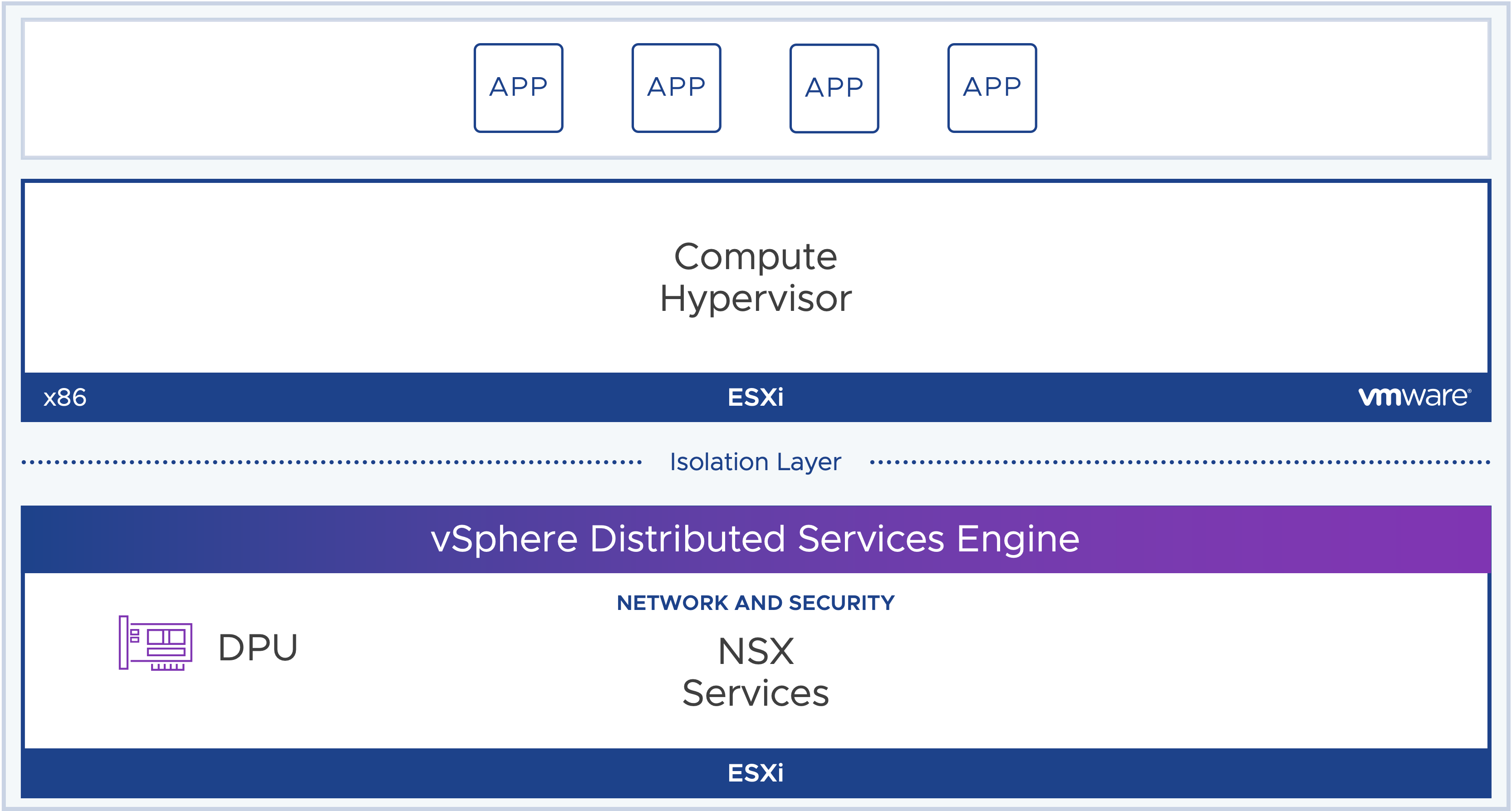

Key Differentiator #4 – Networking

Because OCVS is customer-managed and can be operated like your on-premises environment, you also get “full” control over the network. OCVS is installed within the customer’s tenancy, which gives customers the advantage of running their VMware SDDC workloads in the same subnet as OCI native services. This provides lower latency to the OCI native services, which matters especially for customers that are using Exadata, for example.

Another important advantage of this architecture is the capability to create VLAN-backed port groups on your vSphere Distributed Switch (VDS).

Key Differentiator #5 – External Storage

Since March 2022 the OCI File Storage service (NFS) is certified as secondary storage for an OCVS cluster. This allows customers to scale the storage layer of the SDDC without adding new compute resources at the same time.

And as announced on 22 August 2022 with Oracle’s summer ’22 release, OCVS customers can now connect to certified OCI Block Storage through iSCSI as a second external storage option.

Block Storage provides high IOPS on OCI, and data is stored redundantly across storage servers with built-in repair mechanisms, backed by a 99.99% uptime SLA.

Key Differentiator #6 – Billing Options

OCVS is currently only sold and supported by Oracle. Like with other cloud providers and VMware Cloud offerings, customers have different pricing options depending upon their commitment levels:

- On-demand (hourly)

- 1 month

- 1 year

- 3 years

The rule of thumb for any hyperscaler says that a 1-year commitment gets a discount of around 30% and a 3-year commitment a discount of around 50%.

The unique characteristic here is the monthly commitment option, which is calculated with a discount of 16-17% depending on the compute shape.
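To illustrate how these commitment levels compare, here is a minimal sketch that applies the approximate discounts mentioned above to an hourly list price. The list price of 100.0 is a made-up placeholder, not an Oracle price, the monthly discount uses the lower end of the 16-17% range, and the dictionary keys and function name are hypothetical.

```python
# Approximate discounts from this article; rules of thumb, not a price list.
DISCOUNTS = {
    "on_demand": 0.00,
    "monthly": 0.16,  # assumption: lower end of the 16-17% range
    "1_year": 0.30,
    "3_year": 0.50,
}


def effective_hourly_rate(list_rate: float, commitment: str) -> float:
    """Apply the approximate discount for a commitment level to an hourly list rate."""
    if commitment not in DISCOUNTS:
        raise ValueError(f"unknown commitment level: {commitment!r}")
    return list_rate * (1.0 - DISCOUNTS[commitment])


hypothetical_list_rate = 100.0  # placeholder value for illustration only
for level in DISCOUNTS:
    rate = effective_hourly_rate(hypothetical_list_rate, level)
    print(f"{level}: {rate:.2f} per host hour")
```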

Note: OCVS is not yet part of the VMware Cloud Universal subscription (VMCU).

Key Differentiator #7 – Global Reach

Currently, OCI is available in 39 different cloud regions (21 countries), and Oracle has announced five more by the end of 2022. On day one of each region, OCVS is available with consistent and predictable pricing that doesn’t vary from region to region.

To compare: AWS has launched 27 different regions with 19 being able to host the VMware Cloud on AWS service. In Switzerland, AWS just opened their new data center without having the VMware Cloud on AWS service available, while OCVS is already available in Zurich.

Use Cases

While OCVS is a great solution for joint VMware and Oracle customers, it is not necessary for customers to use Oracle Cloud Infrastructure native solutions.

Data Center Expansion

As you just learned, OCVS is a great fit if you want to maintain the same VMware software versions on-premises and in OCI. The classic use case here is the pure data center expansion scenario, which allows you to stretch your on-premises infrastructure to OCI without the need to use OCI native services.

VMware Horizon on OCVS

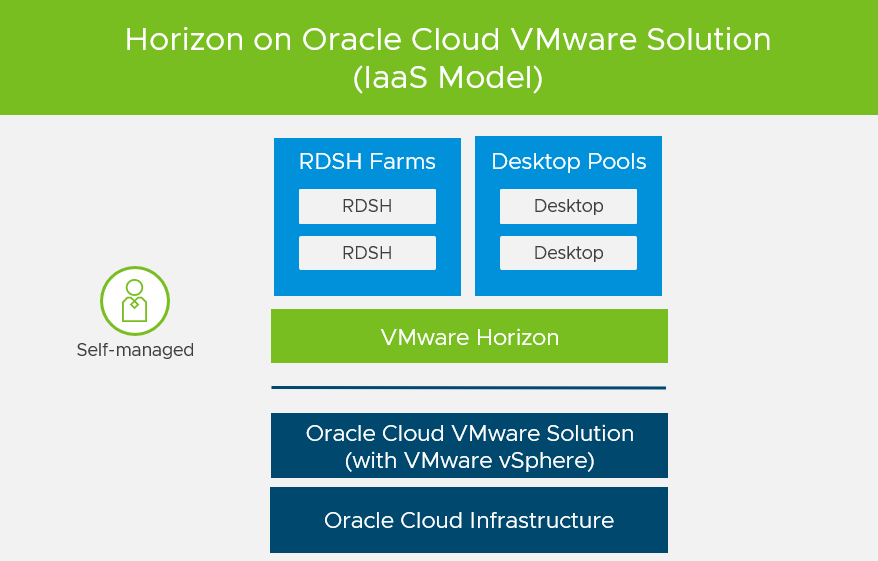

As I mentioned at the beginning, Oracle Cloud VMware Solution is based on VMware Cloud Foundation and so it is no surprise that Horizon on OCVS is fully supported.

The Horizon deployment on OCVS works a little bit differently compared to the on-premises installation, and there is no feature parity yet:

- Horizon on OCVS does not support vGPUs yet.

- Horizon on OCVS does not support IPv6 yet.

- Horizon on OCVS does not support vTPM yet. In this situation it is recommended to use shielded OCVS instances.

Note: Support for NSX Advanced Load Balancer (Avi) is still a roadmap item.

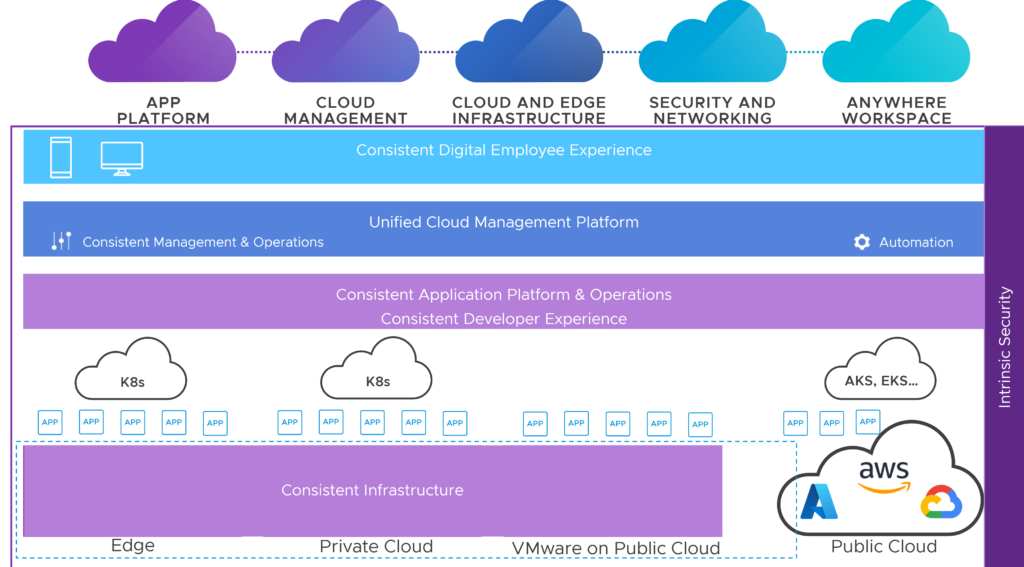

VMware Tanzu for OCVS

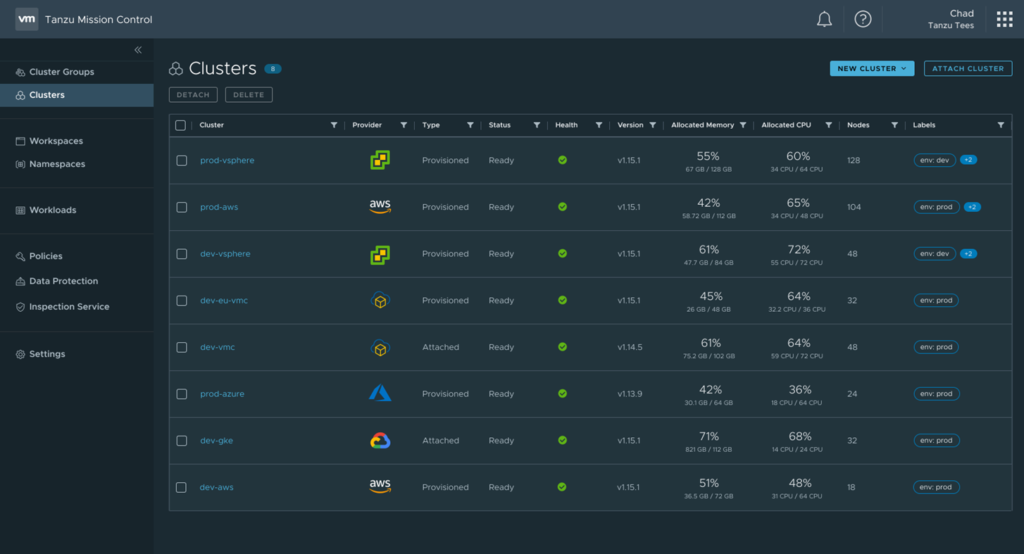

Since April 2022 it is possible for joint VMware and Oracle customers to use Tanzu Standard and its components with Oracle Cloud VMware Solution. Tanzu Standard comes with VMware’s Kubernetes distribution Tanzu Kubernetes Grid (TKG) and Tanzu Mission Control, which is the right solution for multi-cloud, multi-cluster K8s management.

With TMC you can deploy and manage TKG clusters on vSphere on-premises or on Oracle Cloud VMware Solution. You can even attach existing Kubernetes clusters from other vendors like Red Hat OpenShift, Amazon EKS or Azure Kubernetes Service (AKS).

Oracle Cloud VMware Solution FAQ

VMware’s OCVS FAQ can be found here.

Oracle’s OCVS FAQ can be found here.

Additional Resources

Here is a list of additional resources: